Neural Networks as Cybernetic Systems – Part 1

3rd and revised edition

Department of Biological Cybernetics, Bielefeld University, Universitätsstraße 25, 33615 Bielefeld, Germany. urn:nbn:de:0009-3-19884

Keywords: cybernetics, neurosciences, models, simulation, systems theory, ANN, neural networks

The task of a neuronal system is to guide an organism through a changing environment and to help it survive under varying conditions. These neuronal systems are probably the most complicated systems developed by nature. They provide the basis for a broad range of behaviors, from simple reflex actions to very complex and so far unexplained phenomena such as consciousness. Although a great amount of information on neural systems has been collected during this century, a deeper understanding of how neural systems function is still lacking. The situation appears to be so difficult that some skeptics speak of a crisis of the experimentalist. They argue that continuing to accumulate further details will not help us to understand the basic principles on which neural systems rely. The problem is that neuronal systems are too complicated to be understood intuitively when only the individual units, the neurons, are investigated. A better understanding could be gained, however, if the work of the experimentalist is paralleled and supported by theoretical approaches. An important theoretical tool is the development of quantitative models of systems that consist of a number of neurons. Such models are, by definition, simplified versions or representations of the real systems, and proposing them might make the properties of the more complicated real systems easier to comprehend.

The degree of simplification of such models, or in other words the level of abstraction, may of course differ. This text introduces two approaches that allow such models to be developed at two different levels. A more traditional approach to developing models of such systems is provided by systems theory. Other terms of similar meaning are cybernetics and control theory. Because the basic elements used for models in systems theory are known as filters (described in detail later), the term filter theory is also used.

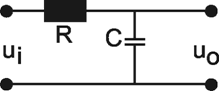

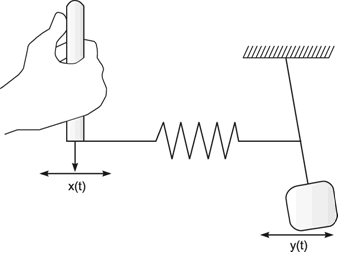

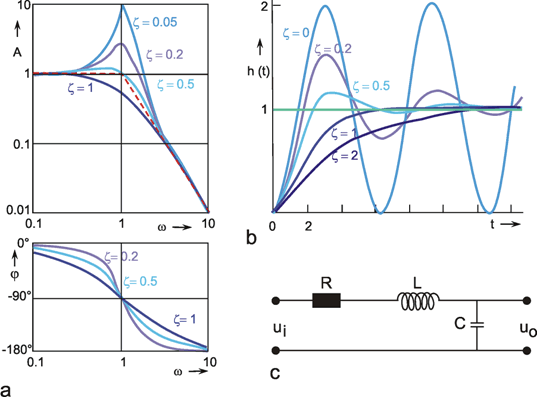

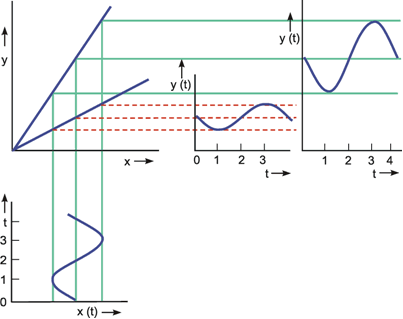

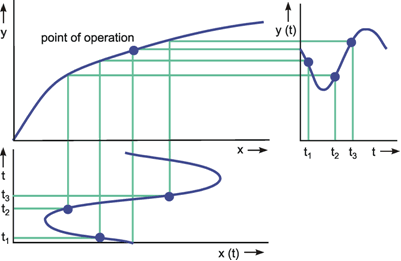

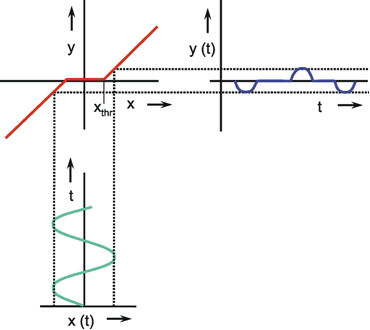

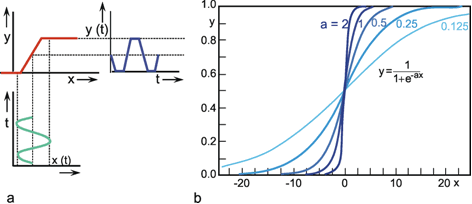

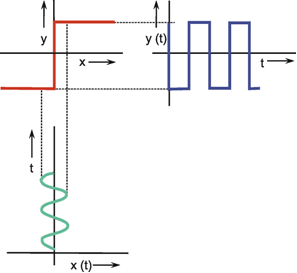

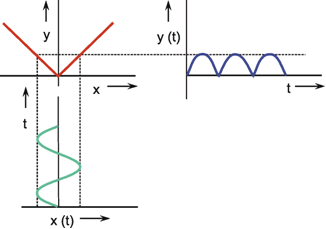

Systems theory was originally developed for electrical engineering. It allows us to consider a system on a very abstract level, to neglect its real physical construction completely, and to consider only its input-output properties. A system in terms of systems theory therefore has to have input channels and output channels that receive and produce values. One important property is that these values can vary with time and can therefore be described by time functions. The main interest of the systems theory approach therefore concerns the observation of dynamic processes, i. e., the relation between time-dependent input and output functions. Examples of such systems are an electrical system consisting of resistors and capacitors, as shown in Figure 1.1, or a mechanical system comprising a pendulum and a spring (Fig. 1.2). In the first case, the input and output functions are the voltages ui and uo. In the second case, they describe the position of the spring lever, x(t), and of the pendulum, y(t), respectively. An example of a biological system would be a population whose size (output) depends on the amount of food provided (input). Examples of neuron-based systems are reflex reactions to varying sensory inputs. A classic example is the optomotor response, which occurs in many animals and was intensively investigated in insects by Hassenstein, Reichardt, and co-workers in the 1950s and 1960s (Hassenstein 1958a, b, 1959; Hassenstein and Reichardt 1956; Reichardt 1957, 1973).

Although the systems theory approach is a powerful tool that can also be applied to the investigation of individual neurons, it is usually used for model systems far removed from the neuronal level. Early in the field of biological cybernetics, however, models were also considered whose elements correspond to neurons, albeit extremely simplified ones. This approach has gained considerable momentum during the last 10 years and is now known by terms such as artificial neural networks, massively parallel computation, or connectionist systems. Both fields, although they share common interests and are not included under the term cybernetics by accident, have developed separately, as demonstrated by the fact that they are covered in separate textbooks. With respect to the investigation of brain function, both fields overlap strongly, and their differences are quantitative rather than qualitative in nature. In this text, an attempt is made to treat both fields together, with the aim of showing that results obtained in one field can be applied fruitfully to the other, forming a common approach that might be called neural cybernetics.

Fig. 1.1 An example of an electric system containing a resistor R and a capacitor C. ui input voltage, uo output voltage

Fig. 1.2 An example of a mechanical system consisting of an inert pendulum and a spring. Input function x(t) and output function y(t) describe the position of the lever and the pendulum, respectively

One difference between the models in each field is that in typical systems theory models the information flow is concentrated in a small number of channels (generally between 1 and 5), whereas in a neural net this number is high (e. g., 10 to 10⁵ or more). A second difference is that, with very few exceptions, the neural net approach has so far considered only the static properties of such systems. Although the consideration of static systems is, of course, a good starting point, the dynamic properties of biological neural networks seem to be crucial, and investigations that do not take dynamics into account miss a very important point. Dynamics are the main subject of systems theory, but neural nets with dynamic properties do exist in the form of recurrent nets.

To combine both approaches, we begin with classic systems theory, describing the properties of basic linear filters, of simple nonlinear elements, and of simple recurrent systems (systems with feedback loops). Then the properties of simple, though massively parallel, feed-forward networks are discussed, followed by massively parallel recurrent networks. The next section discusses different methods of influencing the properties of such massively parallel systems by "learning." Finally, some more structured networks are discussed, which consist of different subnetworks ("agents"). The important question here is how the cooperation of such agents could be organized.

All of these models can be helpful to the experimentalist in two ways. One task of such a model, be it a systems theory (filter) model or a "neuronal" model, is to provide a concise description of the results of an experimental investigation. The second task, at least as important as the first, is to form a quantitative hypothesis concerning the structure of the system. This hypothesis can provide predictions regarding the behavior of the biological system in new experiments and can therefore be of heuristic value.

Modeling is an important scientific tool, in particular for the investigation of such complex systems as biological neural networks (BNN). Two approaches will be considered: (i) the methods of systems theory or cybernetics, which treat systems as having a small number of information channels and therefore consider them on a more abstract level, and (ii) the artificial neural network (ANN) approach, which reflects the fact that a great number of parallel channels is typical of BNNs.

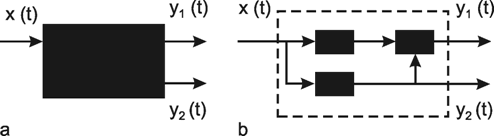

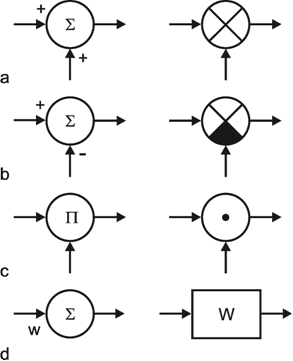

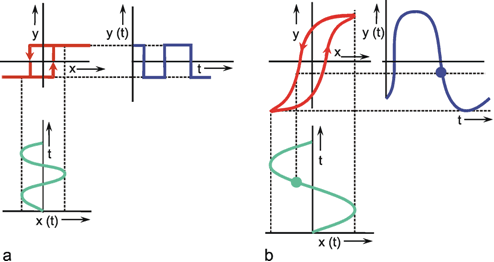

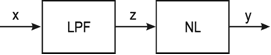

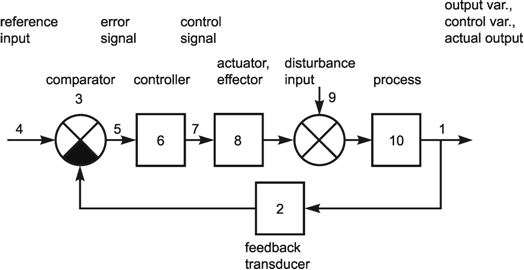

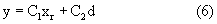

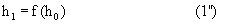

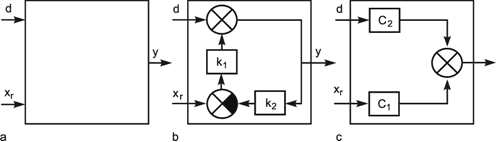

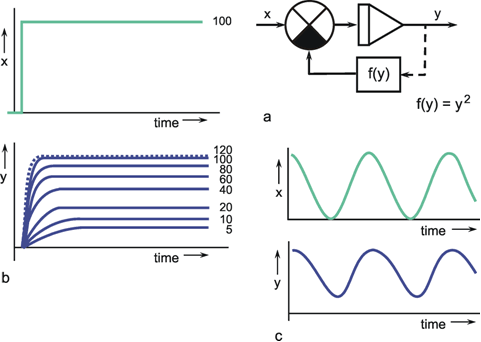

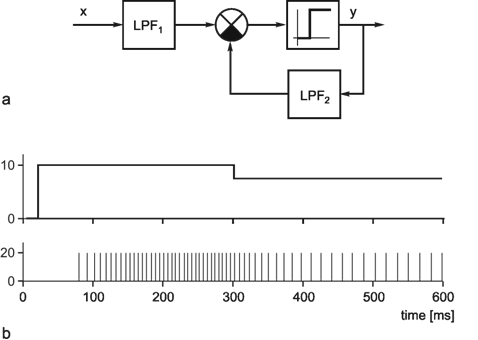

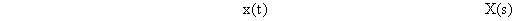

A system, in terms of systems theory, can be symbolized quite simply by a "black box" (Fig. 1.3a) with input and output channels. The input value is described by an input function x(t) and the output value by an output function y(t), both depending on time t. If one were able to look inside the black box, the system might be found to consist of a number of subsystems connected in different ways (e. g., Fig. 1.3b). One aim of this approach is to enable conclusions to be drawn about the internal structure of the system. In the symbols used throughout this text, an arrow shows the direction of signal flow in each channel. Each channel carries a value that is transported along the line without delay and without being changed in any way. A box means that the incoming values are changed; in other words, calculations are done only within a box. Summation of two input values is often shown by the symbol given in Fig. 1.4a (right side) or, in particular if there are more than two input channels, by a circle containing the summation symbol Σ (Fig. 1.4a, left). Figure 1.4b shows two symbols for subtraction. Multiplication of two (or more) input values is shown by a circle containing a dot or the symbol Π (Fig. 1.4c). The simplest calculation is multiplication by a constant w. This is often symbolized by writing w within the box (Fig. 1.4d, right). Another possibility, mostly used when drawing massively parallel systems, is to write the multiplication factor w beside the arrowhead (Fig. 1.4d, left).

Fig. 1.3 Schematic representation of a system. (a) black box. (b) view into the black box. x(t) input function, y1(t),y2(t) output functions

Fig. 1.4 Symbols used for (a) summation, (b) subtraction, and (c) multiplication of two (or more) input values and (d) for multiplication with a constant value

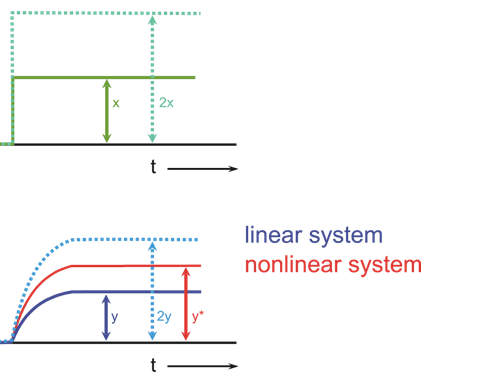

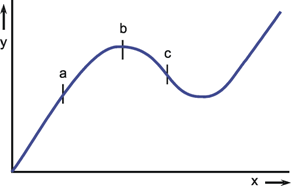

We can distinguish between linear and nonlinear systems. A system is considered linear if the following input-output relation holds: if y1 is the output belonging to the input x1, and y2 the output belonging to the input x2, then the input (x1 + x2) must produce the output (y1 + y2). In particular, the output must be proportional to the input: multiplying the input by a factor multiplies the output by the same factor. If a system does not meet these conditions, it is a nonlinear system.

This is illustrated in Figure 1.5 by two selected examples. Figure 1.5a shows the response to a step-like input function: if the input function rises sharply from zero to the value x, the output function of this system reaches the value y after a certain delay. If the amplitude of the input function is doubled to 2x, the values of the output function must also double in the case of a linear system (Fig. 1.5b, continuous line). If, however, the output function shown in Figure 1.5b with a dashed line (y* ≠ 2y) were obtained, the system would be nonlinear.
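The superposition condition can be checked numerically. The sketch below is a minimal illustration, not a method from the text: it assumes a discrete first-order low-pass filter (time constant `tau` and step size `dt` chosen arbitrarily) as the linear example and a squaring element as the nonlinear one, and compares the response to x1 + x2 with the sum of the individual responses. Feeding in x1 + x1 doubles the input, which tests the proportionality illustrated in Figure 1.5.

```python
import math

def lowpass(x, dt=0.01, tau=0.5):
    """Discrete first-order low-pass filter (forward Euler): a linear system."""
    y, out = 0.0, []
    for xi in x:
        y += dt / tau * (xi - y)
        out.append(y)
    return out

def squarer(x):
    """Static element y = x**2: a simple nonlinear counter-example."""
    return [xi * xi for xi in x]

def obeys_superposition(system, x1, x2, tol=1e-9):
    """True if system(x1 + x2) equals system(x1) + system(x2) sample by sample."""
    y1, y2 = system(x1), system(x2)
    y12 = system([a + b for a, b in zip(x1, x2)])
    return all(abs(c - (a + b)) < tol for a, b, c in zip(y1, y2, y12))

n = 500
x1 = [1.0] * n                                              # step input
x2 = [math.sin(2 * math.pi * 0.01 * k) for k in range(n)]   # sine input
print(obeys_superposition(lowpass, x1, x2))   # True: linear
print(obeys_superposition(lowpass, x1, x1))   # True: doubling the input doubles the output
print(obeys_superposition(squarer, x1, x2))   # False: nonlinear
```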

Nonlinear properties occur, for example, if two channels are multiplied or if nonlinear characteristics occur within the system (see Chapter 5). Although the majority of real systems (in particular, biological systems) are nonlinear, we shall start with linear systems in Chapters 3 and 4. This is because, so far, only linear systems can be described in closed mathematical form. To what extent the theory of linear systems may be applied when investigating a real (i. e., usually nonlinear) system must be decided for each individual case. Some relevant examples are discussed in Chapters 6 and 7. Special properties of nonlinear systems are dealt with in Chapter 5.

Although biological systems are usually nonlinear in nature, linear systems will be considered first because they can be understood more easily.

Fig. 1.5 Examples of step responses of (a) a linear and (b) a nonlinear system. x (t) input function, y (t) output function

The first task of a systems theory investigation is to determine the input-output relations of a system, in other words, to investigate the overall properties of the box in Figure 1.3a. For this so-called "black box" analysis (or input-output analysis, or system identification), certain input values are fed into the system and the corresponding output values are measured. For the sake of easier mathematical treatment, only a limited number of simple input functions are used. These input functions are described in more detail in Chapter 2. Figure 1.3b shows that the total system may be broken down into smaller subsystems, which are interconnected in a specific way. An investigation of the input-output relation of a system is thus followed immediately by the question of the internal structure of the system. The second task of systems theory research in biology is thus to find out which subsystems form the total system and how these elements are interconnected. This corresponds to the step from Figure 1.3a to Figure 1.3b. In this way, we can try to reduce the system to a circuit consisting of a number of specifically connected basic elements. The most important of these basic elements will be explained in Chapter 4.

For the black box analysis, the system is considered to be an element that transforms a known input signal into an unknown output signal. Taking this input-output relation as a starting point, attempts are made to draw conclusions about how the system is constructed, i. e., what elements it consists of and how these are connected. (When investigating technical systems, the opposite approach is usually required. Here, the structure of the system is known, and the aim is to determine, without experiments, the output values that follow certain input values that have not yet been investigated. Such a situation can also occur in biology, however. For example, a number of local mechanisms of an ecosystem might have been investigated. The question then arises to what extent these mechanisms can explain the properties of the complete system.)

Once the total system has been described at this level, we have obtained a quantitative model of a real system, and the black box analysis is concluded. The next step of the investigation (i. e., relating the individual elements of the model to individual parts of the real system) is no longer a task of systems theory and depends substantially on knowledge of the system concerned. However, the model developed is not only a description of the properties of the overall system but is also suitable for guiding further investigation of the internal structure of the system.

The first task of the systems theory approach is to describe the input-output properties of the system, such that its response to any input function can be predicted. The next step is to provide conclusions with respect to the internal structure of the system.

As mentioned before, only a few functions are usually used as input functions for investigating a system. Five such input functions are discussed below. For linear systems, investigation with any one of these input functions yields, in principle, identical information about the system. This means that we might just as well confine ourselves to studying only one of these functions. For practical reasons, however, one or the other of the input functions may be preferred in individual cases. Furthermore, and more importantly, this fundamental identity no longer applies to nonlinear systems. Therefore, the investigation of nonlinear systems usually requires the application of more than one input function.

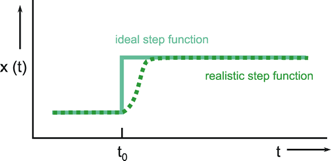

Figure 2.1a shows a step function. Here, the input value, starting from a certain value (usually zero), is suddenly increased by a constant amount at time t = t0 and then kept there. In theoretical considerations, the step amplitude usually has the value 1; this function is therefore also called the unit step, abbreviated to x(t) = 1(t). The corresponding output function (whose form depends on the type of system and which therefore represents a description of the properties of the system) is accordingly called the step response h(t) or the transient function.

Fig. 2.1 The step function. Ideal (continuous line) and realistic version (dashed line)

The problem with using a step function is that its vertical slope can only be approximated in a real system. In fact, it will only be possible to generate a "soft" trapezoidal input function, as indicated in Figure 2.1b. As a rule, the step is considered to be sufficiently steep if the rise of the step has finished before an effect of the input function can be observed at the output. The step function is, for example, particularly suitable for systems whose inputs are light stimuli, since light intensity can be varied very quickly. In other cases, a fast change in the input function may risk damaging the system. This could be the case, for example, where the input functions are to be given in the form of mechanical stimuli. In this situation, other input functions should be preferred.
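To make the step response h(t) concrete, here is a minimal sketch that simulates a hypothetical first-order low-pass filter, dy/dt = (x - y)/tau, with simple Euler steps and an ideal unit step as input. The filter, its time constant and the step size are assumptions chosen only for illustration; for this particular filter the step response is known to be h(t) = 1 - exp(-t/tau), which the simulation should approach.

```python
import math

def step_response(tau=0.5, dt=1e-4, t_end=3.0):
    """Simulate dy/dt = (x - y)/tau for a unit step x(t) = 1(t), starting at y = 0."""
    y, ts, hs = 0.0, [], []
    steps = int(round(t_end / dt))
    for k in range(1, steps + 1):
        y += dt / tau * (1.0 - y)   # input is 1 for all t > 0
        ts.append(k * dt)
        hs.append(y)
    return ts, hs

ts, hs = step_response()
# Compare with the analytic step response of this example filter
max_err = max(abs(h - (1 - math.exp(-t / 0.5))) for t, h in zip(ts, hs))
print(f"largest deviation from 1 - exp(-t/tau): {max_err:.1e}")
```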

The response to a step function is called step response or transient function h(t).

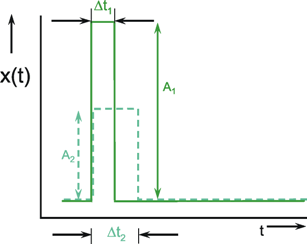

As an alternative to the step function, the so-called impulse function x(t) = δ(t) is also used as an input function. The impulse function consists of a brief pulse, i. e., at time t = t0 the input value rises to a high value A for a short time (impulse duration Δt) and then immediately drops back to its original value. This function is also called the Dirac, needle, δ, or pulse function. Mathematically, it can be defined by A Δt = 1 in the limit where Δt approaches zero, so that A grows without bound. Figure 2.2 gives two examples of possible forms of the impulse function.

Fig. 2.2 Two examples of approximations of the impulse function. A impulse amplitude, Δt impulse duration

It is technically impossible to produce an exact impulse function. Even a close approximation would, in general, have the drawback that the very high input values it requires would eventually exceed the linear range of the system. For this reason, usually only rough approximations to the exact impulse function are possible.
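The limiting definition A Δt = 1 can be illustrated numerically. The sketch below assumes, for illustration only, a first-order low-pass filter with time constant `TAU`; it feeds in rectangular pulses of unit area and shrinking duration and compares the response at one time point with the filter's weighting function g(t) = exp(-t/tau)/tau. As the pulse becomes shorter (and higher), the error shrinks.

```python
import math

TAU = 0.5  # time constant of the example low-pass filter (assumption)

def pulse_response(t, width, tau=TAU):
    """Exact response of a first-order low-pass filter to a rectangular pulse of
    amplitude A = 1/width and duration width (area A * width = 1), for t >= width."""
    amplitude = 1.0 / width
    at_pulse_end = amplitude * (1.0 - math.exp(-width / tau))  # charge-up during the pulse
    return at_pulse_end * math.exp(-(t - width) / tau)          # free decay afterwards

def weighting_function(t, tau=TAU):
    """Impulse response g(t) = exp(-t/tau)/tau of the same filter."""
    return math.exp(-t / tau) / tau

t = 1.0
errors = []
for width in (0.5, 0.1, 0.01, 0.001):
    errors.append(abs(pulse_response(t, width) - weighting_function(t)))
    print(f"width={width}: error={errors[-1]:.2e}")
```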

As far as practical application is concerned, the properties of the impulse function correspond to those of the step function. Apart from the exceptions just mentioned, the impulse function can thus be used in all cases where the step function could also be used to advantage. This, again, applies particularly to photoreactions, since flashes can be generated very easily.

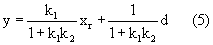

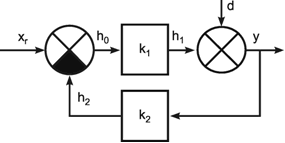

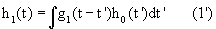

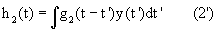

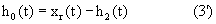

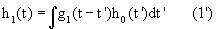

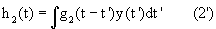

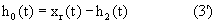

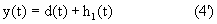

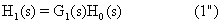

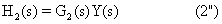

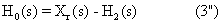

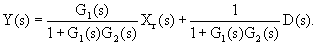

The response to the impulse function is called the impulse response or weighting function g(t). (In some publications, the symbols h(t) and g(t) are used the other way round.) The importance of the weighting function in systems theory is that it describes a linear system completely and simply. If the weighting function g(t) is known, the output function y(t) of the investigated system can be calculated for any input function x(t). This calculation is based on the following formula (it is not proved here; only arguments that are easy to follow are given):

$$y(t) = \int_0^t g(t-t')\,x(t')\,dt'$$

This integral is termed a convolution integral, with the kernel (or the weighting function) g(t).

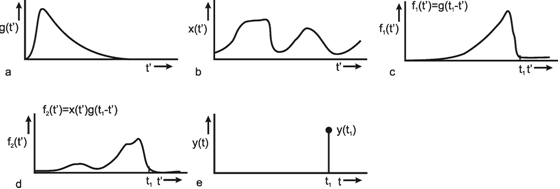

Figure 2.3 illustrates the meaning of this convolution integral. Figure 2.3a shows the assumed weighting function g(t') of an arbitrary system. The aim is to use the convolution integral to calculate the response y(t) of the system to an arbitrary input function x(t') (Fig. 2.3b). (t and t' refer to the same time axis; different symbols are used to distinguish the time t from the integration variable t'.)

Fig. 2.3 The calculation of the convolution integral. (a) The weighting function g(t) of the system. (b) An arbitrary input function x(t'). (c) The weighting function g(t') is reflected to g(-t') and shifted by t1, giving f1 = g(t1 - t'). (d) The product f2(t') of the input function x(t') and g(t1 - t'). (e) The integral y(t) of this function f2(t') at time t1

To begin with, only the value of the output function y(t1) at a specific point in time t1 will be calculated. Because the weighting function quickly approaches zero, those parts of the input function that occurred some time earlier exert only a minor influence, whereas those parts that occurred immediately before t1 exert a stronger influence on the value of the output function. This is taken into account as follows. The weighting function g(t') is reflected at the vertical axis, giving g(-t'), and then shifted to the right by t1 to obtain g(t1 - t'), which is shown in Figure 2.3c. This function is multiplied point by point by the input function x(t'). The result, f2(t') = x(t') g(t1 - t'), is shown in Figure 2.3d. In this way, the effect of each part of the input function x(t') on the value of the output function at time t1 has been calculated. The total effect is obtained by summing these individual effects: the function f2(t') is integrated from 0 to t1, i. e., the area below the function in Figure 2.3d is calculated. This gives the value y(t1) of the output function at time t1 (Fig. 2.3e).

This process must be repeated for every t in order to calculate the output function y(t) point by point. If the convolution integral can be solved analytically, however, the output function y(t) can be given in closed form.
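The reflect, shift, multiply and integrate procedure translates directly into a discrete loop. The following is a sketch under stated assumptions: signals are sampled with step `dt`, the integral is approximated by the trapezoidal rule, and the weighting function is a hypothetical exponential g(t) = exp(-t/tau)/tau. With a unit step as input, the result should reproduce the corresponding step response.

```python
import math

def convolve_at(g, x, k1, dt):
    """Value y(t1) with t1 = k1*dt: reflect g and shift it by t1 to get g(t1 - t'),
    multiply point by point with x(t'), and integrate over 0 <= t' <= t1
    (trapezoidal rule)."""
    f2 = [x[k] * g[k1 - k] for k in range(k1 + 1)]   # f2(t') = x(t') g(t1 - t')
    return dt * (sum(f2) - 0.5 * (f2[0] + f2[-1]))

def convolve(g, x, dt):
    """Repeat the procedure for every t to obtain y(t) point by point."""
    return [convolve_at(g, x, k1, dt) for k1 in range(len(x))]

dt, tau = 0.01, 0.5
t = [k * dt for k in range(300)]
g = [math.exp(-ti / tau) / tau for ti in t]   # example weighting function (assumption)
x = [1.0 for _ in t]                           # unit step as input function
y = convolve(g, x, dt)
# For a step input, y(t) should approximate the step response 1 - exp(-t/tau)
print(max(abs(yi - (1 - math.exp(-ti / tau))) for yi, ti in zip(y, t)))
```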

The relation between the weighting function (impulse response) g(t) and the step response h(t) is as follows:

$$g(t) = \frac{dh(t)}{dt}, \qquad h(t) = \int_0^t g(t')\,dt'$$

Thus, if h(t) is known, we can calculate g(t), and vice versa. As outlined above, in the case of a linear system we can obtain identical information by using either the impulse function or the step function.
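Both directions of this relation can be checked with a finite difference and a cumulative integral. This is a minimal sketch assuming the example pair h(t) = 1 - exp(-t/tau) and g(t) = exp(-t/tau)/tau of a first-order low-pass filter; the step size and time constant are arbitrary choices.

```python
import math

tau, dt, n = 0.5, 1e-3, 2000
t = [k * dt for k in range(n)]
h = [1 - math.exp(-ti / tau) for ti in t]      # step response of the example filter
g = [math.exp(-ti / tau) / tau for ti in t]    # its weighting function

# g(t) = dh/dt, approximated by central differences at interior points
g_from_h = [(h[k + 1] - h[k - 1]) / (2 * dt) for k in range(1, n - 1)]
err_g = max(abs(a - b) for a, b in zip(g_from_h, g[1:n - 1]))

# h(t) = integral of g from 0 to t, approximated by the cumulative trapezoidal rule
h_from_g, acc = [0.0], 0.0
for k in range(1, n):
    acc += 0.5 * (g[k - 1] + g[k]) * dt
    h_from_g.append(acc)
err_h = max(abs(a - b) for a, b in zip(h_from_g, h))
print(f"max error in g: {err_g:.1e}, max error in h: {err_h:.1e}")
```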

The response to an impulse function is called impulse response or weighting function g(t). Convolution of g(t) with any input function allows the corresponding output function to be determined.

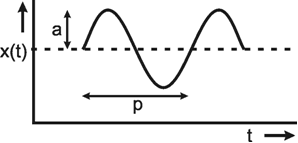

In addition to the step and impulse functions, sine functions are quite frequently used as input functions. A sine function takes the form x(t) = a sin 2πνt (Fig. 2.4), where a is the amplitude measured from the mean value of the function and ν (measured in hertz = s⁻¹) is the frequency of the oscillation. The expression 2πν is usually combined into the so-called angular frequency ω: x(t) = a sin ωt. The duration of one period is 1/ν.

Fig. 2.4 The sine function. Amplitude a, period p = 1/ν. The mean value is shown by a dashed line

Compared to step and impulse functions, the sine function has the following disadvantages. To obtain the same information about the system with sine functions as with the step or impulse response, we would have to carry out a number of experiments instead of (in principle) a single one; i. e., we would have to study, one after the other, the responses to sine functions of constant amplitude at all frequencies, at least in theory, from arbitrarily low to infinitely high. In practice, the investigation is restricted to a limited frequency range that is of particular importance to the system. Nevertheless, this approach requires considerably more measurements than the step or impulse function. Moreover, after a sinusoidal oscillation starts at the input, the output function generally goes through a build-up phase, and it can be analyzed only after this build-up has subsided. The advantage of sine functions is that the slope of the input function, at least within the range of frequencies of interest, is lower than that of a step function, which allows sensitive systems to be treated with more care.

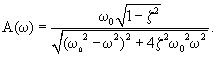

The response to a sine function at the input is termed the frequency response. In a linear system, frequency responses are sine functions as well. They have the same frequency as the input function but generally differ from it in amplitude and phase. The frequency responses thus take the form y(t) = A sin(ωt + ϕ). The two values to be measured are therefore the phase shift ϕ between input and output functions and the change in amplitude. The latter is usually given as the ratio between the output amplitude A and the input amplitude a, i. e., the ratio A/a. Frequently, the input amplitude a is standardized to 1, so that the output amplitude A directly indicates the ratio A/a. This will be assumed in the following to simplify the notation.
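A and ϕ can be read off a measured output by projecting it onto sin ωt and cos ωt, since A sin(ωt + ϕ) = A cos ϕ sin ωt + A sin ϕ cos ωt. The sketch below assumes uniformly sampled data covering an integer number of periods, an input amplitude a = 1, and a synthetic output with known A and ϕ standing in for real measurements; the function name `amplitude_and_phase` is hypothetical.

```python
import math

def amplitude_and_phase(y, t, omega):
    """Estimate A and phi of y(t) = A sin(omega t + phi) from samples spanning an
    integer number of periods, by projecting onto sin(omega t) and cos(omega t)."""
    n = len(y)
    s = 2.0 / n * sum(yi * math.sin(omega * ti) for yi, ti in zip(y, t))  # A cos(phi)
    c = 2.0 / n * sum(yi * math.cos(omega * ti) for yi, ti in zip(y, t))  # A sin(phi)
    return math.hypot(s, c), math.atan2(c, s)

omega = 2 * math.pi * 2.0                        # 2 Hz test frequency (arbitrary)
period = 2 * math.pi / omega
n = 1000
t = [k * 5 * period / n for k in range(n)]       # exactly 5 periods
y = [0.4 * math.sin(omega * ti - 0.6) for ti in t]   # synthetic "measured" output
A, phi = amplitude_and_phase(y, t, omega)
print(f"A = {A:.4f}, phi = {phi:.4f} rad")
```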

Both values, output amplitude A and phase shift ϕ, depend on the frequency. Therefore, both are measured with a constant input amplitude (a = 1) at different angular frequencies ω. Plotting the output amplitudes against the angular frequency ω gives the amplitude frequency plot A(ω) of the system (Fig. 2.5a). Usually, both amplitude and frequency are plotted on logarithmic scales. As illustrated in Figure 2.5a by a second ordinate, the logarithms of the amplitude are sometimes given. The amplitude frequency plot in Figure 2.5a shows that at medium frequencies the output amplitude is almost as large as the input amplitude, whereas it becomes considerably smaller at lower and higher frequencies. The ordinate values are very often referred to as amplification factors, since they indicate the factor by which the respective amplitude is enlarged or reduced relative to the input amplitude. The amplification factor thus depends on the frequency. (It is called the dynamic amplification factor, as explained in detail in Chapter 5.)

An amplification factor is usually defined as a dimensionless value and can therefore only be determined if input and output values are measured in the same units. Since this is hardly ever the case with biological systems, we sometimes have to deviate from this definition. One possibility is to give the amplification factor in a relative unit such as the decibel (dB). For this purpose, one chooses an arbitrary amplitude value A0 as a reference (usually the maximum amplitude) and calculates the value 20 lg(A1/A0) for every amplitude A1. One logarithmic unit (a factor of 10 in amplitude) in Fig. 2.5a thus corresponds to 20 dB. In Figure 2.5a (right ordinate), the amplitude A0 = 1 takes the value 0 dB.
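A few worked values of 20 lg(A1/A0), taking A0 = 1 as the reference:

$$20\lg\frac{10}{1} = +20\ \text{dB},\qquad 20\lg\frac{1}{1} = 0\ \text{dB},\qquad 20\lg\frac{0.5}{1} \approx -6.02\ \text{dB},\qquad 20\lg\frac{0.1}{1} = -20\ \text{dB}$$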

Fig. 2.5 The Bode plot consisting of the amplitude frequency plot (a) and the phase frequency plot (b). A amplitude, ϕ phase, ω = 2πν angular frequency. In (a) the ordinate is given as lg A (far left), as A (left), and as dB (decibel, right)

To obtain complete information about the system under investigation, we also have to determine the phase shift between the sine function at the input and that at the output. Plotting the phase angle on a linear scale against the logarithm of the angular frequency gives the phase frequency plot ϕ(ω) (Fig. 2.5b). A negative phase shift means that the oscillation at the output lags behind that at the input. (Owing to the periodicity, however, a phase lag of 270° is identical to a phase advance of 90°.) The phase frequency plot in Figure 2.5b thus shows that at low frequencies the output leads the input by 90°, at medium frequencies it is more or less in phase, and at high frequencies it lags by about 90°.

Both descriptions, the amplitude frequency plot and the phase frequency plot, are subsumed under the term Bode plot. The significance of the Bode plot is dealt with in detail in Chapters 3 and 4. We shall now briefly touch on the relationship between the Bode plot and the weighting function of a system. If the weighting function g(t) of a system is known, the sine response can be calculated with the convolution integral, using the method explained in Chapter 2.2:

$$y(t) = \int_0^t g(t-t')\,\sin(\omega t')\,dt'$$

Once the build-up has subsided, the solution is:

$$y(t) = A(\omega)\,\sin\big(\omega t + \phi(\omega)\big)$$

where A(ω) is the amplitude frequency plot and ϕ(ω) the phase frequency plot of the system (for proof, see e. g., Varju 1977). Thus, the sine function as an input function also gives us the same information about the system under investigation as the step function or the impulse function.
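This equivalence can be checked numerically. The sketch below is an illustration under assumptions not taken from the text: it uses the weighting function g(t) = exp(-t/tau)/tau of a first-order low-pass filter, evaluates the convolution integral with the trapezoidal rule once the build-up has subsided, and extracts A and ϕ by projection onto sin ωt and cos ωt. For this filter, the known results A(ω) = 1/sqrt(1 + (ωτ)²) and ϕ(ω) = -arctan(ωτ) serve as the reference.

```python
import math

def sine_response_params(omega, tau=0.1, dt=2e-3):
    """Convolve g(t) = exp(-t/tau)/tau (example system) with x(t) = sin(omega t)
    and return amplitude A and phase phi of the output after the build-up.
    Assumes the period 2*pi/omega is an integer multiple of dt."""
    n_g = int(15 * tau / dt)                      # g is negligible beyond 15 tau
    g = [math.exp(-j * dt / tau) / tau for j in range(n_g + 1)]
    n_per = int(round(2 * math.pi / omega / dt))  # samples per period
    s = c = 0.0
    for k in range(n_g, n_g + n_per):             # one full period, t >= 15 tau
        # y(t) = integral of g(t - t') sin(omega t') dt' (trapezoidal rule)
        f = [g[j] * math.sin(omega * (k - j) * dt) for j in range(n_g + 1)]
        y = dt * (sum(f) - 0.5 * (f[0] + f[-1]))
        s += y * math.sin(omega * k * dt)         # accumulates A cos(phi)
        c += y * math.cos(omega * k * dt)         # accumulates A sin(phi)
    s, c = 2 * s / n_per, 2 * c / n_per
    return math.hypot(s, c), math.atan2(c, s)

tau = 0.1
results = []
for freq in (1.0, 2.0, 5.0):                      # frequencies in Hz
    omega = 2 * math.pi * freq
    A, phi = sine_response_params(omega, tau)
    A_exact = 1 / math.sqrt(1 + (omega * tau) ** 2)
    phi_exact = -math.atan(omega * tau)
    results.append((freq, A, A_exact, phi, phi_exact))
    print(f"{freq} Hz: A={A:.4f} (exact {A_exact:.4f}), phi={phi:.4f} (exact {phi_exact:.4f})")
```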

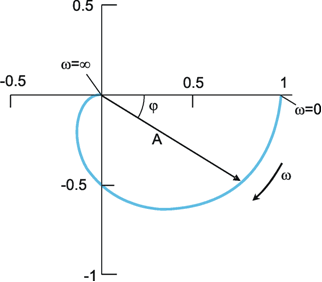

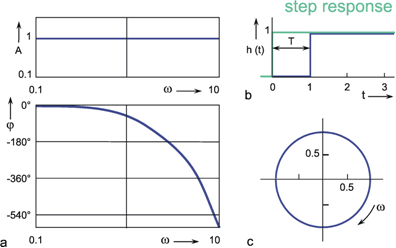

As an alternative to the Bode plot, the amplitude and phase frequency plots can be combined in the form of the Nyquist or polar plot (Fig. 2.6). Here, the amplitude A is given by the length of the pointer and the phase angle by the angle between the x-axis and the pointer. The angular frequency ω is the parameter, i.e., ω runs through all values from zero to infinity from the beginning to the end of the graph. In the example given in Figure 2.6, the amplitude decreases only slightly at lower frequencies but very rapidly at higher frequencies. At the same time, the output oscillation lags only slightly behind the input oscillation at lower frequencies. At high frequencies, however, this lag increases toward a limit of -180°.
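The points of a polar plot are simply the complex numbers A e^{iϕ} for increasing ω. The system in Figure 2.6 is not specified; as an assumption for illustration, the sketch below uses two identical first-order low-pass filters in series, G(iω) = 1/(1 + iωτ)², whose curve behaves as described: the amplitude falls slowly at first and then rapidly, and the phase lag approaches -180°.

```python
import cmath
import math

def frequency_response(omega, tau=1.0):
    """G(i omega) of two identical first-order low-pass filters in series
    (illustrative system): 1 / (1 + i omega tau)**2."""
    return 1.0 / (1.0 + 1j * omega * tau) ** 2

rows = []
for omega in (0.0, 0.1, 0.5, 1.0, 2.0, 10.0, 100.0):
    G = frequency_response(omega)
    A = abs(G)                            # pointer length
    phi = math.degrees(cmath.phase(G))    # pointer angle relative to the x-axis
    rows.append((omega, A, phi))
    print(f"omega={omega:6.1f}  A={A:.4f}  phi={phi:8.2f} deg  "
          f"point=({G.real:.4f}, {G.imag:.4f})")
```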

Fig. 2.6 The polar plot. A amplitude, ϕ phase, ω = 2πν angular frequency

The response to sine functions is called frequency response and is graphically illustrated in the form of a Bode plot or a polar plot.

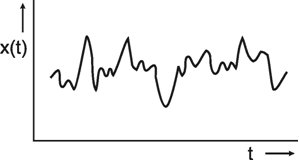

An input function used less frequently is the statistical or noise function. It is characterized by the fact that successive function values follow one another randomly, i. e., they cannot be inferred from the previous course of the function. Figure 2.7 shows a schematic example. Compared with sine functions, a noise function has the advantage that, in principle, again just one experiment is required to characterize the system completely. On the other hand, this requires some mathematical work and can hardly be managed without a computer. (The main step is to calculate the cross-correlation between input and output functions. This operation is similar to a convolution and, for a white-noise input, yields a function proportional to the weighting function of the system investigated.) Another advantage of the noise function over the sine function is that the system under investigation cannot predict the form of the input function. This is of particular importance in the study of biological systems, since here, even in simple cases, we cannot exclude the possibility that some kind of learning occurs during the experiment. Learning would mean, however, that the system has changed, since different output functions would be obtained for the same input function before and after learning. Using a noise function may prevent such learning. Changes in the properties of the system caused by fatigue (another possible problem when investigating the frequency response) generally do not occur either, owing to the short duration of the experiment. The statistical function is particularly suitable for the investigation of nonlinear systems, because appropriate evaluation allows nonlinear properties of the system to be assessed (Marmarelis and Marmarelis 1978).
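The cross-correlation method can be sketched in discrete time. All assumptions here are illustrative: a zero-mean Gaussian white-noise input, a hypothetical linear system with a known exponentially decaying kernel, and the estimate R_xy[j] / var(x), which for white noise approximates the discrete weighting function. The estimate is noisy and improves with longer recordings.

```python
import random

def simulate(x, kernel):
    """Output of a discrete linear system: y[k] = sum_j kernel[j] * x[k - j]."""
    return [sum(kernel[j] * x[k - j] for j in range(len(kernel)) if k - j >= 0)
            for k in range(len(x))]

def estimate_kernel(x, y, n_lags):
    """Cross-correlation R_xy[j] = mean of x[k] * y[k + j], divided by the input
    variance; for a white-noise input this estimates the weighting function."""
    n = len(x)
    var = sum(xi * xi for xi in x) / n            # input has zero mean by construction
    return [sum(x[k] * y[k + j] for k in range(n - j)) / ((n - j) * var)
            for j in range(n_lags)]

random.seed(1)
true_kernel = [0.3 * 0.8 ** j for j in range(30)]   # hypothetical system to identify
x = [random.gauss(0.0, 1.0) for _ in range(20000)]  # white-noise input
y = simulate(x, true_kernel)
est = estimate_kernel(x, y, 30)
max_error = max(abs(a - b) for a, b in zip(est, true_kernel))
print(f"largest deviation from the true kernel: {max_error:.3f}")
```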

Fig. 2.7 An example of the statistical function

Fig. 2.8 The ramp function x(t) = kt (for t > t0)

Application of the statistical function is technically more difficult, both in experimental and in theoretical terms, but permits treatment of some properties of nonlinear systems.

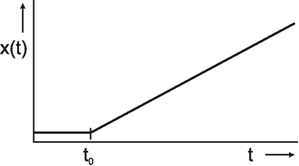

The last of the input functions described here is the ramp function x(t) = kt. The ramp function has a constant value, e.g., zero, for t ≤ t0 and rises with a constant slope k for t > t0 (Fig. 2.8). With sine functions, we must wait for the transient build-up to end before the actual measurement can start. Here the very opposite is true: as will be explained later (Chapter 4), interesting information can be gathered from the first part of the ramp response. This also holds for the step and impulse functions. A disadvantage of the ramp function, however, is the following. Since the range of possible values of the input function is normally limited, the ramp cannot rise indefinitely. The ramp may therefore have to be stopped before the output function (the ramp response) has provided enough information. In this case, a ramp-and-hold function is used: the ramp stops rising once a certain input amplitude has been reached, and this value is then maintained.
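A ramp-and-hold input can be generated in a few lines. This hypothetical Python helper assumes that the ramp starts from zero at t0, i.e., x(t) = k(t - t0), which equals kt when t0 = 0. The parameter `x_max` stands for the upper limit of the available input range.

```python
import numpy as np

def ramp_and_hold(t, k, t0, x_max):
    """Ramp-and-hold input: 0 for t <= t0, slope k after t0, then held at x_max.

    Assumes k > 0 and x_max > 0; the hold phase starts at t = t0 + x_max / k.
    """
    return np.clip(k * (t - t0), 0.0, x_max)

t = np.linspace(0.0, 10.0, 1001)   # time axis in s, step 0.01 s
x = ramp_and_hold(t, k=0.5, t0=2.0, x_max=3.0)
```

With these values the ramp rises from t = 2 s and is held at 3 from t = 8 s onward.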

The advantage of the ramp function lies in the fact that it is a means of studying, in particular, those systems that cannot withstand the strong increase of input values necessary for the step function and the impulse function. A further advantage is that it can be realized relatively easily from the technical point of view.

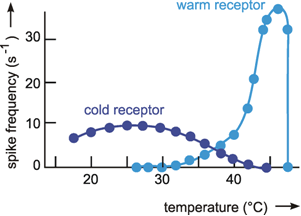

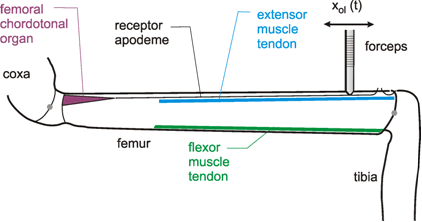

For the investigation of nonlinear systems, ramp and step functions (in contrast to sine and impulse functions) have the advantage that the input value moves in one direction only. If the output value of a system is controlled by two antagonists, we may be able to study the two antagonistic branches separately. One experiment uses an increasing ramp (or step) function, and a further experiment uses a decreasing one. In biology, systems composed of antagonistic subsystems occur quite frequently. Examples are the control of the position of a joint by two antagonistic muscles, or the control of the blood sugar level by the hormones adrenaline and insulin. On the input side, too, there may be antagonistic subsystems. Examples are the warm receptors that measure skin temperature, whose action potential frequency increases with a rise in temperature, and the cold receptors, whose action potential frequency increases with a drop in temperature.

The ramp function is easy to produce but may be difficult to use experimentally because of the limited range of input values.

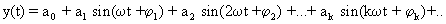

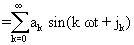

We cannot deal with the characteristics of simple elements in the next chapter until we have explained the significance of the Bode plot in more detail. For this reason, we introduce the concept of Fourier analysis here. Apart from a few exceptions that are of interest only to mathematicians, any periodic function y = f(t) with fundamental angular frequency ω can be described as the sum of sine (or cosine) functions of different amplitude, frequency, and phase:

$$y(t) = a_0 + \sum_{k=1}^{\infty} a_k \sin(k\omega t + \varphi_k)$$

This is called a frequency representation of the function y(t), or the decomposition of the function into individual frequencies. The amplitude factors of the different components (ak) are called Fourier coefficients.

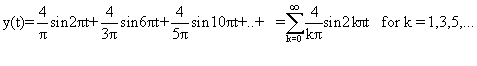

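The concept of Fourier decomposition can be demonstrated with the example of a square function (Fig. 3.1, ys). The Fourier analysis of this square function yields:

$$y_s(t) = \frac{4}{\pi}\left(\sin 2\pi t + \frac{1}{3}\sin 6\pi t + \frac{1}{5}\sin 10\pi t + \dots\right)$$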

Frequency components that have an even numbered index thus disappear in this particular case. The first three components and their sum (y') have also been included in Figure 3.1.
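The partial sums in Figure 3.1 can be reproduced numerically. This NumPy sketch assumes that the square function has period 1 and amplitude ±1, so its Fourier series contains only the odd harmonics $(4/(k\pi))\sin 2\pi k t$. The function names are illustrative. The mean squared error over one period shrinks as more harmonics are included.

```python
import numpy as np

def square_wave(t):
    """Square wave ys with period 1: +1 in the first half-period, -1 in the second."""
    return np.where((t % 1.0) < 0.5, 1.0, -1.0)

def fourier_partial_sum(t, n_max):
    """Sum of the odd harmonics (4 / (k*pi)) * sin(2*pi*k*t) for k = 1, 3, ..., n_max."""
    y = np.zeros_like(t)
    for k in range(1, n_max + 1, 2):
        y += 4.0 / (k * np.pi) * np.sin(2.0 * np.pi * k * t)
    return y

t = np.linspace(0.0, 1.0, 2000, endpoint=False)
ys = square_wave(t)
for n_max in (1, 5, 51):
    mse = np.mean((ys - fourier_partial_sum(t, n_max)) ** 2)
    print(f"harmonics up to k = {n_max:2d}: mean squared error = {mse:.4f}")
```

With `n_max = 5` the function computes y' = y1 + y3 + y5 of Figure 3.1.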

Fig. 3.1 Fourier decomposition of a square function ys. The fundamental y1 = 4/π sin 2πt and the two harmonics y3 = 4/(3π) sin 6πt and y5 = 4/(5π) sin 10πt are shown together with the sum y' = y1 + y3 + y5 (dotted line).

As we can see, y' already gives a rough approximation of the square function. As the figure also shows, a good approximation of the edges in particular requires components of even higher frequency. The component with the lowest frequency (y1 in Fig. 3.1) is called the fundamental, and all higher-frequency components are called harmonics. In these terms, we might say that producing sharp edges requires high-frequency harmonics.

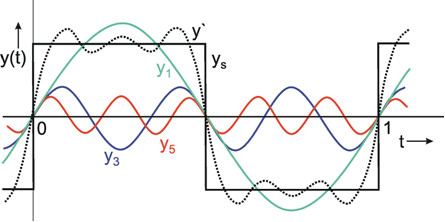

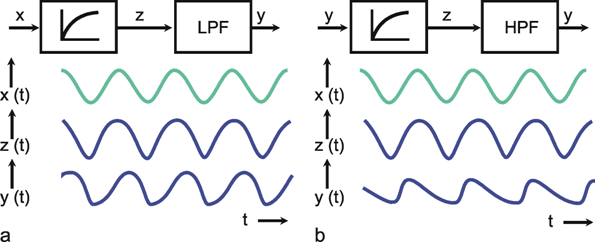

This concept permits an easy qualitative interpretation of a given amplitude frequency plot. Consider a system that transmits low frequencies well and suppresses high-frequency components, as shown by the amplitude frequency plot in Figure 3.2a. Qualitatively, such a system rounds off the sharp edges of a square function, because, as Figure 3.1 shows, high-frequency components are required for a precise transmission of the edges. Now suppose the amplitude frequency plot of the system looks like that in Figure 3.2b, i.e., low frequencies are suppressed whereas high-frequency components are transmitted well. We can then obtain a qualitative impression of the response to a square wave as follows. We subtract the fundamental y1 from the square function ys, which is the output we would obtain if all frequencies were transmitted equally well. The result shows that, with the low frequencies suppressed, transmission is good in the region of the edges and poor in the region of the constant function values. As a result, the edges are particularly emphasized.
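The qualitative argument of Figure 3.2 can be tried out with idealized filters that have no phase shift, as assumed in the caption of Fig. 3.2. In this sketch the "low-pass" keeps only the fundamental of a sampled square wave and the "high-pass" removes it. Both work by zeroing discrete Fourier coefficients with NumPy's FFT routines. These filters are an illustrative assumption, not the filters of Chapter 4. The high-pass output is large right after each edge and small on the plateaus, so the edges are emphasized.

```python
import numpy as np

n = 1000
t = np.arange(n) / n                      # one period of the square wave (period 1)
ys = np.where(t < 0.5, 1.0, -1.0)

spectrum = np.fft.rfft(ys)                # bin k holds the k-th harmonic
low = spectrum.copy()
low[2:] = 0.0                             # idealized low-pass: keep DC and fundamental
high = spectrum.copy()
high[:2] = 0.0                            # idealized high-pass: remove DC and fundamental

y_low = np.fft.irfft(low, n)              # approximately the fundamental y1
y_high = np.fft.irfft(high, n)            # ys without its fundamental

print("peak of low-pass output:", y_low.max())
print("high-pass output just after the edge:", y_high[1])
print("high-pass output at mid-plateau:", y_high[250])
```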

In most systems (see, however, Chapter 10), different frequency components also undergo different phase shifts, which we have not yet discussed. For this reason, these considerations are only qualitative. The quantitative transfer properties of some systems will be dealt with in Chapter 4. It can already be seen, however, that the amplitude frequency plot allows us to make important qualitative statements about the properties of the system.

Fig. 3.2 (a) Assume a system that has a negligible phase shift and an amplitude frequency plot that transmits low frequencies such as that of the fundamental y1 in Fig. 3.1 but suppresses higher frequencies such as y3 or y5. A square wave as shown in Fig. 3.1, given at the input of this system, would result in an output corresponding to the sine wave y1. (b) If the system is assumed to have a mirror-image amplitude frequency plot, the response to the square wave input would look like the square wave without its fundamental. This is shown in (c)

We can now see more clearly why an investigation of a linear system with step or impulse functions requires, in principle, only one experiment to describe the system. An investigation with sine functions, in contrast, theoretically requires an infinite number of experiments. The reason is that the edges of step and impulse functions already contain all frequency components. With sine functions, these components have to be studied separately, one after another.
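The statement that an impulse contains all frequency components, whereas a sine function contains only one, can be checked with a discrete Fourier transform. This minimal NumPy sketch assumes a signal sampled at 256 points and uses a discrete unit impulse as a stand-in for the ideal impulse function.

```python
import numpy as np

n = 256
impulse = np.zeros(n)
impulse[0] = 1.0                                   # discrete unit impulse
magnitude = np.abs(np.fft.rfft(impulse))           # amplitude at every discrete frequency
print(magnitude.min(), magnitude.max())            # equal amplitude at all frequencies

sine = np.sin(2 * np.pi * 8 * np.arange(n) / n)    # sine with 8 periods in the window
sine_magnitude = np.abs(np.fft.rfft(sine))
print(np.flatnonzero(sine_magnitude > 1e-6))       # only frequency bin 8 is present
```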

The Fourier analysis shows that sharp edges of a function are represented by high frequency components and that low frequencies are necessary to represent the flat, horizontal parts of the function.

So far we have only considered how to obtain information about the transfer properties and thus to describe the system to be investigated; now we have a further task: how to determine the internal structure of the system from the form of the Bode plot or the weighting function. This is done in the following way: we try to break down the total system into elements, called filters. Below we describe the properties of the most important filters. Subsequently, using an example based on the transfer properties of a real system, we will show how to obtain the structure of the system by suitably composing elementary filters.

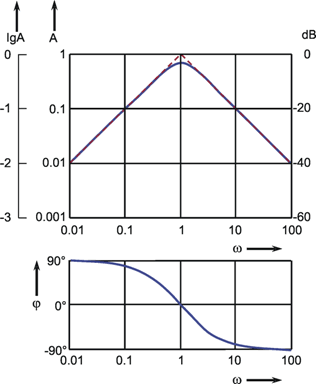

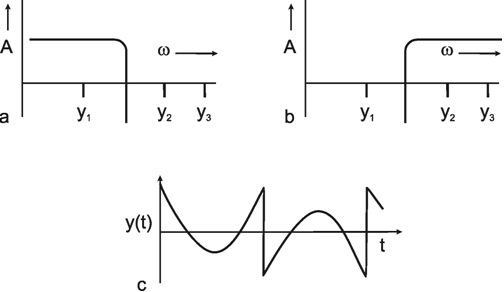

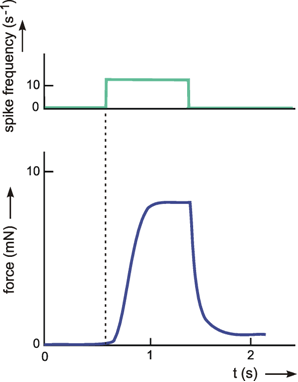

A low-pass filter allows only low frequencies to pass. As the amplitude frequency plot of the Bode plot shows (Fig. 4.1a), low-frequency sine waves are transmitted with the amplification factor 1, whereas high-frequency sine waves are suppressed. The amplitude frequency plot can be approximated by two asymptotes. One of them runs horizontally and, in Figure 4.1a, corresponds to the abscissa. The second asymptote has a negative slope and is shown as a dashed line in Figure 4.1a. If both coordinate scales are logarithmic, this line has the slope -1. The intersection of the two asymptotes marks the corner frequency ω0, also called the characteristic frequency. At this frequency, the output amplitude drops to about 71% (1/√2) of the maximum value reached at low frequencies. In the description chosen here, ω0 is set to 1. By multiplying the abscissa values by a constant factor, this Bode plot can be converted into that of a low-pass filter with any other corner frequency.

As the phase frequency plot shows, there is practically no phase shift at very low frequencies; the phase frequency plot approaches zero asymptotically. At very high frequencies, the output oscillation lags behind the input oscillation by up to 90°. At the corner frequency ω0 (= 2πν0), the phase lag is exactly 45°.
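The values quoted for the corner frequency can be checked from the frequency response of a 1st order low-pass filter, $H(j\omega) = 1/(1 + j\omega\tau)$. This is the standard result for the RC circuit of Figure 4.1f. The sketch assumes $\tau = 1$ s, and a negative phase denotes a lag.

```python
import numpy as np

def lowpass_bode(omega, tau=1.0):
    """Amplitude and phase (degrees) of a 1st-order low-pass, H(j*omega) = 1 / (1 + j*omega*tau)."""
    h = 1.0 / (1.0 + 1j * omega * tau)
    return np.abs(h), np.degrees(np.angle(h))

amp_corner, phase_corner = lowpass_bode(1.0)      # at the corner frequency omega0 = 1/tau
amp_100, _ = lowpass_bode(100.0)
amp_1000, phase_1000 = lowpass_bode(1000.0)
# Slope of the high-frequency part in log-log coordinates
slope = np.log10(amp_1000 / amp_100) / np.log10(1000.0 / 100.0)

print(f"corner: A = {amp_corner:.3f}, phase = {phase_corner:.1f} deg")
print(f"high-frequency asymptote slope: {slope:.3f}")
print(f"phase at omega = 1000: {phase_1000:.2f} deg")
```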

Besides the sine function, the other important input function is the step function. The step response (transient response) h(t) of this low-pass filter is shown in Figure 4.1b. It starts at t = 0 with a slope of 1/τ and asymptotically approaches a horizontal line. The amplitude value of this line indicates the static amplification factor of the system, which has been assumed to be 1 in this example. This static amplification factor corresponds to the amplification factor for low frequencies, which can be read off from the amplitude frequency plot. The time constant τ of this low-pass filter can be read from the step response as the time, measured from t = 0, at which the response has reached 63% of its final value. The transition phase of the function is also referred to as the dynamic part, and the plateau as the static part of the response (see also Chapter 5). For a decreasing step, we obtain an exponentially decaying function.

Here, the time constant indicates the time within which the exponential function $A e^{-t/\tau}$ has dropped from the amplitude value A to A/e, i.e., to about 37% of A.

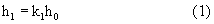

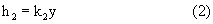

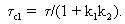

Corner frequency ω0 and time constant τ of this low-pass filter (and of the corresponding high-pass filter, which will be described in Chapter 4.2) are related as follows:

$$\omega_0 = \frac{1}{\tau}$$

$$\nu_0 = \frac{\omega_0}{2\pi} = \frac{1}{2\pi\tau}$$

Thus, the time constant of this low-pass filter can also be obtained from the Bode plot: τ = 1 s. (For the Bode plot, and for all of the following figures in Chapter 4, the response functions of filters with other corner frequencies and time constants can be obtained by rescaling the abscissa by an appropriate factor.) The formula for the impulse response shown in Figure 4.1c is valid only for t > 0. For simplicity, this restriction will not be repeated in the corresponding cases that follow.

Fig. 4.1 Low-pass filter. (a) Bode plot consisting of the amplitude frequency plot (above) and the phase frequency plot (below). Asymptotes are shown by red dashed lines. A amplitude, ϕ phase, ω = 2πν angular frequency, (b) step response, (c) impulse response, (d) ramp response. In (b)-(d) the input functions are shown by green lines. The formulas given hold for t > 0. k describes the slope of the ramp function x(t) = kt. (e) polar plot. (f) Electronic wiring diagram, R resistor, C capacitor, τ time constant, ui input voltage, u0 output voltage

The easiest way to check whether a given function is an exponential is to plot it on a semilogarithmic scale (logarithmic ordinate, linear abscissa). A decaying exponential then appears as a straight line whose slope is -1/τ when natural logarithms are used. Instead of the time constant τ, the half-time or half-life is often used as a measure of the duration of the dynamic part. It indicates the time at which the function has reached half of its static value. For a 1st order filter (this also holds for the corresponding high-pass filter, see Chapter 4.2), half-time (HT) and time constant are related as follows:

$$HT = \tau \ln 2 \approx 0.69\,\tau$$
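The semilogarithmic check can also be done numerically by fitting a straight line to the logarithm of the data. This minimal sketch uses synthetic, noise-free data with a hypothetical time constant of 0.5 s. For a rising step response $1 - e^{-t/\tau}$, the same fit would be applied to the distance from the final value. With natural logarithms the fitted slope is $-1/\tau$ (with base-10 logarithms it would be $-1/(\tau \ln 10)$), and the half-time follows as $\tau \ln 2$.

```python
import numpy as np

def estimate_tau_semilog(t, y):
    """Fit a straight line to ln(y) versus t; for y = A*exp(-t/tau) the slope is -1/tau."""
    slope, _intercept = np.polyfit(t, np.log(y), 1)
    return -1.0 / slope

tau_true = 0.5
t = np.linspace(0.0, 3.0, 31)
y = 2.0 * np.exp(-t / tau_true)          # e.g. the response to a decreasing step
tau_hat = estimate_tau_semilog(t, y)
half_time = tau_hat * np.log(2.0)        # HT = tau * ln 2
print(f"tau = {tau_hat:.3f} s, half-time = {half_time:.3f} s")
```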

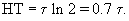

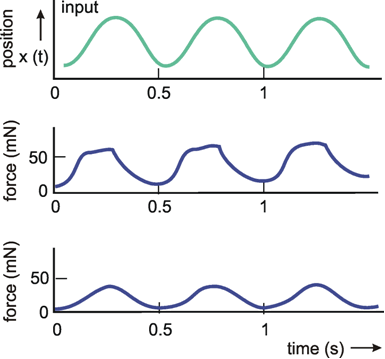

Judging from its step response, a low-pass filter may be understood intuitively as an element that tries to follow the input value proportionally but, owing to some internal sluggishness, succeeds only after a delay. Figure 4.2 shows an example of low-pass-like behavior. Here, the force produced by a muscle is shown when the frequency of action potentials in the relevant motoneuron has been changed.

The impulse response, i.e., the weighting function g(t), of this low-pass filter is shown in Figure 4.1c. If the input value has been zero for a long time, the output value is, at first, also zero. When the impulse function is applied at time t = 0, however, the output jumps to the value 1/τ. Starting with the slope -1/τ², it then decreases exponentially to zero with the time constant τ. The maximum amplitude 1/τ can be reached, however, only if an ideal impulse function is used.

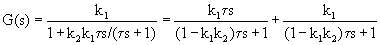

The weighting function of this low-pass filter is described by:

$$g(t) = \frac{1}{\tau}\, e^{-t/\tau}$$
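For a linear system, the weighting function is the time derivative of the step response. This sketch assumes $\tau = 1$ s and checks numerically that differentiating $h(t) = 1 - e^{-t/\tau}$ gives $g(t) = (1/\tau)e^{-t/\tau}$, with $g(0) = 1/\tau$ and initial slope $-1/\tau^2$.

```python
import numpy as np

tau = 1.0
t = np.linspace(0.0, 5.0, 50_001)          # time step 1e-4 s

h = 1.0 - np.exp(-t / tau)                 # step response h(t)
g = np.exp(-t / tau) / tau                 # weighting (impulse) response g(t)

dh_dt = np.gradient(h, t)                  # numerical derivative of the step response
print("max |dh/dt - g|:", np.max(np.abs(dh_dt - g)))
print("h(tau) =", h[10_000], " g(0) =", g[0])
print("initial slope of g:", (g[1] - g[0]) / (t[1] - t[0]))
```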

Figure 4.1 d shows the ramp response of a low-pass filter. It starts with a horizontal slope at the beginning of the ramp and asymptotically approaches a straight line that is parallel to the input function x(t) = kt. This line intersects the time axis at the value τ. For the sake of completeness, the Nyquist plot has also been given in Figure 4.1e. However, this will not be described in more detail here or in further examples, since it contains the same information given in the Bode plot, as we have already mentioned above.
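Solving $\tau\, dy/dt + y = kt$ with $y(0) = 0$ gives the ramp response $y(t) = k(t - \tau + \tau e^{-t/\tau})$. This response starts with zero slope and approaches the line $k(t - \tau)$. The sketch below uses k = 2 and τ = 1 s, chosen for illustration. It fits a straight line to the late part of the response and recovers the line's intersection with the time axis at t = τ.

```python
import numpy as np

def lowpass_ramp_response(t, k, tau):
    """Ramp response of a 1st-order low-pass to x(t) = k*t (t >= 0)."""
    return k * (t - tau + tau * np.exp(-t / tau))

k, tau = 2.0, 1.0
t = np.linspace(0.0, 20.0, 2001)
y = lowpass_ramp_response(t, k, tau)

late = t > 10.0 * tau                      # region where the exponential term has died out
slope, intercept = np.polyfit(t[late], y[late], 1)
initial_slope = (y[1] - y[0]) / (t[1] - t[0])
print(f"asymptote slope = {slope:.4f} (input slope k = {k})")
print(f"asymptote crosses the time axis at t = {-intercept / slope:.4f} (tau = {tau})")
print(f"initial slope of the ramp response = {initial_slope:.4f}")
```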

Fig. 4.2 The response of an insect muscle to an increasing and a decreasing step function. The input function (upper trace) is given by the experimentally induced spike frequency of the motoneuron. The output function (lower trace) shows the force developed by the muscle (after Iles and Pearson 1971)

Systems with low-pass characteristics can be found everywhere, since for physical reasons no system is able to transmit arbitrarily high frequencies. The most convenient way to investigate the properties of such a filter is to build it from electronic components. The simplest electronic low-pass filter is shown in the wiring diagram in Figure 4.1f. It consists of an ohmic resistor R and a capacitor C. The input function x(t), a voltage, is applied at ui, and the output function y(t) is measured as the voltage at u0. The time constant of this low-pass filter is τ = RC. Corresponding electronic circuits are also given for the systems discussed later. Alternatively, a low-pass filter can be simulated on a digital computer as described in Appendix II.
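A digital simulation of the RC circuit can be as simple as a forward-Euler integration of $\tau\, du_o/dt = u_i - u_o$. This is one common approach, not necessarily the method of Appendix II. The component values R = 10 kΩ and C = 100 µF are hypothetical and were chosen so that τ = RC = 1 s.

```python
import numpy as np

R = 10e3        # hypothetical resistor, 10 kOhm
C = 100e-6      # hypothetical capacitor, 100 uF
tau = R * C     # time constant tau = RC = 1 s

dt = 1e-3
t = np.arange(0.0, 5.0, dt)
u_i = np.ones_like(t)                  # input voltage: unit step at t = 0
u_o = np.zeros_like(t)                 # output voltage across the capacitor

for n in range(1, len(t)):
    # Forward-Euler step of tau * du_o/dt = u_i - u_o
    u_o[n] = u_o[n - 1] + dt / tau * (u_i[n - 1] - u_o[n - 1])

error = np.max(np.abs(u_o - (1.0 - np.exp(-t / tau))))
print(f"tau = {tau:.3f} s, max deviation from 1 - exp(-t/tau): {error:.1e}")
```

The deviation from the exact step response shrinks in proportion to the step size `dt`.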

A low-pass filter describes a system with sluggishness, i. e., one in which high frequency components are suppressed. Dynamic properties are described by the time constant τ or the corner frequency ω0.

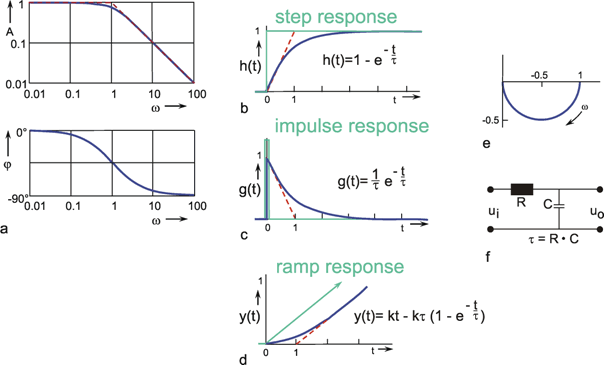

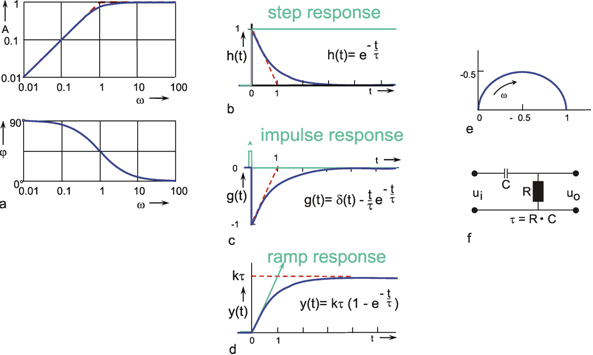

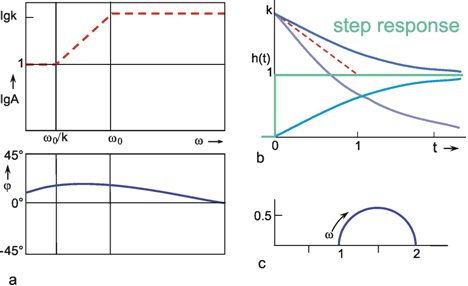

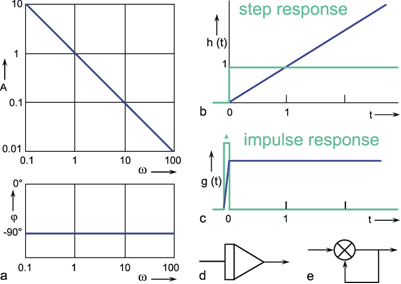

A high-pass filter permits only high frequencies to pass and suppresses the low-frequency components. This is shown by the amplitude frequency plot in Fig. 4.3a, which is the mirror image of that of the low-pass filter (Fig. 4.1). When logarithmic coordinates are used, the asymptote along the ascending branch of the amplitude frequency plot has the slope +1. As with the low-pass filter, the corner frequency is indicated by the intersection of the two asymptotes, and the time constant is again τ = 1/ω0. Here, however, oscillations with frequencies above the corner frequency are allowed to pass, while oscillations with frequencies markedly below it are suppressed.

Fig. 4.3 High-pass filter. (a) Bode plot consisting of the amplitude frequency plot (above) and the phase frequency plot (below). Asymptotes are shown by red dashed lines. A amplitude, ϕ phase, ω = 2πν angular frequency. (b) step response, (c) impulse response, (d) ramp response. In (b)-(d) the input functions are shown by green lines. The formulas given hold for t > 0. k describes the slope of the ramp function x(t) = kt. (e) polar plot. (f) Electronic wiring diagram, R resistor, C capacitor, τ time constant, ui input voltage, uo output voltage

As with the low-pass filter shown in Figure 4.1, the corner frequency is assumed to be ω0 = 1 s⁻¹ and the time constant τ = 1 s. The phase frequency plot shows a phase lead of the output oscillation relative to the input oscillation, which approaches +90° at low frequencies. At the corner frequency the phase lead amounts to +45°, and at high frequencies it asymptotically returns to zero.

The step response (Fig. 4.3b) clearly illustrates the qualitative properties of a high-pass filter. At the beginning of the step, the step response instantly jumps to the value 1. It then decreases exponentially to zero, with the initial slope -1/τ and the time constant τ, although the input function keeps its constant value of 1. The maximum amplitude is 1 because the amplification factor of this high-pass filter is assumed to be 1, as the position of the horizontal asymptote of the amplitude frequency plot shows. The amplification factor in the static part of the step response (static amplification factor) is always zero. By contrast, the value given by the horizontal asymptote of the amplitude frequency plot is called the maximum dynamic amplification factor kd (see also Chapter 5).

As in the case of the low-pass filter, the impulse response in Figure 4.3c is shown for a mathematically ideal impulse as input. The response also consists of an impulse. This impulse, however, does not simply return to zero but overshoots to -1/τ and then returns exponentially to zero with the time constant τ. The impulse response thus consists of the impulse function δ(t) and the exponential function $-(1/\tau)e^{-t/\tau}$. With an impulse function of finite duration, the duration of the impulse response and the amplitude of the negative part increase accordingly (for details, see Varju 1977).
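The step response of the 1st order high-pass filter, $e^{-t/\tau}$, equals 1 minus the step response of the low-pass filter with the same τ. A high-pass filter with gain 1 can therefore be simulated as the input minus the low-pass output. The sketch below uses this identity, which is an implementation choice rather than a procedure from the text, together with forward Euler and τ = 1 s. For the impulse response, a pulse of duration Δt and area 1 stands in for the ideal impulse. One sample later, the output has overshot to about -1/τ.

```python
import numpy as np

def lowpass(x, tau, dt):
    """1st-order low-pass, forward Euler: tau*dy/dt = x - y, starting at rest."""
    y = np.zeros_like(x)
    for n in range(1, len(x)):
        y[n] = y[n - 1] + dt / tau * (x[n - 1] - y[n - 1])
    return y

def highpass(x, tau, dt):
    """1st-order high-pass with gain 1, realized as input minus low-pass output."""
    return x - lowpass(x, tau, dt)

tau, dt = 1.0, 1e-3
t = np.arange(0.0, 6.0, dt)

h_hp = highpass(np.ones_like(t), tau, dt)       # step response
print("h(0) =", h_hp[0])                        # jumps to the dynamic gain 1
print("h(tau) =", h_hp[int(round(tau / dt))])   # about exp(-1)
print("h(6 tau) =", h_hp[-1])                   # approaches the static gain 0

impulse = np.zeros_like(t)
impulse[0] = 1.0 / dt                           # pulse of duration dt and area 1
g_hp = highpass(impulse, tau, dt)
print("g(dt) =", g_hp[1])                       # negative overshoot of about -1/tau
```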

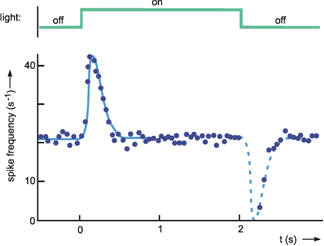

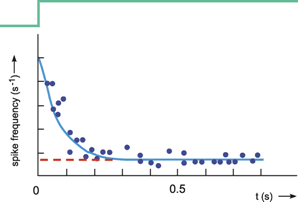

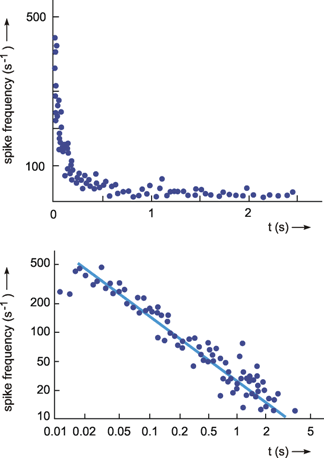

Whereas a low-pass filter could intuitively be called an inert element, a high-pass filter is an element that responds only to changes and not to permanently constant values at the input. Both kinds of behavior are encountered in sensory physiology. So-called tonic sensory cells respond in a low-pass manner, and so-called phasic sensory cells respond in a high-pass manner. The step response of such a high-pass-like sensory cell is shown in Figure 4.4.

Fig. 4.4 The response of a single fiber of the optic nerve of Limulus. As input function, light is switched on for 2 seconds (upper trace). The output function shows the instantaneous spike frequency (each dot is calculated as 1/Δt, Δt being the time difference between two consecutive spikes). The solid blue line shows an approximation of the data. The dashed part (response to the decreasing step) represents the mirror image of the first part of the approximation (after Ratliff 1965). Note that negative spike frequencies are not possible

This behavior in biological systems is frequently called adaptation. In mathematical terms, one could speak of a differentiating behavior since, especially with very short time constants, the first derivative of the input function is formed approximately by a high-pass filter.

The ramp response of this high-pass filter is shown in Figure 4.3d. It starts at t = 0 with the slope k of the input function and then, with the time constant τ, rises to the plateau value kτ. To obtain the time constant of the high-pass filter from the ramp response, the range of values available for the input function must be large enough for the output to reach the plateau while the ramp is still rising. Conversely, for a given input range A, the slope k of the ramp has to be correspondingly small. If we assume that the plateau is approximately reached after three time constants, then k ≤ A/(3τ).
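The rule k ≤ A/(3τ) follows from the ramp response of the high-pass filter, $k\tau(1 - e^{-t/\tau})$. After three time constants, the output has reached $1 - e^{-3}$, about 95%, of the plateau kτ. At the same time the input has reached 3kτ, which must not exceed the input range A. This small sketch uses the hypothetical values τ = 0.5 s and A = 6 (in input units).

```python
import numpy as np

def highpass_ramp_response(t, k, tau):
    """Ramp response of a 1st-order high-pass (gain 1) to x(t) = k*t: k*tau*(1 - exp(-t/tau))."""
    return k * tau * (1.0 - np.exp(-t / tau))

def max_ramp_slope(input_range, tau, n_tau=3.0):
    """Largest slope k for which the ramp stays within input_range until t = n_tau*tau."""
    return input_range / (n_tau * tau)

tau, A = 0.5, 6.0                 # hypothetical time constant (s) and input range
k = max_ramp_slope(A, tau)        # k <= A / (3*tau)
fraction = highpass_ramp_response(3.0 * tau, k, tau) / (k * tau)
print(f"k_max = {k}, output at 3*tau reaches {fraction:.1%} of the plateau k*tau")
```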

As for the low-pass filter, Figure 4.3e shows the polar plot of the high-pass filter and Figure 4.3f an electronic circuit. This circuit also consists of an ohmic resistor R and a capacitor C, but this time in reverse order. The time constant is again τ = RC. For a digital simulation, see Appendix II.

A high-pass filter describes a system that is sensitive only to changes in the input function. It suppresses the low-frequency components, which qualitatively corresponds to a mathematical differentiation. The dynamic properties are described by the time constant τ or the corner frequency ω0.

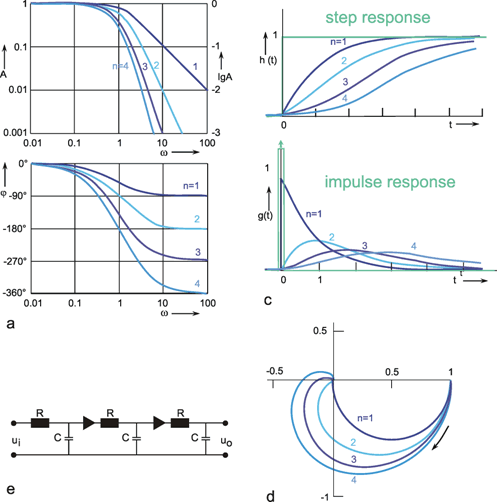

If n low-pass filters are connected in series, a low-pass filter of the n-th order is obtained. If two systems with specific amplification factors are connected in series, the amplification factor of the total system is the product of the two individual amplification factors. As mentioned in Chapter 3, the amplitude frequency plot of a system reflects the amplification factors valid for the various frequencies. To obtain the amplitude frequency plot of a 2nd order filter, we therefore have to multiply the amplitude values at each frequency (or, equivalently, add their logarithms). This is why the amplitude frequency plot of the Bode plot uses a logarithmic ordinate. With logarithms, the amplitude frequency plot of two serially connected filters is obtained simply by adding the amplitude frequency plots of the two individual filters point by point, as shown by the right-hand ordinate of Figure 4.5a (see also Figure 2.5).

In addition to the amplitude frequency plot of a 1st order low-pass filter (already shown in Figure 4.1), Figure 4.5a shows the amplitude frequency plots of low-pass filters of the 2nd, 3rd, and 4th order. These are obtained by connecting several identical 1st order low-pass filters in series. The corner frequency remains the same, but the absolute value of the slope of the asymptote along the descending part increases in proportion to the order of the filter (slope -n for an n-th order filter in logarithmic coordinates). This means that the higher the order of the low-pass filter, the more strongly high frequencies are suppressed. (Systems are conceivable in which the slope of the descending part of the amplitude frequency plot lies between the discrete values presented here. Such a system would then not be of integer order. For the mathematical treatment of such systems, the reader is referred to the literature, e.g., Varju 1977.)

If a sine wave oscillation undergoes a phase shift in a filter, and if several filters are connected in series, the total phase shift is obtained by linear addition of individual phase shifts. Since the value of the phase shift depends on the frequency, the phase frequency plot of several serially connected filters is obtained directly by a point-by-point addition of individual phase-frequency plots. For this reason, the phase frequency plot in the Bode plot is shown with a linear ordinate. Figure 4.5a shows that, at low frequencies, phase shifts are always near zero but for higher frequencies, they increase with the increasing order of the low-pass filter.
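The addition rules for series connections can be checked numerically. The sketch assumes identical 1st order low-pass filters with τ = 1 s, each with frequency response $1/(1 + j\omega\tau)$. For n such filters in series, the log amplitudes and the phases are n times those of one filter. The printed high-frequency slope of the log-log amplitude plot should therefore be close to -n, and the phase at ω0 = 1 should be -45° per filter.

```python
import numpy as np

def lowpass_response(omega, tau=1.0):
    """Complex frequency response of a single 1st-order low-pass filter."""
    return 1.0 / (1.0 + 1j * omega * tau)

omega = np.logspace(-2, 3, 501)               # 0.01 ... 1000 s^-1, 100 points per decade
single = lowpass_response(omega)

results = {}
for order in (1, 2, 3, 4):
    # Series connection: amplitudes multiply (log amplitudes add), phases add.
    log_amp = order * np.log10(np.abs(single))
    phase_deg = order * np.degrees(np.angle(single))
    # Slope of the log-log amplitude plot between omega = 100 and omega = 1000
    slope = (log_amp[-1] - log_amp[-101]) / (np.log10(omega[-1]) - np.log10(omega[-101]))
    results[order] = (log_amp, phase_deg, slope)
    print(f"order {order}: high-frequency slope {slope:.2f}, "
          f"phase at omega0 = 1: {phase_deg[200]:.0f} deg")
```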

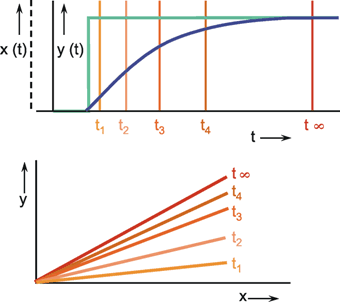

In the step responses (Fig. 4.5b), the initial slope decreases with increasing order, although the final value remains the same (presupposing, of course, the same static amplification factor). In the impulse responses shown in Figure 4.5c, both the initial slope and the maximal amplitude decrease with increasing order of the low-pass filter. The form of the impulse or step response of a filter of higher (e.g., 2nd) order can be understood as follows. The response of the first filter to the impulse (or step) function is at the same time the input function of the second filter. The second filter, in turn, modifies this new input function in the same qualitative way. In the case of low-pass filters, the output functions of the second low-pass filter will thus be much flatter.
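The intuitive picture, in which the step response of the first filter serves as the input of the second, translates directly into a simulation. This sketch uses two identical forward-Euler low-pass stages (τ = 1 s). It compares the result with the standard 2nd order formula $h(t) = 1 - (1 + t/\tau)e^{-t/\tau}$, which we assume matches the table in Appendix I. The initial slope drops from 1/τ for one stage to practically zero for two stages.

```python
import numpy as np

def lowpass(x, tau, dt):
    """1st-order low-pass, forward Euler: tau*dy/dt = x - y, starting at rest."""
    y = np.zeros_like(x)
    for n in range(1, len(x)):
        y[n] = y[n - 1] + dt / tau * (x[n - 1] - y[n - 1])
    return y

tau, dt = 1.0, 1e-3
t = np.arange(0.0, 8.0, dt)
step = np.ones_like(t)

h1 = lowpass(step, tau, dt)          # step response of the first filter ...
h2 = lowpass(h1, tau, dt)            # ... is the input function of the second filter
h2_exact = 1.0 - (1.0 + t / tau) * np.exp(-t / tau)

print("max deviation from the 2nd-order formula:", np.max(np.abs(h2 - h2_exact)))
print("initial slopes (1st, 2nd order):", (h1[1] - h1[0]) / dt, (h2[2] - h2[1]) / dt)
```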

Fig. 4.5 Low-pass filter of nth order. (a) Bode plot, A amplitude, ϕ phase, ω angular frequency. (b) step response, (c) impulse response, (d) polar plot. n = 1 to 4 refers to the order of the filter. In (b) and (c) the input functions are shown by green lines. (e) Electronic wiring diagram for n = 3. The triangles represent amplifiers with gain 1, R resistor, C capacitor, ui input voltage, uo output voltage

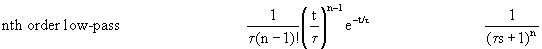

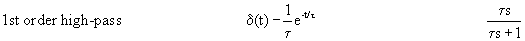

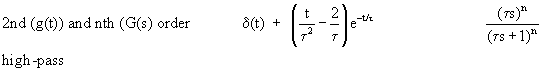

The formulas for the impulse and step responses of low-pass filters of higher order are given in the table in Appendix I. When a higher order filter is simulated by an electronic circuit, the individual filter circuits have to be connected via high-impedance amplifiers to avoid recurrent effects. These amplifiers are symbolized by triangles in Figure 4.5e. Figure 4.5d contains the polar plots of low-pass filters of the 1st through 4th order. The properties of low-pass filters that rely on recurrent effects are discussed in Chapter 4.9.

The Bode plot of two serially connected filters is obtained by adding the logarithmic ordinate values of the amplitude frequency plot and by linear summation of the phase frequency plot. Low-pass filters of higher order show stronger suppression and greater phase shifts of high frequency components.

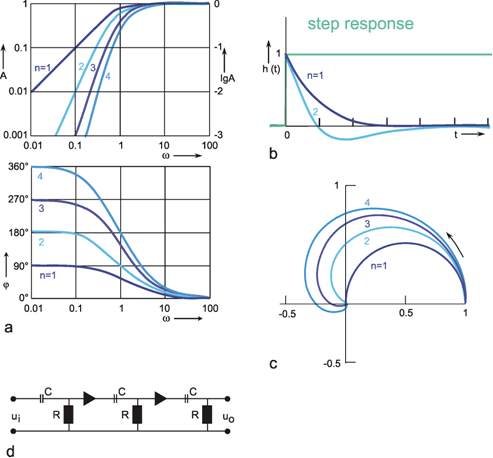

If n high-pass filters are connected in series, the result is called an n-th order high-pass filter. The Bode plot (Fig. 4.6a) and the polar plot (Fig. 4.6c) are mirror images of the corresponding curves of the low-pass filters shown in Figure 4.5. Here, too, the corner frequency remains unchanged, whereas the slope of the asymptote along the ascending part of the amplitude frequency plot increases in proportion to the order of the high-pass filter. For very high frequencies, the phase frequency plots asymptotically approach zero. For low frequencies, by contrast, the higher the order of the high-pass filter, the greater the phase lead.

Fig. 4.6 High-pass filter of nth order. (a) Bode plot, A amplitude, ϕ phase, ω angular frequency. (b) step response, (c) polar plot. n = 1 to 4 refers to the order of the filter. In (b) the input function is shown by green lines. (d) Electronic wiring diagram for n = 3. The triangles represent amplifiers with gain 1, R resistor, C capacitor, ui input voltage, uo output voltage

In addition to the step response of the 1st order high-pass filter (already shown in Figure 4.3b), Figure 4.6b shows the step response of the 2nd order high-pass filter. After time T (for two identical filters with time constant τ, T = τ), this response crosses the zero line and then asymptotically approaches the abscissa from below. The corresponding formula is given in the table in Appendix I. The origin of this step response can again be made clear intuitively. We take the step response of the first 1st order high-pass filter as the input function of a second 1st order high-pass filter. The steep rise at the beginning of this new input function produces a positive value at the output. Since the input function subsequently has a negative slope, the output function takes negative values after a certain time, which depends on the time constant. It eventually returns to zero as the input function gradually approaches a constant value.
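The same cascade idea explains the zero crossing of the 2nd order high-pass step response. For two identical 1st order high-pass filters, the step response is $(1 - t/\tau)e^{-t/\tau}$. It crosses zero at t = τ and has its minimum, $-e^{-2} \approx -0.135$, at t = 2τ. The sketch below uses forward Euler with τ = 1 s and realizes each high-pass as input minus low-pass output, which is an implementation choice. It reproduces this behavior numerically.

```python
import numpy as np

def lowpass(x, tau, dt):
    """1st-order low-pass, forward Euler: tau*dy/dt = x - y, starting at rest."""
    y = np.zeros_like(x)
    for n in range(1, len(x)):
        y[n] = y[n - 1] + dt / tau * (x[n - 1] - y[n - 1])
    return y

def highpass(x, tau, dt):
    """1st-order high-pass with gain 1, realized as input minus low-pass output."""
    return x - lowpass(x, tau, dt)

tau, dt = 1.0, 1e-3
t = np.arange(0.0, 10.0, dt)
step = np.ones_like(t)

h1 = highpass(step, tau, dt)        # 1st-order step response, exp(-t/tau)
h2 = highpass(h1, tau, dt)          # used as input of a second high-pass filter
h2_exact = (1.0 - t / tau) * np.exp(-t / tau)

T = t[np.argmax(h2 < 0.0)]          # first time the response becomes negative
print(f"zero crossing at T = {T:.3f} s (tau = {tau} s)")
print(f"minimum {h2.min():.4f} at t = {t[np.argmin(h2)]:.3f} s")
```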

In a similar way, we can imagine that the step response of a 3rd order high-pass filter, which is not shown here, crosses the zero line a second time and then asymptotically approaches the abscissa from the positive side. As an example, Figure 4.6d shows an electronic circuit with the properties of a 3rd order high-pass filter.

High-pass filters of higher order show stronger suppression and greater phase shifts of low-frequency components. Qualitatively, they can be regarded as producing the n-th derivative of the input function.

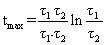

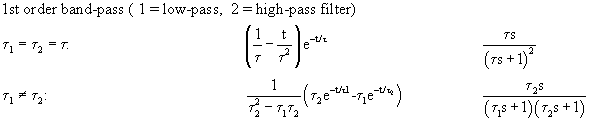

In practice, a pure high-pass filter does not occur, since no system can transmit arbitrarily high frequencies. Therefore, every real system with high-pass properties also has an upper corner frequency, which is given by the ever-present low-pass properties. Such a system thus transmits a "frequency band" that is limited on both sides, and we therefore speak of a band-pass filter. A band-pass filter is the result of a series connection of high- and low-pass filters. In linear systems, the serial order of the two filters is irrelevant. The amplitude and phase frequency plots of a band-pass filter can be obtained by logarithmic or linear addition, respectively, of the corresponding values of the individual filters, as described in Chapter 4.3.
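That the serial order is irrelevant in linear systems can be illustrated by simulating both orders. The time constants (0.1 s for the low-pass, 1 s for the high-pass) and the forward-Euler filters are assumptions chosen for illustration. The two outputs agree up to rounding error.

```python
import numpy as np

def lowpass(x, tau, dt):
    """1st-order low-pass, forward Euler: tau*dy/dt = x - y, starting at rest."""
    y = np.zeros_like(x)
    for n in range(1, len(x)):
        y[n] = y[n - 1] + dt / tau * (x[n - 1] - y[n - 1])
    return y

def highpass(x, tau, dt):
    """1st-order high-pass with gain 1, realized as input minus low-pass output."""
    return x - lowpass(x, tau, dt)

dt = 1e-3
t = np.arange(0.0, 5.0, dt)
step = np.ones_like(t)
tau_lp, tau_hp = 0.1, 1.0      # hypothetical time constants (s)

lp_then_hp = highpass(lowpass(step, tau_lp, dt), tau_hp, dt)
hp_then_lp = lowpass(highpass(step, tau_hp, dt), tau_lp, dt)
print("max difference between the two orders:", np.max(np.abs(lp_then_hp - hp_then_lp)))
```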

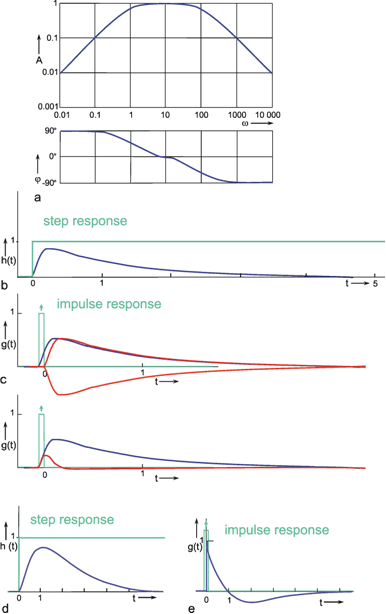

The Bode plot of a symmetrical band-pass filter (i. e., where the high- and low-pass filter are of the same order) is shown in Figure 4.7a. As can be seen from the slope of the asymptotes approaching the ascending and the descending parts of the amplitude frequency plot, the low-pass filter and the high-pass filter are both of 1st order. The corner frequency of the high-pass filter is 1 Hz, that of the low-pass filter 100 Hz in this example. Figure 4.7b, c show the step response and the impulse response of this system.

The amplitude frequency plot does not necessarily reveal the time constants or corner frequencies of the individual filters. As described in Chapters 4.1 and 4.2, the corner frequencies can be read from the intersection of the horizontal asymptote with the ascending asymptote (high-pass filter) or the descending asymptote (low-pass filter). A horizontal asymptote can be drawn, however, only if the corner frequency of the low-pass filter is sufficiently high compared with that of the high-pass filter, so that the amplitude frequency plot has a sufficiently long horizontal part. Sometimes the two corner frequencies are too close, as in the example shown in Figure 2.5, or the corner frequency of the low-pass filter is even lower than that of the high-pass filter. In these cases, the position of the horizontal asymptote cannot be determined from the amplitude frequency plot. (It should be stressed that the amplification factor need not always be 1, although this has been assumed in the examples used here for the sake of clarity.) In the band-pass filter shown in Figure 2.5, the upper and lower corner frequencies happen to be identical. As an illustration, the corresponding step response and impulse response of this system are shown in Figure 4.7d, e.

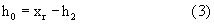

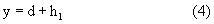

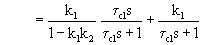

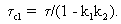

Contrary to the Bode plot, the step and the impulse responses of two serially connected filters cannot be computed as easily from the step or impulse responses of each filter. The corresponding formulas for this case, as for the general case of different time constants, are given in Appendix I . For computation of the step and impulse responses of symmetrical or asymmetrical bandpass filters of a higher order, the reader is also referred to Appendix l. For the case of a symmetrical 1st order band-pass filter of different time constants, i. e., τ1 (low-pass filter) ≠ τ2 (high-pass filter), the time when the step response reaches its maximum is given by

$$t_{\max} = \frac{\tau_1 \tau_2}{\tau_1 - \tau_2}\,\ln\frac{\tau_1}{\tau_2}$$
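The location of this maximum can be checked numerically. The Python sketch below assumes unit-gain 1st order filters (low-pass τ1 in series with high-pass τ2) and uses the closed-form step response y(t) = τ2/(τ1 - τ2)·(e^(-t/τ1) - e^(-t/τ2)), obtained by multiplying the two transfer functions; for τ1 = τ2 = τ it uses the limit (t/τ)·e^(-t/τ), which peaks at t = τ. The function names are illustrative and not taken from Appendix I.

```python
import math


def bandpass_step(t: float, tau1: float, tau2: float) -> float:
    """Step response of a unit-gain 1st order low-pass (tau1) in series
    with a unit-gain 1st order high-pass (tau2)."""
    if math.isclose(tau1, tau2):
        # Limit for identical time constants: (t/tau) * exp(-t/tau)
        return (t / tau1) * math.exp(-t / tau1)
    return tau2 / (tau1 - tau2) * (math.exp(-t / tau1) - math.exp(-t / tau2))


def t_max(tau1: float, tau2: float) -> float:
    """Closed-form time at which the step response peaks."""
    if math.isclose(tau1, tau2):
        return tau1
    return tau1 * tau2 / (tau1 - tau2) * math.log(tau1 / tau2)


def t_max_numeric(tau1: float, tau2: float, dt: float = 1e-3) -> float:
    """Brute-force search for the maximum on a time grid (for checking)."""
    n = int(10 * max(tau1, tau2) / dt)
    best = max(range(n), key=lambda i: bandpass_step(i * dt, tau1, tau2))
    return best * dt


print(t_max(1.0, 2.0), t_max_numeric(1.0, 2.0))
```

Note that the formula is symmetric in τ1 and τ2, so swapping the two time constants does not move the maximum.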

Fig. 4.7 Band-pass filter consisting of serially connected 1st order low-pass and high-pass filters. (a) Bode plot, A amplitude, ϕ phase, ω angular frequency, lower corner frequency (describing the high-pass properties): 1 Hz, upper corner frequency (describing the low-pass properties): 100 Hz. (b) Step response. (c) Impulse response. (d) and (e) Step and impulse responses of a band-pass filter whose upper and lower corner frequencies are identical (1 Hz). The Bode plot of this system is given in Fig. 2.5. In (b) to (e) the input functions are shown by green lines

A band-pass filter can be considered as a serial connection of low-pass and high-pass filters. Its dynamics can be described by a lower and an upper corner frequency, marking a "frequency band".

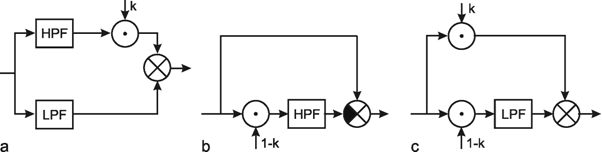

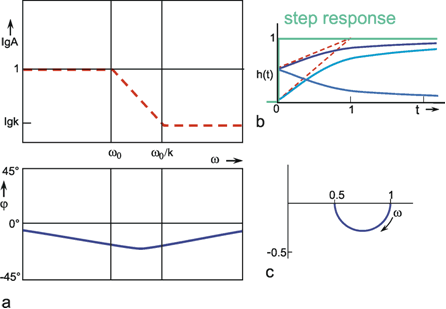

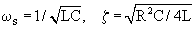

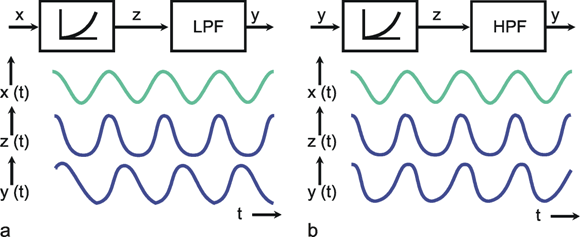

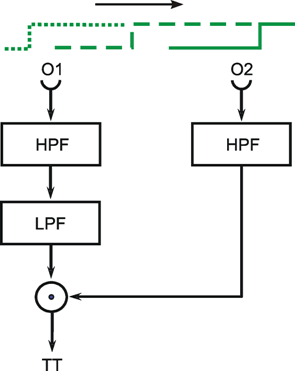

In the above section we have treated the basic types of filters and have shown how to connect them in series. Alternatively, filters might be connected in parallel. To discuss a simple example, we connect a low-pass filter and a high-pass filter with the same time constant in parallel. If the branch with the high-pass filter is endowed with an amplification k, and the outputs of both branches are added (Fig. 4.8a), we obtain different systems depending on the factor k. For the trivial case k = 0 the system corresponds to a simple low-pass filter.

Fig. 4.8 Three diagrams showing the same dynamic input-output behavior. (a) A parallel connection of a high-pass filter (HPF) and a low-pass filter (LPF). The high-pass branch is endowed with a variable gain factor k. (b) The corresponding system containing only a high-pass filter, and (c) only a low-pass filter

If k takes a value between 0 and 1, we obtain a lag-lead system. As we have seen for the series connection of two filters, the Bode plot of the total system can be computed from the individual Bode plots by simple addition, while the computation of the step response is more difficult (see Chapters 4.3 and 4.5). The opposite is true for a parallel connection. Here, the step response of the total system can be computed by a point-by-point addition of the step responses of the two individual branches. Figure 4.9b shows the step responses of the two branches for the case k = 0.5 by light blue lines, and the sum of both functions, i. e., the step response of the total system, by a dark blue line. At the start of the input step, this function thus jumps to the value k and then, with the initial slope (1 - k)/τ and the time constant τ, asymptotically approaches the final value 1.
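Because the branches are in parallel, the step response is simply the sum of the branch step responses. The sketch below assumes unit-gain 1st order filters with the same time constant τ, so that the low-pass step response is 1 - e^(-t/τ) and the high-pass step response is e^(-t/τ); their weighted sum is 1 - (1 - k)·e^(-t/τ). The same code also applies for k > 1.

```python
import math


def lowpass_step(t: float, tau: float) -> float:
    return 1.0 - math.exp(-t / tau)


def highpass_step(t: float, tau: float) -> float:
    return math.exp(-t / tau)


def parallel_step(t: float, tau: float, k: float) -> float:
    """Point-by-point sum of the low-pass branch and the k-weighted high-pass branch."""
    return lowpass_step(t, tau) + k * highpass_step(t, tau)


tau, k = 1.0, 0.5
dt = 1e-6
initial_value = parallel_step(0.0, tau, k)                        # equals k
initial_slope = (parallel_step(dt, tau, k) - initial_value) / dt  # close to (1 - k)/tau
final_value = parallel_step(20 * tau, tau, k)                     # close to 1
print(initial_value, initial_slope, final_value)
```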

If the amplitude frequency plot of the total system is to be determined from those of the two branches, the correct result will certainly not be obtained by a point-by-point multiplication (logarithmic addition), as is possible for series-connected filters. Nor does a linear addition produce the correct amplitude frequency plot of the total system. For a sine function at the input, each branch produces a sine function of the same frequency at its output, and adding these again yields a sine function. In general, however, the two branches produce different phase shifts, i. e., the maxima of the two output oscillations are shifted relative to each other. Because of this shift, the amplitude of the sum will normally be less than the sum of the amplitudes of the two individual sine waves. In computing the amplitude frequency plot of the total system, the phase shifts must therefore be taken into account.
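A short numerical sketch makes this concrete. It assumes unit-gain 1st order filters with a common time constant τ and represents each branch output at angular frequency ω as a complex number (amplitude and phase), using the standard frequency responses 1/(1 + jωτ) (low-pass) and jωτ/(1 + jωτ) (high-pass). Adding the complex values accounts for the phase shifts; adding only the magnitudes does not.

```python
import cmath
import math


def branches(omega: float, tau: float, k: float) -> tuple[complex, complex]:
    """Complex frequency responses of the low-pass branch and the k-weighted high-pass branch."""
    jwt = 1j * omega * tau
    return 1 / (1 + jwt), k * jwt / (1 + jwt)


tau, k = 1.0, 0.5
omega = 1 / tau          # at this frequency the two branch phases differ by 90 degrees
lp, hp = branches(omega, tau, k)
total = lp + hp          # phase-aware (complex) addition

print(f"|LP| = {abs(lp):.3f}, phase {math.degrees(cmath.phase(lp)):.1f} deg")
print(f"|HP| = {abs(hp):.3f}, phase {math.degrees(cmath.phase(hp)):.1f} deg")
print(f"|LP + HP| = {abs(total):.3f}  vs  |LP| + |HP| = {abs(lp) + abs(hp):.3f}")
```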

For the same reasons, the phase frequency plot cannot be computed in a simple manner, either. However, a rough estimate is possible for specific ranges both of the amplitude and the phase frequency plots (Fig. 4.9a). Thus, for very low frequencies, the contribution of the high-pass filter is negligible. The amplitude and phase frequency plots of the total system will therefore approximate those of the low-pass filter. This means that the horizontal asymptote of the amplitude frequency plot is about 1, and that, starting from zero, the phase frequency plot tends to take increasingly negative values.

Conversely, for high frequencies, it is the contribution of the low-pass filter that is negligible. The amplitude and phase frequency plots of the total system thus approximate those of the high-pass filter, and the amplitude frequency plot is endowed, in this range, with a horizontal asymptote at the amplification factor of k = 0.5. The phase frequency plot again approaches zero value from below.

Fig. 4.9 The lag-lead system, i. e., a parallel connection of a low-pass filter and a high-pass filter with k < 1 (see Fig. 4.8a). (a) The amplitude frequency plot is only shown by the three asymptotes. (b) The step responses of the two branches for the case k = 0.5 are shown by light blue lines, and the sum of both functions, i. e., the step response of the total system, by a dark blue line. The input function is shown by green lines. (c) polar plot for k = 0.5

In the middle range, the phase frequency plot lies between those of the high-pass filter and the low-pass filter. In Figure 4.9a, the amplitude frequency plot is indicated only by its asymptotes (a widely used representation in the literature). The asymptote in the middle range exhibits the slope -1 on a logarithmic scale. Its intersections with the two other asymptotes occur at the frequencies ω0 and ω0/k, where ω0 = 1/τ is the corner frequency of the two filters. The Nyquist plot of the lag-lead system is shown in Figure 4.9c.
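The asymptotes can be checked against the exact gain. Summing the two branches gives the standard lag-lead frequency response H(jω) = (1 + jωkτ)/(1 + jωτ) for unit-gain 1st order filters with a common time constant τ. The sketch below uses an illustrative small k so that the middle range is wide enough to measure a slope; it evaluates the low- and high-frequency gains, the log-log slope between ω0 and ω0/k, and the point where the middle asymptote meets the high-frequency asymptote.

```python
import math


def laglead_gain(omega: float, tau: float, k: float) -> float:
    """|H(j*omega)| for H = (1 + j*omega*k*tau) / (1 + j*omega*tau)."""
    return abs((1 + 1j * omega * k * tau) / (1 + 1j * omega * tau))


def loglog_slope(omega: float, tau: float, k: float, r: float = 1.01) -> float:
    """Local slope of the amplitude frequency plot in log-log coordinates."""
    g_hi, g_lo = laglead_gain(omega * r, tau, k), laglead_gain(omega / r, tau, k)
    return (math.log10(g_hi) - math.log10(g_lo)) / (2 * math.log10(r))


tau, k = 1.0, 0.01        # small k chosen only to separate the two corner frequencies
w0 = 1 / tau


def middle_asymptote(omega: float) -> float:
    """Line of slope -1 passing through gain 1 at w0."""
    return w0 / omega


print(laglead_gain(1e-4 * w0, tau, k))           # low-frequency asymptote: about 1
print(laglead_gain(1e6 * w0, tau, k))            # high-frequency asymptote: about k
print(loglog_slope(math.sqrt(w0 * w0 / k), tau, k))  # middle range: about -1
print(middle_asymptote(w0 / k))                  # meets the k asymptote at w0/k
```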

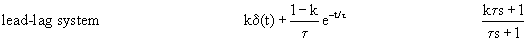

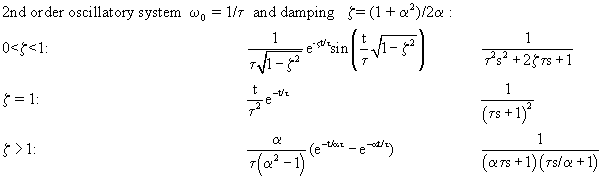

If the value of k is greater than 1, we speak of a lead-lag system. The step response is again obtained by a point-by-point addition of the low-pass response and the correspondingly amplified high-pass response, shown in Figure 4.10b by light blue lines. As in the previous case, it immediately jumps to the value k at the beginning of the step and then, with the initial slope (1 - k)/τ (now negative) and the time constant τ, decreases to the final value 1.

The amplitude frequency plot of the lead-lag system is indicated in Figure 4.10a only by its three asymptotes, as in the case of the lag-lead system. Since the value of k is greater than 1, the horizontal asymptote in the range of high frequencies appears here above the corresponding asymptote in the range of low frequencies. The two intersections of the three asymptotes again lie at the frequencies ω0 and ω0/k, whereas the asymptote in the middle range exhibits the slope +1 on a logarithmic scale.

Fig. 4.10 The lead-lag system, i. e., a parallel connection of a low-pass filter and a high-pass filter with k > 1 (see Fig. 4.8a). (a) The amplitude frequency plot is only shown by the three asymptotes. (b) The step responses of the two branches for the case k = 2 are shown by light blue lines, and the sum of both functions, i. e., the step response of the total system, by a dark blue line. The input function is shown by green lines. (c) polar plot for k = 2

Fig. 4.11 Step response of a mammalian muscle spindle. When the muscle spindle is suddenly elongated (green line), the sensory fibers show a sharp increase in spike frequency which later drops to a new constant level which is, however, higher than it was before the step. Ordinate in relative units (after Milhorn 1966)

In Chapter 3, it was pointed out that an edge of a function is produced by high-frequency components. The large amplification factor in the high-frequency range, visible in the amplitude frequency plot, thus corresponds to the "over-representation" of the edge in the step response. Because of the larger amplification factor in the high-pass branch, the high-pass properties predominate to such an extent that the phase shift is positive over the entire frequency range. As expected from the discussion above, however, the phase shift approaches zero asymptotically both for very high and for very low frequencies. The corresponding Nyquist plot is shown in Figure 4.10c.
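The statement that the phase shift of the lead-lag system stays positive can be verified numerically. The sketch assumes unit-gain 1st order filters with a common time constant τ, giving H(jω) = (1 + jωkτ)/(1 + jωτ), and uses k = 2 as in Fig. 4.10; the phase is then arctan(ωkτ) - arctan(ωτ).

```python
import cmath
import math


def leadlag_phase_deg(omega: float, tau: float, k: float) -> float:
    """Phase (degrees) of H = (1 + j*omega*k*tau) / (1 + j*omega*tau)."""
    return math.degrees(cmath.phase((1 + 1j * omega * k * tau) / (1 + 1j * omega * tau)))


tau, k = 1.0, 2.0
omegas = [10 ** (e / 10) for e in range(-40, 41)]   # 1e-4 ... 1e4 rad/s, 10 points per decade
phases = [leadlag_phase_deg(w, tau, k) for w in omegas]

print(min(phases) > 0)          # positive over the whole range
print(phases[0], phases[-1])    # both close to zero
print(max(phases))              # largest phase lead, in the middle range
```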

The behavior of a lead-lag system thus corresponds to that of the most widespread type of sensory cell, the so-called phasic-tonic sensory cell. Muscle spindles, for example, exhibit this type of step response (Fig. 4.11). (It should be noted, however, that there are also nonlinear elements which show similar behavior.)

If a lead-lag system is connected in series with a low-pass filter, the phasic part of the former can compensate for a considerable amount of the inert properties of the low-pass filter. This might be one reason for the abundance of phasic-tonic sensory cells (see also Chapter 8.6).
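A simple simulation illustrates this compensation. It is a sketch under explicit assumptions: a low-pass filter with time constant T is preceded by a lead-lag system whose parameters are chosen (hypothetically) so that kτ = T, and all filters are simulated with a simple Euler step of width dt. The lead-lag output is computed as k·x + (1 - k)·(low-pass of x), which for equal time constants matches the parallel connection of Fig. 4.8a. The time needed to reach 1 - 1/e (about 63 %) of the final value is compared with and without the lead-lag stage.

```python
import math


def rise_time(with_leadlag: bool, T: float = 1.0, k: float = 4.0,
              dt: float = 1e-3, t_end: float = 5.0) -> float:
    """Time for the unit step response to reach 1 - 1/e of its final value."""
    tau = T / k            # assumption: lead-lag parameters chosen so that k * tau = T
    lp_ll = 0.0            # state of the low-pass inside the lead-lag stage
    y = 0.0                # state of the inert low-pass filter (time constant T)
    target = 1 - math.exp(-1)
    for i in range(int(t_end / dt)):
        x = 1.0                                   # unit step input
        if with_leadlag:
            lp_ll += dt / tau * (x - lp_ll)
            x = k * x + (1 - k) * lp_ll           # lead-lag (phasic-tonic) output
        y += dt / T * (x - y)                     # inert low-pass filter
        if y >= target:
            return (i + 1) * dt
    return math.inf


print(rise_time(False), rise_time(True))   # roughly T and T/k
```

With this particular choice of parameters the series connection behaves like a low-pass filter that is k times faster; other parameter choices give a partial compensation.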

The system addressed here is described by the diagram shown in Figure 4.8a, but the connection shown in Figure 4.8b exhibits the same input-output relations. Here, the response of the system can be thought of as the input function itself minus its high-pass response multiplied by the factor 1-k. This direct summation of the input onto the output might be called "feed forward summation". The connection shown in Figure 4.8c also exhibits the same properties. Here, the input function multiplied by the factor k is added to the corresponding low-pass response multiplied by the factor (1-k).
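The equivalence of the three diagrams follows from the fact that, for equal time constants, the low-pass and high-pass frequency responses add up to 1: 1/(1 + jωτ) + jωτ/(1 + jωτ) = 1. The Python sketch below assumes unit-gain 1st order filters, evaluates the three connections of Fig. 4.8 at several frequencies and gains, and checks that they coincide. The function names are illustrative.

```python
def lp(omega: float, tau: float) -> complex:
    return 1 / (1 + 1j * omega * tau)


def hp(omega: float, tau: float) -> complex:
    return 1j * omega * tau / (1 + 1j * omega * tau)


def fig_4_8a(omega: float, tau: float, k: float) -> complex:
    """Low-pass plus k-weighted high-pass."""
    return lp(omega, tau) + k * hp(omega, tau)


def fig_4_8b(omega: float, tau: float, k: float) -> complex:
    """Input minus (1 - k)-weighted high-pass ("feed forward summation")."""
    return 1 - (1 - k) * hp(omega, tau)


def fig_4_8c(omega: float, tau: float, k: float) -> complex:
    """k-weighted input plus (1 - k)-weighted low-pass."""
    return k + (1 - k) * lp(omega, tau)


tau = 0.2
for k in (0.0, 0.5, 2.0):
    for omega in (0.1, 1.0, 5.0, 50.0):
        a, b, c = fig_4_8a(omega, tau, k), fig_4_8b(omega, tau, k), fig_4_8c(omega, tau, k)
        assert abs(a - b) < 1e-12 and abs(a - c) < 1e-12
print("all three connections agree")
```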

To determine the transfer properties of filters connected in parallel, it is simpler to consider step responses than frequency responses. Lag-lead and lead-lag systems are simple examples of parallel connected high-pass and low-pass filters. The lead-lag system corresponds to the so-called phasic-tonic behavior of many neurons, in particular sensory cells.

Especially in biological systems, a pure time delay is frequently observed, in which all frequencies are transmitted without a change of amplitude and merely with a certain time delay or dead time T. The form of the input function thus remains unchanged; the entire input function is, however, shifted to the right by the amount T. Such pure time delays occur whenever data are transmitted with finite speed. This occurs, for example, in the transmission of signals along nerve tracts or in the transport of hormones in the bloodstream.

Since the amplitudes are not influenced by a pure time delay, the amplitude frequency plot is a horizontal line at the level of the amplification factor (Fig. 4.12a). The phase shift, on the other hand, takes increasingly negative values with increasing frequency, such that ϕ(ω) = -ωT. The reason is that the dead time is constant while the oscillation period decreases at higher frequencies, so that the dead time becomes larger relative to the period. The value of the dead time can be read from the phase frequency plot as follows: it equals the oscillation period 1/ν at the frequency ν = ω/2π at which the phase shift is exactly -2π or -360°. Unlike the phase frequency plots of the other elements discussed so far, the phase frequency plot of a pure time delay therefore does not approach a finite limiting value. Rather, the phase shift grows without bound as the frequency increases. The corresponding Nyquist plot is shown in Figure 4.12c. (Confusion may be caused by the fact that, in some textbooks, the oscillation period T = 1/ν, the dead time (as in this text), and the time constant may all be referred to by the letter T.)
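The relation ϕ(ω) = -ωT and the rule for reading off the dead time can be written out directly. In this sketch the frequency response of a pure time delay is taken as e^(-jωT) with unit amplification factor. The phase is computed from the formula rather than with cmath.phase, because the latter wraps angles into the range (-180°, 180°] and would hide the unbounded growth. The dead time of 0.05 s is an arbitrary illustrative value.

```python
import cmath
import math


def delay_response(nu: float, T: float) -> complex:
    """Frequency response exp(-j*omega*T) of a pure time delay, omega = 2*pi*nu."""
    return cmath.exp(-1j * 2 * math.pi * nu * T)


def delay_phase_deg(nu: float, T: float) -> float:
    """Unwrapped phase -omega*T, in degrees."""
    return -360.0 * nu * T


def dead_time_from_phase(nu: float, phase_deg: float) -> float:
    """Dead time recovered from one point of the phase frequency plot."""
    return -phase_deg / (360.0 * nu)


T = 0.05                                   # dead time in seconds (illustrative)
for nu in (1.0, 5.0, 20.0, 40.0):
    print(nu, abs(delay_response(nu, T)), delay_phase_deg(nu, T))

# At nu = 20 Hz the phase is -360 deg, so the dead time equals the period 1/nu.
print(dead_time_from_phase(20.0, -360.0))
```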

An electronic system endowed with the properties of a pure dead time requires quite a complicated circuit. However, it can be simulated very simply using a digital computer (see Appendix II).
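One common way to simulate a dead time on a digital computer is a first-in, first-out buffer holding the last n samples, so that with a sampling interval dt the delay is T = n·dt. The sketch below uses Python's `collections.deque`; it illustrates the idea and is not necessarily the procedure given in Appendix II. The class name `DeadTime` is hypothetical.

```python
from collections import deque


class DeadTime:
    """Pure time delay of n_steps samples (T = n_steps * dt), as a FIFO buffer."""

    def __init__(self, n_steps: int, initial: float = 0.0):
        self.buffer = deque([initial] * n_steps)

    def step(self, x: float) -> float:
        """Push the newest input sample and return the sample from n_steps ago."""
        if not self.buffer:          # zero delay: pass the input straight through
            return x
        self.buffer.append(x)
        return self.buffer.popleft()


delay = DeadTime(n_steps=3)
outputs = [delay.step(x) for x in [1.0, 2.0, 3.0, 4.0, 5.0]]
print(outputs)   # [0.0, 0.0, 0.0, 1.0, 2.0]
```

The form of the input sequence is preserved exactly; it only appears three samples later, which is the discrete counterpart of shifting the input function to the right by T.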

Fig. 4.12 Pure time delay. (a) Bode plot, A amplitude, ϕ phase, ω = 2πν angular frequency. (b) step response, the input function is shown by green lines, T dead time. (c) polar plot

A pure time delay or dead time unit does not influence the amplitudes of the frequency components but shifts their phases by an amount that grows in proportion to frequency (ϕ = -ωT).

An Asymmetrical Band-Pass Filter

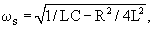

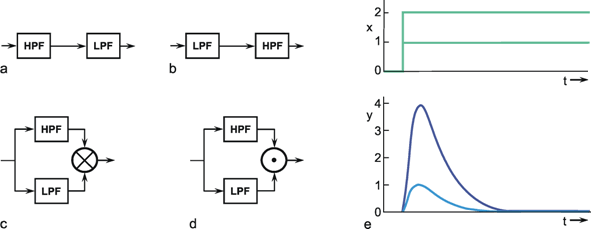

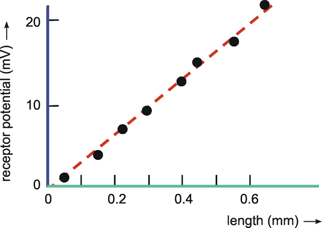

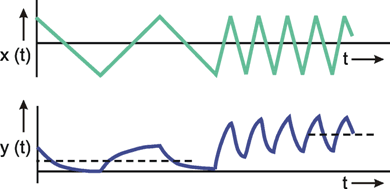

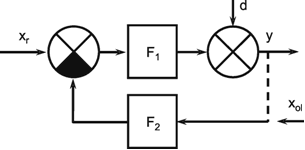

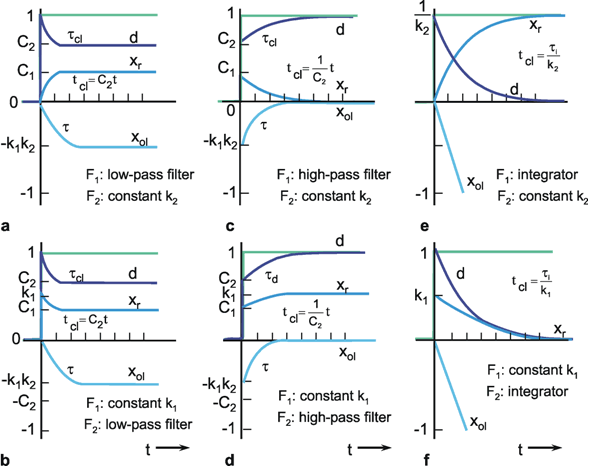

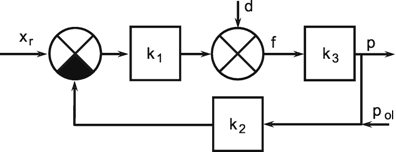

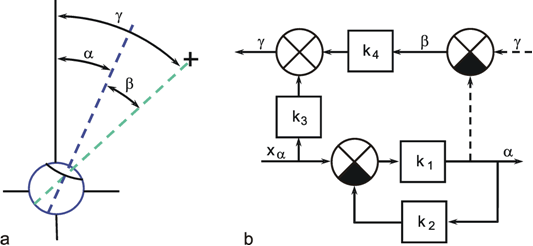

In the following experiment, a visual reflex of an insect is investigated. A locust is held by the thorax, and a pattern consisting of vertical black and white stripes is moved sinusoidally in the horizontal direction in front of it (Thorson 1966). According to the well-known optomotor response, the animal tries to follow the movement of the pattern by moving its head correspondingly. However, the head is fixed to a force transducer, which measures the forces the animal develops with its neck muscles when trying to move its head. The amplitude (in dB) and the phase shift of the sinusoidally varying forces are shown in Fig. B1.1. How could this result be interpreted in terms of filter theory? Regarding the amplitude frequency plot, the slope of the asymptote for high frequencies (i. e., > 0.5 Hz) is approximately -1, indicating a 1st order low-pass filter. Note that 20 dB correspond to one decade (Chapter 2.3). The asymptote for low frequencies has a slope of approximately 0.5 and thus has to be interpreted as a high-pass filter of 0.5th order. Thus, as a first interpretation, we could describe the system as a serial connection of a 1st order low-pass filter and a 0.5th order high-pass filter. Does the phase frequency plot correspond to this assumption? A high-pass filter of 0.5th order should produce a maximum phase shift of 45° for low frequencies, as was actually found. For high frequencies, a 1st order low-pass filter should lead to a maximum phase shift of -90°. However, a somewhat larger phase shift is observed. Thus, the results can only be described approximately by a band-pass filter consisting of a 1st order low-pass filter and a 0.5th order high-pass filter. The larger phase shifts could be caused by a pure time delay. The step response revealed a dead time of 40 ms. For a frequency of 1 Hz, this leads to an additional phase shift of about 15°, and for 2 Hz to a phase shift of about 30°.

The phase shifts produced by such a pure time delay for the three highest frequencies used in the experiment are shown by open circles. These shifts correspond approximately to the differences between the experimental results and the values expected for the band-pass filter. Thus, the Bode plot of the system can reasonably be described by an asymmetrical band-pass filter and a pure time delay connected in series.
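The additional phase lag attributed to the dead time can be recomputed from ϕ = -ωT = -360°·ν·T, using the 40 ms dead time obtained from the step response. The asymptotic phase values of the filter part follow from the rule that an n-th order high-pass filter tends to +n·90° at low frequencies and an n-th order low-pass filter to -n·90° at high frequencies, applied here to the orders proposed for the locust data.

```python
T = 0.040                      # dead time from the step response, in seconds


def delay_phase_deg(nu_hz: float) -> float:
    """Phase shift (degrees) added by the pure time delay T at frequency nu."""
    return -360.0 * nu_hz * T


for nu in (1.0, 2.0):
    print(f"{nu:.0f} Hz: {delay_phase_deg(nu):.1f} deg")   # about -15 and -30 degrees

# Asymptotic phase limits of the proposed band-pass part
hp_order, lp_order = 0.5, 1.0
print("low-frequency limit:", hp_order * 90.0, "deg")      # 45 deg
print("high-frequency limit:", -lp_order * 90.0, "deg")    # -90 deg
```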

Fig. B1.1 Bode plot of the forces developed by the neck muscles of a locust. Input is the position of a sinusoidally moved visual pattern. ν is stimulus frequency. The gain is measured in dB (after Thorson 1966)

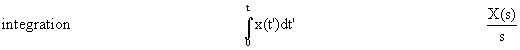

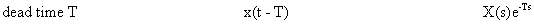

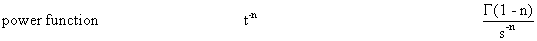

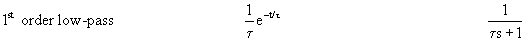

Whenever integration is carried out in a system (e. g., if, in a mechanical system, force (or acceleration) is to be translated into speed, or the latter is to be translated into position), such a process is described mathematically by an integrator. Integration is also performed within the nervous system. For example, in the case of the semicircular canals, integrators have to ensure that the angular acceleration actually recorded by these sense organs is transformed somewhere in the central nervous system into the angular velocity perceived by the subject.

The step response of an integrator is a line ascending with the slope 1/τi from the beginning of the step (Fig. 4.13b). τi is called the integration time constant. It denotes the time that elapses before the response to a unit step reaches the value 1. The "amplification factor" of an integrator is thus inversely proportional to τi (k = 1/τi) and hence has the dimension s⁻¹. In accordance with the properties of an integrator, the impulse response jumps to value 1 and remains there (Fig. 4.13c). The amplitude frequency plot of an integrator consists of a line descending with the slope -1 in the logarithmic plot. This means that the lower the input frequency, the more the amplitudes are increased, and that high-frequency oscillations are considerably damped. The amplitude frequency plot crosses the line for the amplification value 1 at ω = 1/τi. For all frequencies, the phase shift is a constant -90°. Figure 4.13d shows the symbol normally used for an integrator.
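These properties follow from the frequency response 1/(jωτi), the standard form for an integrator with integration time constant τi (an assumption consistent with k = 1/τi above). The sketch evaluates gain and phase and the ramp-shaped step response t/τi; τi = 0.5 s is an arbitrary example value.

```python
import cmath
import math

tau_i = 0.5                    # integration time constant in seconds (example value)


def integrator_response(omega: float) -> complex:
    """Frequency response 1 / (j * omega * tau_i)."""
    return 1 / (1j * omega * tau_i)


def integrator_step(t: float) -> float:
    """Unit step response: a ramp with slope 1/tau_i."""
    return t / tau_i


w_cross = 1 / tau_i
gain_at_cross = abs(integrator_response(w_cross))                        # 1 at omega = 1/tau_i
decade_ratio = gain_at_cross / abs(integrator_response(10 * w_cross))   # 10, i.e., slope -1
phase = math.degrees(cmath.phase(integrator_response(0.3)))             # -90 at any frequency
print(gain_at_cross, decade_ratio, phase, integrator_step(tau_i))
```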

An integrator might also be described by a system whose output depends not only on the actual input but also on its earlier state. This requires the capability of storing the earlier value. Therefore, an integrator can also be represented by a unit with recurrent summation (Fig. 4.13e). This makes it easy to implement an integrator in a digital computer program (see Appendix II).
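A minimal sketch of the recurrent summation: at each time step, the new output is the stored previous output plus the current input scaled by dt/τi, i.e., a rectangle-rule approximation of the integral. The sampling interval and τi are illustrative assumptions, the function name is hypothetical, and Appendix II may organize the computation differently.

```python
def integrate(samples: list[float], dt: float, tau_i: float) -> list[float]:
    """Discrete integrator: y[n] = y[n-1] + x[n] * dt / tau_i."""
    y = 0.0                        # stored earlier output
    out = []
    for x in samples:
        y = y + x * dt / tau_i     # recurrent summation
        out.append(y)
    return out


dt, tau_i = 0.01, 0.5
step = [1.0] * 100                 # unit step lasting 1 s
response = integrate(step, dt, tau_i)
print(response[49], response[99])  # about 1 after tau_i = 0.5 s, about 2 after 1 s
```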

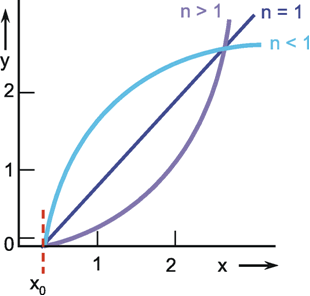

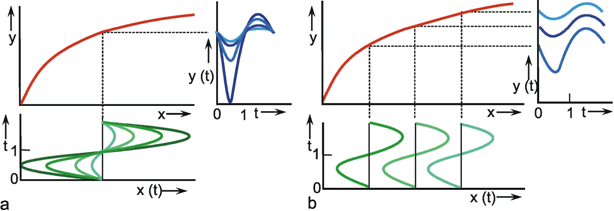

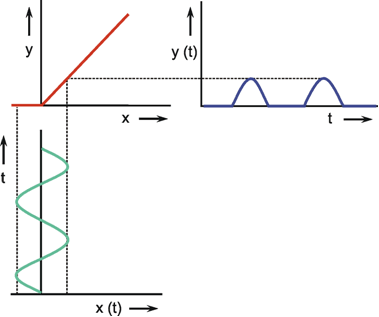

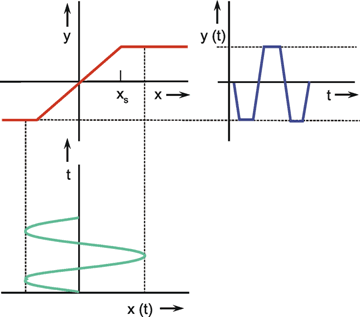

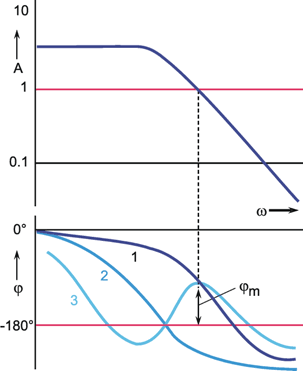

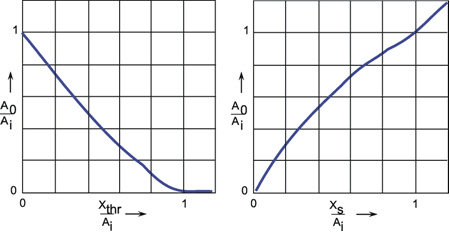

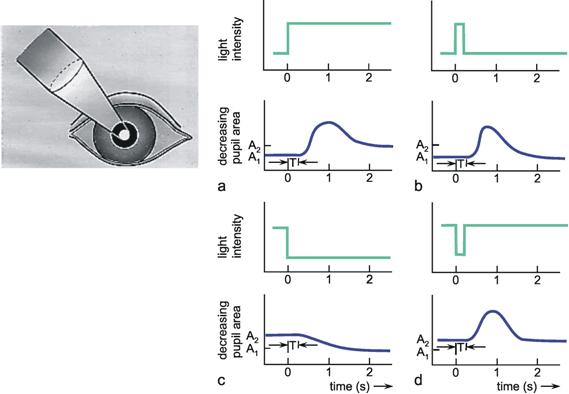

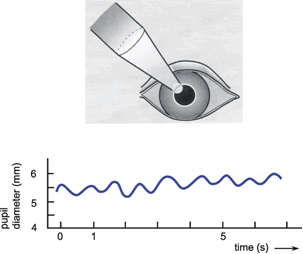

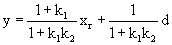

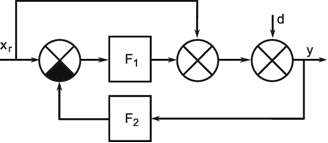

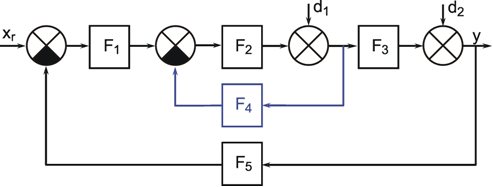

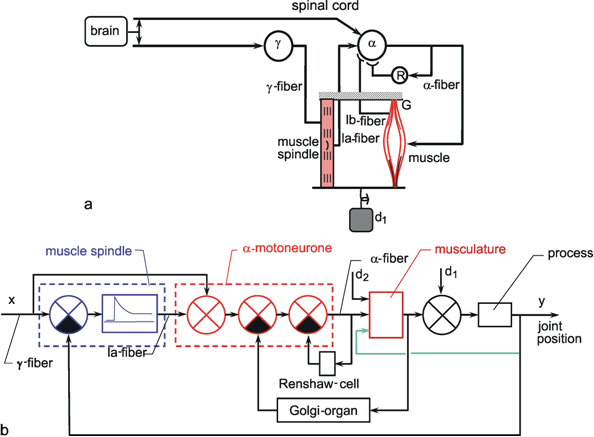

Fig. 4.13 Integrator. (a) Bode plot, A amplitude, ϕ phase, ω angular frequency. (b) step response. (c) Impulse response. In (b) and (c) the input functions are shown by green lines. (d) symbolic representation of an integrator. (e) An integrator can also be realized by a recurrent summation