The Role of Consciousness in Memory

*Stan Franklin, Computer Science Department, Institute for Intelligent Systems, The University of Memphis, Memphis, TN 38152, USA, Phone: (901) 678-3142, Fax: (901) 678-2480, franklin@memphis.edu

urn:nbn:de:0009-3-1505

Abstract. Conscious events interact with memory systems in learning, rehearsal and retrieval (Ebbinghaus 1885/1964; Tulving 1985). Here we present hypotheses that arise from the IDA computational model (Franklin, Kelemen and McCauley 1998; Franklin 2001b) of global workspace theory (Baars 1988, 2002). Our primary tool for this exploration is a flexible cognitive cycle employed by the IDA computational model and hypothesized to be a basic element of human cognitive processing. Since cognitive cycles are hypothesized to occur five to ten times a second and include interaction between conscious contents and several of the memory systems, they provide the means for an exceptionally fine-grained analysis of various cognitive tasks. We apply this tool to the small effect size of subliminal learning compared to supraliminal learning, to process dissociation, to implicit learning, to recognition vs. recall, and to the availability heuristic in recall. The IDA model elucidates the role of consciousness in the updating of perceptual memory, transient episodic memory, and procedural memory. In most cases, memory is hypothesized to interact with conscious events for its normal functioning. The methodology of the paper is unusual in that the hypotheses and explanations presented are derived from an empirically based, but broad and qualitative, computational model of human cognition.

Keywords: Global Workspace, GWT, IDA, episodic memory, working memory, perceptual associative memory, procedural memory, availability heuristic, process dissociation, consciousness, cognition, model

In this paper we employ a theoretical methodology that is quite different from the one currently standard in the experimental literature. Although the model is heavily based on experimental findings in cognitive psychology and brain science, it is only qualitatively consistent with experiments. Rather than fitting data, we derive a large number of hypotheses from an unusual computational model of cognition, the IDA/GW model. The model is unusual in two significant ways. First, it functionally integrates a particularly broad swath of cognitive faculties. Second, rather than predicting numerical data from experiments, it is implemented as a software agent, IDA, that performs a real-world personnel task for the US Navy. The IDA/GW model generates hypotheses about human cognition by way of its design, the mechanisms of its modules, their interaction, and its performance. All of these hypotheses are, in principle, testable. With the advent of more sophisticated brain and behavioral assessment methods, some earlier hypotheses in this research program have been confirmed (Baars, 2002). We expect the current set of hypotheses to become directly testable with continuing improvements in cognitive neuroscience.

Every agent must sample and act on its world through a sense-select-act (or stimulus, cognition, response) cycle. The IDA/GW model hypothesizes for us humans a complex cognitive cycle, involving perception, several memory systems, attention and action selection, that samples the world five to ten times a second. This frequent sampling allows for an exceptionally fine-grained analysis of common cognitive phenomena such as process dissociation, recognition vs. recall, and the availability heuristic. At a high level of abstraction, these analyses, which are included in the paper, support the commonly held explanations of what is generally found in studies of the explicit (i.e., conscious and reportable) components of memory processes (e.g., Tulving, 1985; Baddeley et al, 2001). In that respect there is nothing new here. At a finer-grained level, however, our analyses flesh out these common explanations, adding detail and functional mechanisms. Therein lies the value of our analyses.

In addition, this paper employs the word “consciousness” or “conscious cognition” to indicate a general cognitive function, much as the word “memory” has come to be used. Conscious cognition is often labeled in many different ways in the empirical literature, including “explicit cognition,” “focal attention,” “awareness,” “strategic processing,” and the like. In the current approach we group all these specific terms under the umbrella of “conscious cognition,” as assessed by standard methods such as verifiable verbal report. GW Theory proposes a single underlying kind of information processing for conscious events, as implemented in the IDA model.

Current techniques for studying these phenomena at a fine-grained level, such as PET, fMRI, EEG and implanted electrodes, are still lacking in scope, in spatial resolution, or in temporal resolution. PET and fMRI have limited temporal resolution, EEG is well known to have localization difficulties, and implanted electrodes (in epileptic patients), while excellent in temporal and spatial resolution, can only sample a limited number of neurons; that is, they are limited in scope. As a result, many of our hypotheses, while testable in principle, seem difficult to test at present. Improved recording methods are emerging rapidly in cognitive neuroscience. When GW theory was first proposed, its core hypothesis of “global activation” or “global broadcasting” was not directly testable in human subjects. Since then, however, with the advent of brain imaging, widespread brain activation due to conscious, but not unconscious, processes has been found in dozens of studies (see Baars, 2002; Dehaene, 2001). We expect further improvements to make our current hypotheses testable as well.

The IDA/GW model has unusual breadth, encompassing perception, working memory, declarative memory, attention, decision-making, procedural learning and more. This breadth may raise questions. How can such a broad model produce anything useful? The model suggests that superficially different aspects of human cognition are so highly integrated that they cannot be fully understood in a fragmentary manner. A more global view may reveal surprising points of simplification. Our analyses of a number of empirical phenomena below suggest the existence of common cognitive mechanisms across many tasks.

Human memory seems to come in myriad forms: sensory, procedural, working, declarative, episodic, semantic, long-term memory, long-term working memory and perhaps others. How are we to make sense of all of this? To add to the difficulty, these terms are used differently in different research traditions. Psychologists tend to use these terms to refer inferentially to systems that appear to hold memory traces and to the underlying knowledge that constitutes their contents. To computer scientists and to neuroscientists, memory refers only to the physical (not inferred) storage device. Further, in many cognitive studies, consciousness is either taken for granted or labeled with its own set of synonyms such as explicit cognition, focal attention, and awareness. Yet the role of consciousness has concerned memory researchers since Ebbinghaus (1885/1964).

Because the question of the role of conscious cognition in memory is so broad, we attempt to approach it in a qualitative way, rather than trying to make precise, quantitative, but local, predictions (Broadbent 1958, Posner 1982).

The following empirical methods have been used to dissociate conscious and unconscious aspects of learning, memory storage and retrieval. They must therefore be accounted for by an adequate theory of the role of conscious cognition in memory.

-

Subliminal vs. supraliminal learning. Although subliminal acquisition of information appears to occur, the effect sizes are quite small compared to conscious learning. In a classic study, Standing (1973) showed that 10,000 distinct pictures could be learned with 96% recognition accuracy after only 5 seconds of conscious exposure to each picture. No intention to learn was needed. Consciously learned educational material has been recalled after 50 years (Bahrick, 1984). No subliminal learning effects of comparable size or durability have been reported (Greenwald et al, 1996; Elliott & Dolan, 1998). Conscious access greatly facilitates most types of learning.

-

Process dissociation is one of the most widely used techniques for separating consciously mediated and nonconscious components of memory retrieval (Jacoby 1991). In a typical example, subjects are asked to avoid reporting items that they learned in a previous list (so-called exclusion instructions). Items that are nevertheless reported, in spite of the conscious aim of excluding them, are assumed to be reported without conscious access to their source; that is, they reflect unconscious source knowledge. The probabilities of reporting studied items under inclusion and exclusion instructions can be measured, and, given certain assumptions, the degree of unconscious source knowledge can be estimated from them. In this fashion, conscious and unconscious aspects of memorized lists can be dissociated.

-

Implicit learning. In this set of paradigms subjects are always asked to attend to (and therefore become conscious of) a set of stimuli. The organizing regularities among the attended stimuli, such as the grammars that generated those stimuli, can be shown to be learned even though people are not generally aware of them. Nevertheless, because the stimulus set is always conscious, conscious access appears to be a necessary condition even for implicit learning of inferred rules and regularities (Reber et al, 1991; Baars, 1988).

-

Recall vs. recognition. There is considerable evidence that people are conscious of retrieved memories in recall, but not necessarily in recognition tasks (e.g., Gardiner et al, 1998). Indeed, for pioneering memory researchers like Ebbinghaus, the term “recall” meant retrieval to consciousness. The feeling of knowing that characterizes recognition is a “fringe conscious” phenomenon, that is, an event that has high accuracy but low reported conscious content (Baars, 2002). In numerous experiments, these differences result in striking dissociations between subjective reports of “remember” vs. “know” types of retrieval.

-

Working memory. In cognitive working memory, the active operations of input, rehearsal, recall and report are conscious (Baddeley 1993). The contents of working memory prior to retrieval are not. A companion article (Baars & Franklin, 2003) describes how IDA, a large-scale model of GW theory, accounts for this evidence.

-

Availability heuristic. In decision making, people have a general tendency to prefer the first candidate solution that becomes conscious. This heuristic can lead to predictable patterns of errors (Kahneman, Slovic and Tversky 1982; Fiske and Taylor 1991). The IDA model can account for these patterns qualitatively.

-

Automaticity. Predictable skills tend to become more automatic, that is, less conscious, with practice (DeKeyser 2001). When such automatized skills repeatedly fail to accomplish their objectives, their details tend to become conscious again. The IDA model’s account of these processes is the subject of a separate article (Negatu, McCauley and Franklin, in review).
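The process dissociation estimate mentioned in the list above can be made concrete with the standard equations of Jacoby (1991), which rest on the assumption that conscious recollection (R) and unconscious familiarity (F) contribute independently. The Python sketch below illustrates that textbook procedure under this independence assumption; it is not part of the IDA model, and the function name and example rates are hypothetical.

```python
def process_dissociation(p_inclusion: float, p_exclusion: float) -> tuple[float, float]:
    """Estimate (R, F) from inclusion and exclusion report rates.

    Assumes Jacoby's (1991) independence model:
        P(inclusion) = R + F * (1 - R)
        P(exclusion) = F * (1 - R)
    where R is conscious recollection and F is unconscious familiarity.
    """
    if not 0.0 <= p_exclusion <= p_inclusion <= 1.0:
        raise ValueError("expected 0 <= P(exclusion) <= P(inclusion) <= 1")
    r = p_inclusion - p_exclusion  # conscious (controlled) component
    if r == 1.0:
        raise ValueError("F is undefined when R == 1")
    f = p_exclusion / (1.0 - r)  # unconscious (automatic) component
    return r, f


# Hypothetical rates: 60% of studied items reported under inclusion, 20% under exclusion.
r, f = process_dissociation(0.6, 0.2)
print(f"R = {r:.2f}, F = {f:.2f}")  # prints R = 0.40, F = 0.33
```

Subtracting the exclusion rate from the inclusion rate isolates R because, under the independence assumption, only unconsciously influenced items slip through exclusion instructions.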

With its finer-grained model of these processes, the IDA model (Franklin 2000a, 2001; Franklin & Graesser 2001; Franklin 2002) of global workspace theory (Baars 1988, 1993, 1997, 2002) offers hypotheses that suggest a simple account of several forms of human memory and their relationships with conscious events. This account addresses each of the issues raised in the previous section (see below). With this article, we hope to entice experimentalists to test our hypotheses. (See also Baars and Franklin 2003.) Here are the major hypotheses:

-

The Cognitive Cycle: Recall William James’ claim that the stream of conscious thought consists of momentary “flights” and somewhat longer “perches” of dwelling on a particular conscious event. Findings consistent with this claim have been reported by neuroscientists (Halgren et al 2002; Fuster et al 2000; Lehmann et al 1998; Freeman, 2003b) [1]. Much of human cognition functions by means of continual interactions between conscious contents, the various memory systems and decision-making. We call these interactions, as modeled in IDA, cognitive cycles. While these cycles can overlap, producing parallel actions, they must preserve the seriality of consciousness. The IDA model therefore suggests that conscious events occur as a sequence of discrete, coherent episodes separated by quite short periods of no conscious content (see also VanRullen and Koch 2003). Note that the “flights and perches” of normal consciousness may involve numerous cognitive cycles. A problem-solving task involving inner speech, for example, may extend over tens of seconds or minutes, according to careful thought-monitoring studies.

-

Transient Episodic Memory: Humans have a content-addressable, associative, transient episodic memory with a decay rate measured in hours (Conway 2001). In our theory, a conscious event is stored in transient episodic memory by a broadcast from a global workspace. A corollary to this hypothesis says that conscious contents can only be encoded (consolidated) in long-term declarative memory via transient episodic memory.

-

Perceptual Memory: A perceptual memory, distinct from semantic memory but storing some of the same contents, exists in humans and plays a central role in assigning interpretations to incoming stimuli. The conscious broadcast initiates and updates the process of learning to recognize and to categorize, both of which employ perceptual memory.

-

Procedural Memory: Procedural skills are shaped by reinforcement learning, operating by way of conscious processes over more than one cognitive cycle.

-

Consciousness: Conscious cognition is implemented computationally by way of a broadcast of contents from a global workspace, which receives input from the senses and from memory (Baars 2002).

-

Conscious Learning: Significant learning takes place via the interaction of consciousness with the various memory systems (e.g. Standing 1973; Baddeley, 1993). The effect size of subliminal learning is therefore small compared to the learning of conscious events, but significant implicit learning can occur by way of unconscious inferences based on conscious patterns of input (Reber et al, 1991). In the GW/IDA view, all memory systems rely on conscious cognition for their updating, either in the course of a single cycle or over multiple cycles.

-

Voluntary and Automatic Attention: In the GW/IDA model, attention is defined as the process of bringing contents to consciousness. Automatic attention may occur unconsciously and without effort, even during a single cognitive cycle (Logan 1992). Attention may also occur voluntarily and effortfully in a conscious, goal-directed way, over multiple cycles.

-

Voluntary and Automatic Memory Retrievals: Associations from transient episodic and declarative memory are retrieved automatically and unconsciously during each cognitive cycle. Voluntary retrieval from these memory systems may occur over multiple cycles, governed by conscious goals.
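Several of the hypotheses above describe one cognitive cycle as a fixed sequence of memory interactions. The following Python sketch is a toy illustration under loudly stated assumptions, not IDA's code: perceptual memory (PM) is a dictionary of interpretation strengths, transient episodic memory (TEM) is a list of past conscious contents, and the salience values, the 0.1 retrieval boost and the 0.5 learning increment are invented for the example. It shows two hypotheses in miniature: exactly one content wins the workspace per cycle, and only that broadcast content is stored in TEM and strengthens PM.

```python
from dataclasses import dataclass, field


@dataclass
class CycleSketch:
    """Toy cognitive cycle; names, constants and scoring rule are hypothetical."""

    pm: dict[str, float] = field(default_factory=dict)  # perceptual memory: interpretation -> strength
    tem: list[tuple[int, str]] = field(default_factory=list)  # transient episodic memory: (cycle, content)
    cycle: int = 0

    def run(self, candidates: dict[str, float]) -> str:
        """Run one cycle; `candidates` maps each interpretation to its bottom-up salience."""
        self.cycle += 1
        # Automatic, unconscious retrieval: each candidate cues TEM for earlier episodes.
        recalled = {c: sum(1 for _, e in self.tem if e == c) for c in candidates}
        # Competition: salience + PM strength + a small boost per recalled episode.
        activation = {c: s + self.pm.get(c, 0.0) + 0.1 * recalled[c] for c, s in candidates.items()}
        winner = max(activation, key=activation.get)  # only one conscious content per cycle
        # Conscious broadcast: the winner is written to TEM and strengthens its PM entry;
        # losing candidates leave no trace in either store.
        self.tem.append((self.cycle, winner))
        self.pm[winner] = self.pm.get(winner, 0.0) + 0.5
        return winner


agent = CycleSketch()
for _ in range(3):
    agent.run({"tone": 0.4, "flash": 0.6})
print(agent.pm, agent.tem)  # {'flash': 1.5} [(1, 'flash'), (2, 'flash'), (3, 'flash')]
```

In this toy the never-broadcast "tone" leaves no trace at all; the model's actual hypothesis is weaker, namely that learning without conscious broadcast has only a small effect.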

Global workspace theory is a cognitive architecture with an explicit role for consciousness. It makes the following assumptions:

-

That the brain may be viewed as a collection of distributed specialized networks (processors);

-

That consciousness is associated with a global workspace – a fleeting memory capacity whose focal contents are widely distributed (“broadcast”) to many unconscious specialized networks;

-

That some unconscious networks, called contexts, shape conscious contents (for example, unconscious parietal maps of the visual field modulate feature cells needed for conscious vision);

-

That such contexts may work together to jointly constrain conscious events;

-

That motives and emotions can be viewed as part of goal contexts;

-

That executive functions work as hierarchies of goal contexts.

A number of these functions have plausible brain correlates, and the theory has recently gathered considerable interest from cognitive neuroscience and philosophy (see Table 1).

| Quotation | Source |
|---|---|
| “Conscious contents provide the nervous system with coherent, global information.” | Baars 1983 |
| “Each specialized neural circuit enables the processing and mental representation of specific aspects of conscious experience, with the outputs of the distributed circuits integrated via a large-scale neuronal ‘workspace.’” (p. 161) | Cooney & Gazzaniga 2003 |
| “… time-locked multiregional retroactivation of widespread fragment records…can become contents of consciousness.” | Damasio 1989 |
| “We postulate that this global availability of information through the workspace is what we subjectively experience as the conscious state.” | Dehaene & Naccache 2001 |
| “Theorists are converging from quite different quarters on a version of the global neuronal workspace model of consciousness…” | Dennett 2001 |
| “When we become aware of something … it is as if, suddenly, many different parts of our brain were privy to information that was previously confined to some specialized subsystem. … the wide distribution of information is guaranteed mechanistically by thalamocortical and corticocortical re-entry, which facilitates the interactions among distant regions of the brain.” | Edelman & Tononi 2000 |
| “The activity patterns that are formed by the dynamics are spread out over large areas of cortex, not concentrated at points. Motor outflow is likewise globally distributed…. In other words, the pattern categorization (corresponds) to an induction of a global activity pattern.” | Freeman in press |
| “Evidence has been steadily accumulating that information about a stimulus complex is distributed to many neuronal populations dispersed throughout the brain.” | John et al 2001 |
| “… awareness of a particular element of perceptual information must entail not just a strong enough neural representation of information, but also access to that information by most of the rest of the mind/brain.” | Kanwisher 2001, p. 105 |
| “… the thalamus represents a hub from which any site in the cortex can communicate with any other such site or sites. … temporal coincidence of specific and non-specific thalamic activity generates the functional states that characterize human (conscious) cognition.” | Llinas et al 1998 |
| “The high resting brain activity is proposed to include the global interactions constituting the subjective aspects of consciousness.” | Shulman et al 2003 |
| “… the brain … transiently settling into a globally consistent state (is) the basis for the unity of mind familiar from everyday experience.” | F. Varela et al 2001, p. 237 |

Table 1. Globalist views of consciousness (adapted from Baars, 2002)
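The global workspace assumptions listed earlier (specialized processors, a broadcast of focal contents, and unconscious contexts that shape what becomes conscious) can be illustrated with a toy Python sketch. Everything in it is hypothetical: the class, the numeric bids standing in for activation, and the additive context bias are simplifications chosen for readability, not a description of any published implementation. Competing contents bid for the workspace, a goal context adds bias without itself being broadcast, and the single winner is sent to every registered specialist.

```python
from typing import Callable

# Hypothetical type: a specialist is an unconscious processor that receives broadcasts.
Specialist = Callable[[str], None]


class GlobalWorkspace:
    """Toy global workspace; the additive scoring rule is an illustrative assumption."""

    def __init__(self) -> None:
        self.specialists: dict[str, Specialist] = {}

    def register(self, name: str, handler: Specialist) -> None:
        self.specialists[name] = handler

    def compete_and_broadcast(self, bids: dict[str, float],
                              context_bias: dict[str, float]) -> str:
        # Contexts are never broadcast themselves; they only bias which content wins.
        scores = {c: a + context_bias.get(c, 0.0) for c, a in bids.items()}
        winner = max(scores, key=scores.get)
        # The single winning content is distributed to every registered specialist.
        for handler in self.specialists.values():
            handler(winner)
        return winner


received: list[tuple[str, str]] = []
gw = GlobalWorkspace()
for name in ("motor", "episodic", "language"):
    gw.register(name, lambda content, n=name: received.append((n, content)))

# A hypothetical driving goal context favors the red light over the louder phone.
print(gw.compete_and_broadcast({"red light": 0.5, "ringing phone": 0.6},
                               context_bias={"red light": 0.3}))  # prints red light
print(received)
```

Keeping contexts as a bias term rather than as bidders reflects the assumption that contexts shape conscious contents without becoming conscious themselves.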

We now turn to the software agent model of Global Workspace Theory (Baars 1988, 1997, 2002) that gave rise to the hypotheses listed above. IDA (Intelligent Distribution Agent) is an intelligent software agent (Franklin and Graesser 1997) developed for the US Navy (Franklin et al. 1998). At the end of each sailor's tour of duty, he or she is assigned to a new billet. This complex assignment process is called distribution. The Navy employs some 300 trained people, called detailers, full time to effect these new assignments. IDA facilitates this process by completely automating the role of the human detailer (Franklin 2001a). Communicating with sailors by email in unstructured English, IDA negotiates with them about new jobs, employing constraint satisfaction, deliberation and volition, eventually assigning a job constrained by both human and organizational requirements. The IDA software agent is currently up and running, and has matched the performance of the Navy’s human detailers.

The IDA model comprises the architecture and mechanisms of both the computational model (the currently implemented and running portions) and the overarching conceptual IDA model, some of which is yet to be implemented. The IDA model implements Baars’ global workspace theory of consciousness (1988, 1997, 2002), and is compatible with a number of other psychological theories (Baddeley 1993; Ericsson & Kintsch 1995; Gray 1995; Glenberg 1997; Barsalou 1999; Sloman 1999; Conway 2001).

Most computational models of cognitive processes are designed to predict experimental data. When successful, their structure and parameters serve to explain these data. These models are typically narrow in scope, though a few attempt to provide a single, unified mechanism for cognition (see for example Laird et al. 1987 or Anderson 1990).

IDA, on the other hand, is an intelligent software agent (Franklin and Graesser 1997) designed and developed for practical personnel work for the U. S. Navy. While the IDA model was not initially developed to reproduce experimental data, it is based on psychological theories and does generate hypotheses and qualitative predictions. But why should one give any credence to hypotheses derived from the IDA model? One argument is that IDA successfully implements much of global workspace theory, and there is a growing body of empirical evidence supporting that theory (Baars 2002, Dehaene et al 2003). IDA’s flexible cognitive cycle has also been used to analyze the relationship of consciousness to working memory at a fine level of detail, offering explanations of classical working memory phenomena such as the rehearsal of a telephone number in the “phonological loop” (Baars and Franklin 2003). There is also evidence from neurobiology in the form of “…hemisphere-wide, self-organized patterns of perceptual neural activity…” recurring aperiodically at intervals of 100 to 200 ms (Freeman 2003a, Freeman 2003b, Lehmann et al 1998, Koenig, Kochi and Lehmann 1998). In the present paper, hypotheses from the IDA model will be used to analyze several major findings of the existing memory literature in relation to conscious cognition (see the section on Basic Facts above). Other supporting evidence related to the frame rate is mentioned below, early in the section on IDA’s cognitive cycle and the memory hypothesis.

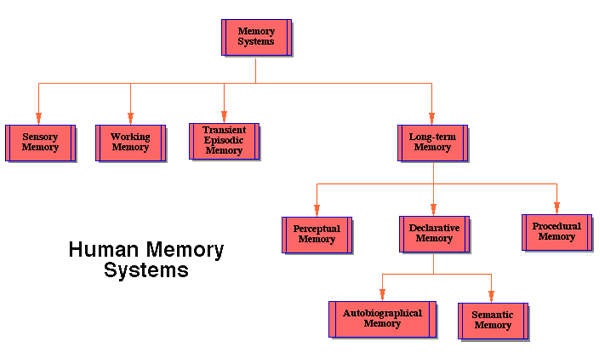

In this section, we will briefly discuss the various human memory systems that will play a role in the rest of the article. It will be helpful to the reader to specify here how we plan to use the various terms, as there isn’t always agreement in the literature. Figure 1 displays some of the relationships between the memory systems we’ll discuss.

Sensory memory holds relatively unprocessed incoming sensory data in sensory registers. It provides a workspace for integrating the features from which representations of objects and their relations are constructed. There are different sensory memory registers for different senses: iconic (visual), echoic (auditory), haptic (touch), and likely a separate sensory memory for integrating multimodal information. Sensory memory has the fastest decay rate, measured in hundreds of milliseconds.

Working memory is the manipulable scratchpad of the mind (Miyake and Shah 1999). It holds sensory data, both endogenous (for example, visual images and inner speech) and exogenous (sensory), together with their interpretations. Its decay rate is measured in seconds. Again, there are separate working memory components associated with the different senses, the visuo-spatial sketchpad and the phonological loop for example (Baddeley 1993; Franklin & Baars 2003). Also, there are long-term processing components of working memory (Ericsson and Kintsch 1995). Baars & Franklin (2003) have suggested that conscious input, rehearsal, and retrieval are necessary for the normal functions of working memory.

Figure 1. Human Memory Systems

Episodic or autobiographical memory is memory for events having features of a particular time and place (Baddeley, Conway and Aggleton 2001). This memory system is associative and content-addressable.

An unusual aspect of the IDA model is transient episodic memory (TEM), an episodic memory with a decay rate measured in hours. Though often assumed (Panksepp 1998, p. 129, assumes a “transient memory store”), the existence of such a memory has rarely been explicitly asserted (Donald 2001; Conway 2001; Baars and Franklin 2003). It will play a major role in the hypotheses about memory systems generated by the IDA model.

Humans are blessed with a variety of long-term memory types that decay exceedingly slowly, if at all. Memory researchers typically distinguish between procedural memory, the memory for motor skills including verbal skills, and declarative memory. In the IDA model, declarative memory (DM) is composed of autobiographical memory, described in a previous paragraph, and semantic memory, memories of fact or belief typically lacking a particular source with a time and place of acquisition. That is, semantic memories have lost their association with their original autobiographical source. DM is a single system within the IDA model. These declarative memory systems are accessed by means of specific cues from working memory. The IDA model hypothesizes that DM decays inversely with the strength of the memory traces (see Figure 5 for the general shape of the curve).

Though “perceptual memory” is often used synonymously with “sensory memory,” we follow Taylor (1999, p. 29) and use the term differently. Like semantic memory, perceptual memory is a memory for individuals, categories, and their relations. The IDA model distinguishes between semantic memory and perceptual memory (PM) and hypothesizes distinct mechanisms for each. According to the model, PM plays the major role in recognition, categorization, and more generally the assignment of interpretations, for example the recognition of a situation. Upon presentation of features of an incoming stimulus, PM returns interpretations. The content of semantic memory is hypothesized to be a superset of that of PM. This discussion essentially restates the most controversial part of our Perceptual Memory Hypothesis, the claim of distinct mechanisms for PM and semantic memory. Several types of evidence, of varying degrees of persuasiveness, support this dissociation.

We speculate that perceptual memory is evolutionarily older than TEM or declarative memory. The functions of perceptual memory (the recognition of food items, nest-mates, prey, predators, potential mates, etc.) seem almost universal among animals, including insects (Beekman et al 2002), fish (Kelley and Magurran 2003) and crustaceans (Zulandt Schneider et al 2001). Even mollusks learn to recognize odors (Watanabe et al 1998). While common (perhaps universal) in mammals (Chrobak and Napier 1992) and birds (Clayton et al 2000), and conjectured for all vertebrates (Morris 2001), the functions of episodic memory (memories of events) seem beyond the reach of most invertebrates (Heinrich 1984). This suggests that PM in humans may be evolutionarily older than TEM in humans, making it likely, though not at all certain, that they have different neural mechanisms. Since the contents of TEM consolidate into DM, which contains semantic memory, these facts suggest the possibility of separate mechanisms for PM and semantic memory.

One can also argue from the results of developmental studies. Infants who have not yet developed object permanence (TEM) are quite able to recognize and categorize. This argues, though not conclusively, for distinct systems for PM and semantic memory. In the same direction, Mandler (2000) distinguishes between perceptual and conceptual categorization in infants, the latter being based upon what objects are used for (see also Glenberg 1997). The IDA model would suggest PM involvement in perceptual categorization, while semantic memory would play the more significant role in conceptual categorization [2]. IDA also employs distinct mechanisms for PM and semantic memory.

Yet another line of empirical evidence comes from experiments with amnesics such as H.M. He has shown pattern priming effects, involving recognition of letters, words, shapes and objects, comparable to those of unimpaired subjects (Gabrieli et al 1990). These studies suggest that H.M. can encode into PM (recognition) but not into DM, including semantic memory (he has no memory of having seen the original patterns). These results are consistent with, and even suggest, distinct mechanisms.

Additional support for the dissociation of PM and semantic memory comes from another study of human amnesics (Fahle and Daum 2002). A half-dozen amnesic patients learned a visual hyperacuity task as well as control groups did, though their declarative memory was significantly impaired.

Our final, and quite similar, line of argument for distinct PM and semantic memory mechanisms comes from studies of rats in a radial arm maze (Olton, Becker and Handelman 1979). With four of the eight arms baited and four not (and none restocked), normal rats learn to recognize which arms to search (PM) and remember which arms they have already fed in (TEM), so as not to search there a second time. Rats whose hippocampal systems have been excised lose their TEM but retain PM, again suggesting distinct mechanisms.

In the recognition memory literature, dual-process models have been put forward proposing that two distinct memory processes, referred to as familiarity and recollection, support recognition (Mandler 1980, Jacoby and Dallas 1981). Familiarity allows one to recognize the butcher in the subway acontextually, as someone who is known, without recollecting the context of the butcher shop. In the IDA model, PM alone provides the mechanism for such a familiarity judgment, while both PM and DM are typically required for recollection. Recent brain imaging results from cognitive neuroscience support a dual-process model (Rugg and Yonelinas 2003) and so are compatible with our Perceptual Memory Hypothesis. (For an analysis, see the section on Recognition Memory below.)
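The dual-process account can be illustrated with the butcher example. In this hypothetical Python sketch, PM and DM are plain dictionaries and recognition requires an exact feature match, both simplifications of our own rather than IDA's mechanisms; the point is only the dependency the IDA model hypothesizes: a familiarity judgment needs PM alone, while recollecting the context additionally needs DM.

```python
from typing import Optional

# Hypothetical feature encoding: a set of perceived features.
Features = frozenset[str]


def recognize(features: Features,
              pm: dict[Features, str],
              dm: dict[str, str]) -> tuple[Optional[str], Optional[str]]:
    """Return (familiar identity, recollected context); either may be None."""
    # Familiarity: PM alone maps the perceived features to "someone known".
    identity = pm.get(features)
    if identity is None:
        return None, None
    # Recollection: the identity then cues DM for the context of earlier encounters.
    return identity, dm.get(identity)


# Made-up contents for the butcher example.
pm = {frozenset({"round face", "gray mustache"}): "the butcher"}
dm = {"the butcher": "seen behind the counter of the butcher shop"}
face = frozenset({"gray mustache", "round face"})
print(recognize(face, pm, dm))  # familiarity and recollection
print(recognize(face, pm, {}))  # familiarity only, as with impaired DM
```

Emptying the DM dictionary leaves familiarity intact, loosely analogous to the preserved pattern priming of the amnesic patients described above.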

Table 2 lays out the correspondence between the different types of memory discussed, their implementation, or planned implementation, in IDA, and some hypotheses concerning their neural basis. Sensory memory, procedural memory, perceptual memory, working memory, and transient episodic memory are each implemented in the IDA model by a distinct mechanism, while the two forms of declarative memory use yet another (single) mechanism. These different mechanisms were chosen to facilitate the implementation of the distinct roles they play in cognition according to the IDA model. We hypothesize that they each have distinct neural implementations in the brain, though not necessarily in different anatomical structures.

There is some evidence from neuroscience in support of various elements of these hypotheses. As mentioned above, experiments with rats learning to search properly for food in an eight-armed maze support the role of the hippocampal system in transient episodic memory, but not in perceptual memory (Olton, Becker and Handelman 1979). Also, an eye blink in response to a tone can be classically conditioned without appeal to the hippocampus if the unconditioned stimulus (puff of air) immediately follows the conditioned stimulus (tone). If the unconditioned stimulus is delayed, the hippocampus (transient episodic memory) is needed (Lavond, Kim and Thompson 1993). An fMRI study indicates a dissociation between possible neural correlates of PM and TEM: “Engagement of hippocampal and parahippocampal computations during learning correlated with later source recollection, but not with subsequent item recognition. In contrast, encoding activation in perirhinal cortex correlated with whether the studied item would be subsequently recognized, but failed to predict whether item recognition would be accompanied by source recollection.” (Davachi, Mitchell & Wagner 2003).

| Memory type | Implementation in IDA | Neural correlates |
|---|---|---|
| Sensory Memory | Perceptual Workspace | Temporal-prefrontal Neocortex (echoic) (Alain, Woods & Knight 1998) |
| Procedural Memory | Codelets | |
| Perceptual Memory | Slipnet | Perirhinal Cortex (Davachi, Mitchell & Wagner 2003) |
| Working Memory | Workspace | Temporo-parietal and frontal lobes (Baddeley 2001) |
| Transient Episodic Memory | Sparse Distributed Memory with “I don’t care” (*) and decay | Hippocampal System (Shastri 2001, 2002; Panksepp 1998, p. 129) |
| Declarative Memory | Sparse Distributed Memory with “I don’t care” | Neocortex |

(*) Kanerva’s Sparse Distributed Memory is modeled in binary space: both the addresses and the contents are binary. To accommodate flexible cuing with fewer features than the actual memory trace, IDA’s TEM and DM use a modified SDM set in a ternary memory space. The content space is ternary (0’s, 1’s and “don’t cares”), while the addresses of the hard locations remain binary.

Table 2. Memory Implementation.
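The footnote's “don't care” cuing can be illustrated with a distance measure that skips unspecified positions. What follows is a minimal sketch under our own assumptions, not IDA's code. Cues are strings over `0`, `1` and `*`, where `*` stands for “don't care”. Hard-location addresses are binary strings. The function name `ternary_distance` is hypothetical.

```python
def ternary_distance(cue: str, address: str) -> int:
    """Hamming distance counted only over the cue's specified bits.

    Positions where the cue holds '*' ("don't care") never add to the
    distance, so a partial cue can still lie close to a full binary address.
    """
    if len(cue) != len(address):
        raise ValueError("cue and address must have the same length")
    return sum(1 for c, a in zip(cue, address) if c != "*" and c != a)


address = "10110010"
print(ternary_distance("1011****", address))  # only the first four bits are compared
print(ternary_distance("0*11***1", address))  # bits 0 and 7 differ from the address
```

A cue that specifies only half of the features can therefore be at distance zero from a stored address. This is what lets TEM and DM be cued with fewer features than the original trace.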

Transient episodic and declarative memories have distributed representations in IDA. There is evidence that this is also the case in the nervous system. In IDA, these two memories are implemented computationally using a modified version of Kanerva’s Sparse Distributed Memory (SDM) architecture (Kanerva 1988, Ramamurthy, D'Mello and Franklin 2004 to appear).

SDM models a random access memory that has an address space and a set of allowable addresses. The address space considered is enormous, of the order of $2^{1000}$. From this space, one chooses a uniform random sample, say $2^{20}$, of locations. These are called hard locations. Thus the hard locations are sparse in this address space. Many hard locations participate in storing and retrieving any datum, resulting in the distributed nature of this architecture.
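These sparseness figures can be checked with a short calculation, as can the figure of roughly 1000 hard locations per access sphere given below. The Hamming distance from a fixed address to a random 1000-bit address is binomially distributed with n = 1000 and p = 1/2. The fraction of the space inside a sphere of radius r is therefore a binomial tail. The radius of 451 bits is our assumption, not a value stated in the text. The sketch below shows that it places roughly one thousandth of the space inside each sphere.

```python
from math import comb

N_BITS = 1000      # length of addresses and data, as in the text
N_HARD = 2 ** 20   # hard locations sampled from the 2**1000 possible addresses
RADIUS = 451       # assumed access-sphere radius (Hamming distance)


def sphere_fraction(n_bits: int, radius: int) -> float:
    """Fraction of all n-bit addresses within `radius` bits of a fixed address."""
    inside = sum(comb(n_bits, k) for k in range(radius + 1))
    return inside / 2 ** n_bits  # exact big-integer ratio converted to float


fraction = sphere_fraction(N_BITS, RADIUS)
expected_hard = fraction * N_HARD
print(f"fraction of the address space in one sphere: {fraction:.5f}")
print(f"expected hard locations per sphere: {expected_hard:.0f}")
```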

Each hard location is a bit vector [3] of length 1000, storing data in 1000 counters, each with a range of -40 to 40. Each datum to be written to SDM is a bit vector of length 1000. Writing a 1 to a counter increments it, while writing a 0 decrements it. To write to location X, we use the access sphere [4] centered at X; any hard location within this sphere is accessible from X. So, to write a datum to X, we simply write it to all the hard locations (roughly 1000 of them) within X’s access sphere. This results in distributed storage. It also provides naturally for memory rehearsal: a memory trace being rehearsed can be written many times, each time to about 1000 locations.
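A scaled-down sketch of the write operation follows. It is illustrative only. The vector length (256 bits), the number of hard locations (2000), the access-sphere radius (112) and the random seeds are our assumptions. We chose them so that the example runs quickly while each sphere still holds a few dozen hard locations. The class name `SDMWriter` is hypothetical. The counter range of -40 to 40 is taken from the text.

```python
import random
from typing import List

N_BITS = 256       # scaled down from the 1000 bits in the text (assumption)
N_HARD = 2000      # scaled down from 2**20 hard locations (assumption)
RADIUS = 112       # assumed access-sphere radius at this scale
COUNTER_MAX = 40   # counters range over [-40, 40], as in the text


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two bit vectors (stored as ints) differ."""
    return bin(a ^ b).count("1")


class SDMWriter:
    """Write side of a small Sparse Distributed Memory (illustrative sketch)."""

    def __init__(self, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Hard locations: a uniform random sample of the address space.
        self.addresses = [rng.getrandbits(N_BITS) for _ in range(N_HARD)]
        # One counter per bit per hard location, all starting at zero.
        self.counters = [[0] * N_BITS for _ in range(N_HARD)]

    def sphere(self, x: int) -> List[int]:
        """Indices of the hard locations inside the access sphere around x."""
        return [i for i, a in enumerate(self.addresses) if hamming(a, x) <= RADIUS]

    def write(self, x: int, datum: int) -> int:
        """Write datum to every hard location in x's sphere; return how many."""
        hits = self.sphere(x)
        for i in hits:
            row = self.counters[i]
            for k in range(N_BITS):
                # Writing a 1 increments the kth counter; writing a 0 decrements it.
                step = 1 if (datum >> k) & 1 else -1
                row[k] = max(-COUNTER_MAX, min(COUNTER_MAX, row[k] + step))
        return len(hits)


# Rehearsal: write the same trace three times at its own address.
mem = SDMWriter(seed=1)
trace = random.Random(2).getrandbits(N_BITS)
written_to = [mem.write(trace, trace) for _ in range(3)]
first = mem.sphere(trace)[0]
print(written_to, mem.counters[first][:8])
```

Rehearsal shows up directly in the counters. After three writes of the same trace, every counter in its access sphere sits at +3 or -3. Enough further writes saturate the counters at the ±40 bounds.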

To read from location Y, we first compute the bit vector read at each hard location. Its kth bit is assigned the value 1 or 0 according to whether that location’s kth counter is positive or negative. Each bit so read is a majority-rule decision over all the data that have been written to that location. The result is an aggregate of those data, but may not be exactly any one of them. As with writing, retrieval uses the access sphere. We read all the hard locations within the access sphere of Y and pool the bit vectors read from them. Their kth bits then take a majority vote to determine the kth bit of the datum read at Y. In effect, the memory trace is reconstructed in every retrieval operation.
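The two-stage majority rule for reading can be written as a pure function over the counters of the hard locations in an access sphere. This sketch rests on two assumptions of ours that the text leaves open. First, a counter that is exactly zero abstains, because a hard location that has never been written to has no opinion. Second, a tied or empty vote yields 0. The function names are hypothetical.

```python
from typing import List, Optional


def location_bits(counters: List[int]) -> List[Optional[int]]:
    """Bit vector read at one hard location: 1 for a positive counter, 0 for a
    negative one, None (abstain) for a counter that is exactly zero."""
    return [1 if c > 0 else 0 if c < 0 else None for c in counters]


def sdm_read(sphere_counters: List[List[int]]) -> List[int]:
    """Pool the bit vectors read at every hard location in the access sphere
    and take a majority vote for each bit; ties and empty votes give 0."""
    if not sphere_counters:
        raise ValueError("the access sphere contains no hard locations")
    votes = [location_bits(c) for c in sphere_counters]
    result = []
    for k in range(len(sphere_counters[0])):
        ones = sum(1 for v in votes if v[k] == 1)
        zeros = sum(1 for v in votes if v[k] == 0)
        result.append(1 if ones > zeros else 0)
    return result


print(sdm_read([[3, -2, 0, 5], [1, 4, -1, -2], [-2, 1, 0, 3]]))
```

Consider three locations whose counters are [3, -2, 0, 5], [1, 4, -1, -2] and [-2, 1, 0, 3]. The pooled read is [1, 1, 0, 1], which differs from the bit vector read at each individual location. The datum read is an aggregate rather than any one stored vector.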

This memory can be cued with noisy versions of the original memory trace. To accomplish this, we employ iterated reading. First we read at X to obtain the bit vector X1, then read at X1 to obtain X2, then at X2 to obtain X3, and so on. If this sequence of reads converges to Z, then Z is the result of iterated reading at X. Convergence happens very rapidly in this architecture. Divergence shows up as an iterated read that bounces randomly around the address space.
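Iterated reading from a noisy cue can be sketched by combining the write and read operations in one small memory. Several details are our assumptions: the scale (256-bit vectors, 2000 hard locations, radius 112), the abstention of zero counters, and autoassociative storage, in which each trace is written at its own address. The 16-bit noise and the iteration limit are also assumed. The class name `SDM` is hypothetical. A run that reaches no fixed point within the limit returns `None`, which stands in for divergence.

```python
import random
from typing import List, Optional

N_BITS = 256       # scaled-down vector length (assumption)
N_HARD = 2000      # scaled-down number of hard locations (assumption)
RADIUS = 112       # assumed access-sphere radius
COUNTER_MAX = 40   # counter range [-40, 40], as in the text


def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")


class SDM:
    def __init__(self, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.addresses = [rng.getrandbits(N_BITS) for _ in range(N_HARD)]
        self.counters = [[0] * N_BITS for _ in range(N_HARD)]

    def _sphere(self, x: int) -> List[int]:
        return [i for i, a in enumerate(self.addresses) if hamming(a, x) <= RADIUS]

    def write(self, x: int, datum: int) -> None:
        for i in self._sphere(x):
            row = self.counters[i]
            for k in range(N_BITS):
                step = 1 if (datum >> k) & 1 else -1
                row[k] = max(-COUNTER_MAX, min(COUNTER_MAX, row[k] + step))

    def read(self, y: int) -> int:
        """Per-bit majority over the signs of the counters in y's sphere;
        zero counters abstain and ties resolve to 0 (assumptions)."""
        ones = [0] * N_BITS
        zeros = [0] * N_BITS
        for i in self._sphere(y):
            for k, c in enumerate(self.counters[i]):
                if c > 0:
                    ones[k] += 1
                elif c < 0:
                    zeros[k] += 1
        return sum(1 << k for k in range(N_BITS) if ones[k] > zeros[k])

    def iterated_read(self, x: int, max_iter: int = 10) -> Optional[int]:
        """Read at x, then at the result, and so on; return the fixed point Z,
        or None if none is reached within max_iter reads."""
        current = x
        for _ in range(max_iter):
            nxt = self.read(current)
            if nxt == current:
                return current
            current = nxt
        return None


rng = random.Random(42)
mem = SDM(seed=7)
traces = [rng.getrandbits(N_BITS) for _ in range(3)]
for t in traces:
    mem.write(t, t)  # autoassociative storage (assumption)

target = traces[0]
noisy = target
for k in rng.sample(range(N_BITS), 16):
    noisy ^= 1 << k  # flip 16 distinct bits to make a noisy cue

recovered = mem.iterated_read(noisy)
print("cue distance:", hamming(noisy, target), "recovered:", recovered == target)
```

Only three traces are stored, so the sphere around the noisy cue overlaps the stored trace's sphere substantially. The first read therefore typically lands on the trace, and the next read confirms it as a fixed point.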

The SDM architecture has several similarities to human memory (Kanerva 1988) and provides for “reconstructed memory” in its retrieval process.

IDA functions by means of flexible, serial but overlapping cycles of activity that we refer to as cognitive cycles. The overarching hypothesis, the Cognitive Cycle Hypothesis, emerges from the IDA model. This hypothesis claims that human cognition functions by means of similar flexible cognitive cycles. Each cycle takes approximately 100-200 milliseconds but, because cycles overlap, they may occur at a rate of five to ten or more per second. Citing evidence from Thompson et al. (1996) and from Skarda and Freeman (1987), Cotterill speaks of “… the time usually envisioned for an elementary cognitive event … about 200 ms” (2003). From our cognitive cycle hypothesis, it might seem reasonable to call one such cycle an elementary cognitive event. Freeman (1999) suggests that conscious events succeed one another at a “frame rate” of 6 Hz to 10 Hz, as would be expected from our cognitive cycle hypothesis (see also Freeman 2003b). The rate of such cycles coincides roughly with that of other, perhaps related, biological cycles such as saccades (Steinman, Kowler and Collewijn 1990), systematic motor tremors [5], and vocal vibrato (Seashore 1967). Could these hypothesized cycles be related to hippocampal theta waves (at 6-9 Hz) with gamma activity superimposed on them (VanRullen and Koch 2003)?

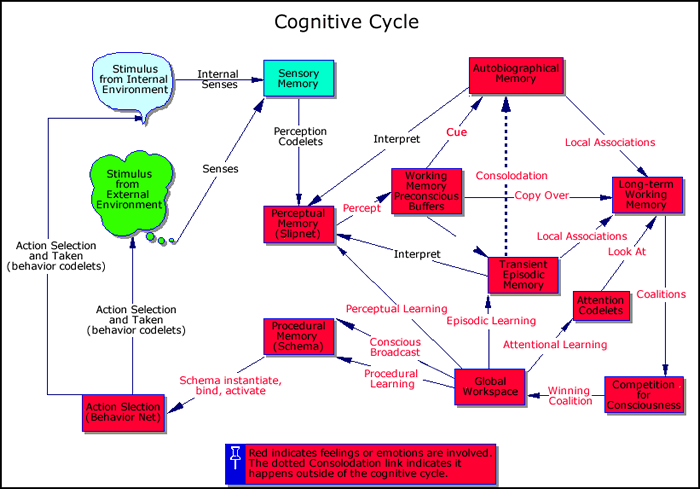

The cognitive cycle is so named because it specifies the functional roles of memory, consciousness and decision-making in cognition, according to global workspace theory. We'll next explore the cognitive cycle in detail (see Figure 2) to show the interactions between conscious events and memory systems.

Step 1: Though in humans the cognitive cycle would most often begin with an expectation-driven action pointing sensors in a certain direction, here we will begin its description with incoming sensations. In Step 1 of the cycle, sensory stimuli, external or internal, are received and interpreted by IDA’s (preconscious) perception module, where recognition and categorization take place, and interpretations are assigned. Sensory memory facilitates this process by providing a workspace within which potential interpretation structures can be constructed and evaluated (Hofstadter and Mitchell 1994). In humans, sensory memory decay time is measured in milliseconds.

Specialized feature detectors (perception codelets in IDA) descend on the incoming sensation in sensory memory. Those that find features (bits of meaning, single chunks) relevant to their specialty activate appropriate nodes in perceptual memory. The decision as to which interpretations (recognitions, categorizations, ideas, meanings) to assign is made by perceptual memory (PM). In IDA, PM is implemented by a slipnet, an activation passing semantic net. The slipnet settles into the various interpretations, represented by its nodes (Hofstadter and Mitchell 1994; Zhang et al 1998). There is recent empirical support for this kind of two-part process during word recognition (Pelli, Farell and Moore 2003). The existence of a separate, long-term, perceptual memory with its own distinct mechanism and updating procedure is a major construct of the IDA model.

Though interpretations are assigned during each cycle, the full meaning of a particular stimulus, say a sentence, may accumulate only over several cycles. The activation of nodes in PM decays, but it does so at such a rate that interpretations acquired during one cycle are still somewhat available during the next few cycles (Tanaka and Sagi 1998). Thus, we should think of this preconscious perceptual process as an iterative one.

Step 2: In Step 2, the (still unconscious) percept, containing some of the data plus its initial interpretations, is stored in one or more of the preconscious buffers of working memory (WM) (Baddeley 1993). In humans, these buffers may include the (preconscious) visuo-spatial sketchpad, the (preconscious) phonological loop, and possibly others. The decay rate of WM in humans is measured in seconds. Its capacity is typically taken to be quite small. We hypothesize, however, that this limitation results from the limited capacity of consciousness rather than of the working memory buffers. This is the WM Limitation Hypothesis [6].

Step 3: In Step 3 of the cycle, the IDA model employs two different associative memories, each implemented, or being implemented, with a distinct, enhanced version of Kanerva’s Sparse Distributed Memory (Kanerva 1988, Anwar et al. 1999, Anwar and Franklin 2003, Franklin 2001b), and subject to different decay rates. Declarative memory (DM), including both semantic memory and autobiographical memory, decays exceedingly slowly, if at all. Decades-old memories of distinctive events are routinely retrieved (Bahrick, 1984). The capacity of this memory is very large. We speak of DM, rather than of long-term memory, in order to exclude both procedural memory and perceptual memory (PM), because all three are implemented so differently in IDA. Their differences in function lead us to hypothesize that they are implemented differently neurally as well.

It is well established that, in content-addressable associative memories, interference results when similar specific events over time become merged into a general event, blurring their details (Chandler 1991, Lenhart and Freeman 1995, Lindsay and Read 1995). Thus, long-term associative memory cannot be counted on to help with the recall of where I parked my car in the parking garage this morning or what I had for lunch yesterday. These events are much too similar to a myriad of earlier such events. Yet such recall is essential for cognitive functioning. I need to know where to find my car. To solve this problem, the IDA model makes use of a transient episodic memory (TEM) implemented as a content-addressable associative memory, with a decay rate slower than that of WM but orders of magnitude faster than that of DM. For different empirical reasons, Conway postulates a similar TEM for humans, with a decay rate measured in hours or perhaps a day and a sizable capacity (2001). Donald also assumes a quite similar TEM to which he refers as intermediate-term working memory (2001), while Panksepp speaks of a “transient memory store” (1998, page 129). The existence of such a transient episodic memory in humans is a key hypothesis, the TEM Hypothesis, emerging from the IDA model. TEM is implemented in the IDA model with a version of Sparse Distributed Memory.

Figure 2. IDA’s Cognitive Cycle. In the animated version, each cycle step is highlighted for some seconds.

Using the incoming percept and the residual contents of the preconscious buffers as cues, local associations are retrieved from TEM and from DM during Step 3. These associations, together with the contents of the preconscious buffers of working memory, in humans would constitute Ericsson and Kintsch’s long-term working memory (LTWM) (1995), or Baddeley’s episodic buffer (2000), yielding another hypothesis from the IDA model. The IDA model predicts that LTWM uses working memory’s preconscious buffers with their built-in rapid decay rates. Thus, in any cycle, LTWM will contain both new local associations and those from previous cycles that haven’t yet decayed. In the IDA model, LTWM reuses the preconscious buffers of WM for its own storage and decays at the same rate as WM. We refer to these latter assertions for humans as the LTWM Hypothesis.

Step 4: In Step 4 of the cognitive cycle (see above), we explicitly meet codelets for the first time [7]. The term is taken from the Copycat Architecture (Hofstadter and Mitchell 1994). Codelets in IDA are small pieces of code running as independent threads, each specialized for some relatively simple task. They often play the role of demons [8], waiting for a particular situation to occur in response to which they should act. Codelets in IDA implement the specialized processors of global workspace theory. Codelets also correspond more or less to Jackson’s demons (1987), Minsky’s agents (1985), and Ornstein’s small minds (1986). Edelman’s neuronal groups (1987) may well implement some codelets in the brain. Codelets come in a number of varieties, each with different functions to perform, as we will see. Perceptual codelets would have already participated in Step 1 above. Behavior codelets, the behaviors composed of them, and the behavior streams made up of behaviors will appear in Steps 7 and 8 below.

The task of attention codelets in IDA is to bring content to consciousness. In humans, this content may include constructed sensory images, feelings, emotions, memories, intuitions, ideas, desires, goals, etc. Among these attention codelets are expectation codelets, spawned by active behaviors (see below), that attempt to track the results of current actions or the lack thereof. Another example of an attention codelet is an intention codelet, one of which is spawned each time a volitional goal is selected. An intention codelet attempts to bring to consciousness any content that would be relevant to its goal (Franklin 2000b). In Step 4 of the cycle, these attention codelets view LTWM. Motivated by novelty, unexpectedness, relevance to a goal, emotional content, etc., some of these attention codelets gather information codelets, form coalitions with them, and actively compete to bring their contents to consciousness (Bogner 1999; Bogner, Ramamurthy and Franklin 2000a; Franklin 2001b). Attention codelets are activated in proportion to the extent that their preferences are satisfied. This competition for consciousness may also include coalitions formed by attention codelets from a recently preceding cycle. The activation of such previously unsuccessful coalition members decays, making it more difficult, but not impossible, for their coalition to compete with newer arrivals. Thus, we have a recency effect. The contents of unsuccessful coalitions remain in the preconscious buffers of LTWM until they decay away, and can take part in future competitions for consciousness.
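The competition for consciousness, including the recency effect, can be sketched as follows. This is a simplification under our own assumptions. Each coalition carries a single activation number, standing in for how well its attention codelet's preferences are satisfied. Losing coalitions decay by a fixed factor per cycle. Coalitions whose activation falls below a floor are dropped from the preconscious buffers. `DECAY`, `FLOOR`, `Coalition` and `compete` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional

DECAY = 0.7   # assumed per-cycle decay factor for coalitions that lose
FLOOR = 0.05  # assumed activation below which a coalition has decayed away


@dataclass
class Coalition:
    content: str
    activation: float  # stands in for how well the attention codelet's preferences are met


def compete(pending: List[Coalition], arrivals: List[Coalition]) -> Optional[Coalition]:
    """One cycle of the competition for consciousness (simplified sketch).

    `pending` holds coalitions that lost earlier cycles, already decayed.
    The winner leaves the pool; every loser decays and stays for later cycles
    unless its activation falls below FLOOR.
    """
    pending.extend(arrivals)
    if not pending:
        return None
    winner = max(pending, key=lambda c: c.activation)
    pending[:] = [c for c in pending if c is not winner]
    for c in pending:
        c.activation *= DECAY
    pending[:] = [c for c in pending if c.activation >= FLOOR]
    return winner


pool: List[Coalition] = []
w1 = compete(pool, [Coalition("phone ringing", 0.9), Coalition("sentence being written", 0.8)])
w2 = compete(pool, [Coalition("unexpected result", 0.6)])
w3 = compete(pool, [])
print(w1.content, "|", w2.content, "|", w3.content)
```

The coalition about the sentence being written loses the first cycle to the phone. In the second cycle it loses to a newer coalition that it would have beaten without decay. It wins in the third cycle, once nothing stronger is pending. Decay makes its success harder but not impossible.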

IDA’s mechanism for the competition for consciousness agrees quite nicely with Gray’s neuroscience-based comparator model, in which top-down and bottom-up processes interact to produce the contents of consciousness (1995, 2002). Attention codelets, and in particular the expectation codelets, constitute the top-down processes, while the information codelets from LTWM account for the bottom-up processes. Attention codelets are top-down in the sense that they are often instantiated by IDA’s action selection mechanism, that is, by goal-context hierarchies. Information codelets are bottom-up in that they carry information derived from incoming exogenous or endogenous stimuli or from some of the various forms of memory, WM, TEM, and DM.

Within a single cognitive cycle, the bringing of contents to consciousness is automatic. Existing attention codelets form coalitions and compete for access to the global workspace, i.e., to consciousness. Over multiple cycles, however, the IDA model allows for conscious, voluntary attention. For example, while I am writing the phone rings. Shall I answer it or let the machine screen the call? A voluntary decision is taken to answer the call (Franklin 2000b), producing an intention to direct attention to a particular incoming stimulus. Each such voluntary decision spawns an attention codelet. In this case, the codelet will likely bring the intention to consciousness, setting in motion the instantiation of a goal context hierarchy intent on answering the phone. (See Steps 6 and 7 below for details on how this happens.)

Step 5: In Step 5 of the cognitive cycle, a single coalition of codelets, typically composed of one or more attention codelets and their covey of relevant information codelets, wins the competition, gains access to the global workspace, and has its contents broadcast to all the codelets in the system. This broadcast is hypothesized to be a necessary condition for conscious experiences as reported in scientific studies (Baars, 1988, 2002). This “global access” broadcast step embodies the major hypothesis of global workspace theory, the linking of the broadcast with conscious cognition. That hypothesis has been supported by a number of recent neuroscience studies (Dehaene 2001; Dehaene and Naccache 2001; Baars 2002; Cooney and Gazzaniga 2003).

The IDA model leads us to hypothesize that the conscious broadcast also results in the current contents of consciousness being stored in transient episodic memory. The model also claims that, at recurring times not part of a cognitive cycle, the undecayed contents of transient episodic memory are consolidated into long-term declarative memory. This suggests in humans a location of TEM in the hippocampal system (Shastri 2001, 2002).

In addition to broadcasting the contents of consciousness to the processors (codelets) in the system and storing them in TEM, the IDA model hypothesizes a third event occurring during Step 5 of the cognitive cycle. Perceptual memory (the slipnet and its perceptual codelets in IDA) is updated using information from the contents of consciousness. Details of this process will be discussed in the section entitled “The Learning of Interpretations” below.

Also, the IDA model motivates us to hypothesize that earlier actions are shaped by reinforcement derived from the current contents of consciousness. This updating of procedural memory will be described in the section entitled “Procedural Learning” to be found below.

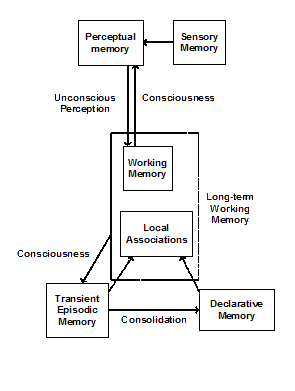

The IDA model suggests the following picture of the relationships between the various types of memory we have discussed (see Figure 3). A preconscious percept is stored in WM. It consists of a selection of the contents of sensory memory, together with recognitions, categorizations and other interpretations produced in PM. Only the conscious portion of the contents of WM (actually LTWM) is stored in TEM. Information from the same conscious content is used to update PM, TEM, and procedural memory. The undecayed contents of TEM are consolidated into DM at some other time. Retrieval from the content-addressable, associative TEM and DM memories uses recently stored unconscious contents of WM as cues. This account has three consequences. Contents can only be stored in DM via consciousness acting through TEM. New interpretations are learned in PM only via updating from conscious contents. Skills are shaped only via conscious intervention.

Thus, a primary function of consciousness is to store relevant information in PM and in DM, and to shape skills in procedural memory. For this purpose, the system employs a sequence of filters. The first filter selects from the internal and/or external sensory stimuli, and the interpretations derived from them, to produce the preconscious percept. This process involves sensory memory and PM [9]. The second filter uses this percept and the residual content of WM to select local associations from the vast store in DM and from the much smaller store in TEM. The third filter selects the next contents of consciousness from among the various competing coalitions, considering relevance, importance, urgency, insistence, etc.

The remaining four steps of the cognitive cycle bear on this account of memory because they produce the actions that are later shaped by conscious contents from subsequent cycles.

Step 6: In Step 6 of the cycle, the recruiting function of consciousness, the central hypothesis of global workspace theory, is implemented. Relevant behavior codelets respond to the conscious broadcast. These are typically codelets whose variables can be bound (assigned a value) from information in the conscious broadcast. If the successful attention codelet(s) was an expectation codelet announcing an unexpected result from a previous action, the responding codelets may be those some of whose actions can help to rectify the unfulfilled expectation.

Step 7: In Step 7, some responding behavior codelets instantiate appropriate behavior streams (goal context hierarchies), if suitable ones are not already in place (Negatu & Franklin 2002). They also bind variables, and send activation to behaviors. Here, we assume that there is such a behavior codelet and behavior stream. If not, then non-routine problem solving is called for. This uses additional mechanisms, executing a partial planning algorithm (Gerevini and Schubert 1996) implemented as a behavior stream over multiple cycles. (This part of the IDA conceptual model is, as yet, unpublished.)

Figure 3. Relationships between some of the various memory systems

Step 8: In Step 8, the behavior net (Negatu & Franklin 2002) chooses a single behavior and executes it. This choice may come from the just instantiated behavior stream or from a previously active stream. The choice is affected by internal motivation (activation from drives and goals as implemented by feelings and emotions); by the current situation, external and/or internal (environmental activation); by the relationship between the behaviors (the connections in the behavior net); and by the activations of the various behaviors. (The pervasive influence of feelings and emotions on the various memory systems and on action selection is another part of the IDA conceptual model (Franklin and McCauley 2004).)

Step 9: Action is taken in Step 9, the final step of the cycle. The execution of a behavior results in the behavior’s underlying behavior codelets performing their specialized tasks, which may have external or internal consequences. This part of the process is IDA taking an action. The acting codelets also include at least one expectation codelet (see Step 4) whose task is to monitor the action taken and to try to bring its results to consciousness, particularly any failure.

Though semantic memory, a part of declarative memory (DM), and perceptual memory (PM) have quite similar content, their functions and processes are different. In the IDA model, PM is implemented with an activation-passing semantic net called a slipnet (Hofstadter and Mitchell 1994), while DM is implemented using an expanded version of sparse distributed memory (Kanerva 1988; Anwar, Dasgupta and Franklin 1999; Anwar and Franklin 2003). These distinct mechanisms were chosen to reflect the respective different functions of each type of memory. DM is accessed in Step 3 of the cognitive cycle to retrieve local associations with the current contents of the WM buffers. PM, on the other hand, is the critical element in the process of assigning interpretations to sensory input in Step 1. These quite distinct functions lead us to hypothesize distinct neural mechanisms for DM and PM in humans.

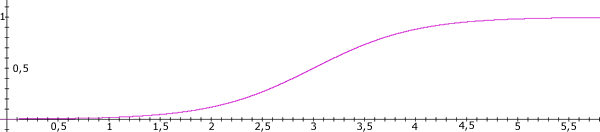

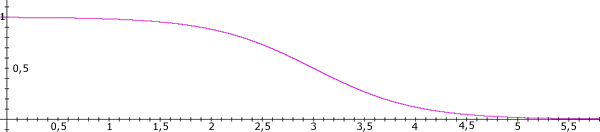

Figure 4. Base-level activation curve of slipnet nodes. This and other such figures are included only for the general shape of the curve. The units are arbitrary.

PM is centrally involved in the processes of recognition, categorization, and concept formation. In the IDA model, recognition, categorization and the assignment of other interpretations happens in the slipnet. In Step 1 of the cognitive cycle, perceptual codelets search incoming stimuli, internally or externally generated, for features of interest to their special purpose. Those finding such features activate appropriate nodes in the slipnet. Activation in the slipnet spreads from node to node along relationship links. Eventually, the slipnet’s state settles into a relatively stable pattern of activity. Nodes that are highly active at this point provide the interpretations assigned to the incoming stimuli. In Step 2 of the cognitive cycle, these interpretations (recognitions, categorizations, and others), together with some of the incoming data, are stored into WM buffers. The slipnet implements PM in the IDA model.
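The retrieval side of PM just described can be sketched in a few lines. Codelets inject activation, activation spreads along weighted links, the net settles, and a threshold picks out the interpretations. The update rule, the `spread` fraction, the activation cap of 1.0 and the threshold are illustrative assumptions, not the slipnet's actual parameters. The node names reuse the butcher example from the discussion of familiarity.

```python
from typing import Dict, List, Tuple

Links = Dict[str, List[Tuple[str, float]]]  # node -> [(neighbor sending to it, link weight)]


def settle(base: Dict[str, float], links: Links, inputs: Dict[str, float],
           spread: float = 0.5, tol: float = 1e-6, max_steps: int = 200) -> Dict[str, float]:
    """Spread activation until the net reaches a (near) stable pattern.

    Each step, a node's activation is its base level, plus activation from
    perceptual codelets, plus `spread` times the weighted activation arriving
    over its links, capped at 1.0 (illustrative rule).
    """
    act = {n: base[n] + inputs.get(n, 0.0) for n in base}
    for _ in range(max_steps):
        new = {}
        for n in base:
            incoming = sum(w * act[m] for m, w in links.get(n, []))
            new[n] = min(1.0, base[n] + inputs.get(n, 0.0) + spread * incoming)
        if max(abs(new[n] - act[n]) for n in base) < tol:
            return new
        act = new
    return act


def interpretations(act: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Highly active nodes supply the interpretations assigned to the stimulus."""
    return sorted(n for n, a in act.items() if a >= threshold)


base = {"butcher": 0.1, "meat": 0.1, "shop": 0.1, "subway": 0.1}
links = {"butcher": [("meat", 0.8), ("shop", 0.6)],
         "meat": [("butcher", 0.8)],
         "shop": [("butcher", 0.6)]}
inputs = {"butcher": 0.3, "meat": 0.2}  # activation from perceptual codelets
act = settle(base, links, inputs)
print(interpretations(act))
```

With these inputs, the net settles with butcher at about 0.73 and meat at about 0.59, so those two nodes supply the interpretations. Shop (about 0.32) is active but below threshold, and subway stays at its base level.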

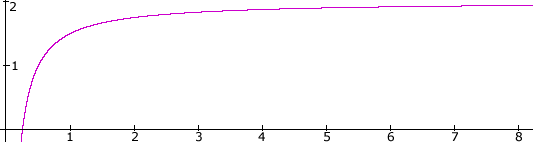

We’ve just described the process by which information is retrieved from PM in the IDA model. How is it stored (Gilbert, Sigman, and Crist 2001)? To answer this question, we need more detailed knowledge of the structure of the slipnet. Each node has a base-level activation analogous to the at-rest firing rate of a neuron. Additional activation provided by perceptual codelets, or spread from other nodes, is added to this base-level activation. The base-level activation of a slipnet node increases along a saturating, sigmoidal curve as the number of occurrences in consciousness of the node’s interpretations increases (see Figure 4). The node’s base-level activation decays at a rate inversely proportional to its magnitude. A low base-level activation decays quite rapidly, while a saturated base-level activation decays almost not at all (see Figure 5).

Links in the slipnet model relationships between nodes (concepts). Each link carries a weight that acts as a multiplier of activation spreading over the link. As with the base-level activation of nodes, the weight on a link increases along a saturating, sigmoidal curve according to the number of occurrences of its relationship in the contents of consciousness (see Figure 4). The weight of a link also decays at a rate inversely proportional to its magnitude, with a saturated weight decaying almost not at all (see Figure 5).

Figure 5. The decay curve of a slipnet node
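The update and decay rules for a slipnet node's base-level activation can be sketched as below. The same rule serves for a link's weight. We assume a logistic curve for the saturating sigmoid. A node's position on the curve is recovered from its current value by the inverse logistic. We read “decays at a rate inversely proportional to its magnitude” literally, as a per-step decrement of k divided by the current value. The constants and the class name `BaseLevel` are hypothetical.

```python
import math

EPS = 1e-9  # keeps values strictly inside (0, 1) before taking the inverse logistic


def logistic(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def logit(a: float) -> float:
    """Position on the logistic curve whose height is a."""
    a = min(max(a, EPS), 1.0 - EPS)
    return math.log(a / (1.0 - a))


class BaseLevel:
    """Base-level activation of a slipnet node, or the weight of a slipnet link."""

    def __init__(self, initial: float = 0.01, step: float = 0.01,
                 decay_k: float = 1e-5) -> None:
        self.value = initial      # a new node or link starts tiny
        self.step = step          # shift along the curve per conscious occurrence
        self.decay_k = decay_k    # decay constant

    def reinforce(self, occurrences: int = 1) -> None:
        """Move up the saturating sigmoid, one step per conscious occurrence."""
        self.value = logistic(logit(self.value) + self.step * occurrences)

    def decay(self, steps: int = 1) -> None:
        """Subtract decay_k / value per step: small values fade fast, large ones slowly."""
        for _ in range(steps):
            if self.value <= 0.0:
                break
            self.value = max(0.0, self.value - self.decay_k / self.value)
```

With these constants, a value of 0.05 loses about 0.0002 per decay step, while a value of 0.95 loses only about 0.00001. This matches the qualitative shape of Figure 5: weak traces fade quickly and saturated ones hardly at all.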

Recall that Step 5 of each of IDA’s cognitive cycles implements the broadcast postulated by global workspace theory. PM is updated simultaneously with the broadcast. The base-level activation of a slipnet node is increased whenever it represents an interpretation that appears in the broadcast contents of consciousness. Similarly, the weight on any slipnet link corresponding to a relationship appearing in the broadcast contents of consciousness is increased. In this way, IDA’s PM learns slowly with experience. In humans, with consciousness in cognitive cycles occurring roughly five times a second, this incremental learning may not be so slow.

The previous paragraph describes the updating of existing nodes and links. But are such nodes and links originally learned and, if so, how? The general outline of how this is accomplished is as follows. An attention codelet succeeds in bringing to consciousness a new individual, a recent instance of a new category, or some other form of a new concept. The absence of an existing node for this concept triggers a mechanism that produces an appropriate new node within the slipnet. Each relationship of the new concept with another individual, category or other concept within the current contents of consciousness triggers the generation of a new link between their respective nodes in the slipnet.

A new node begins with a tiny base-level activation level; a new link begins with a tiny weight. Thus a new node or link is likely to decay away unless it receives reinforcement from the contents of consciousness during subsequent cognitive cycles. Should one, for example, be introduced to a new person at a party, shake hands, exchange a greeting and move on, the new node for the person just met would have come to consciousness only a dozen or so times, not producing much in the way of base-level activation. On the other hand, a ten-minute conversation with the new acquaintance might result in some three thousand appearances in subsequent conscious contents, resulting in a much higher base-level activation. In the first case, a sighting of the new person a few weeks later might result in no recognition at all. In the second case, recognition should occur and bring with it memories of the conversation.
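The figure of some three thousand appearances follows from the rate of roughly five conscious cycles per second. This assumes that the new acquaintance features in nearly every conscious content during the conversation:

```latex
\[
10\ \text{min} \times 60\ \frac{\text{s}}{\text{min}} \times 5\ \frac{\text{cycles}}{\text{s}}
  = 3000\ \text{cycles},
\qquad \frac{3000}{12} = 250 .
\]
```

That is some 250 times the dozen or so appearances that follow a handshake and greeting. The two cases therefore end up at very different points on the sigmoidal curve of Figure 4.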

One might reasonably ask at this point about the role of perceptual codelets in the learning of interpretations. Just as Edelman postulates a primary repertoire of neuronal groups (1987), we propose a primary repertoire of perceptual codelets evolved into natural systems and built into artificial systems. As do behavior codelets (see the next section), perceptual codelets have base-level activation that affects the total activation they can pass to appropriate nodes after noting the particular features with which they are concerned. Each conscious broadcast leads to the updating of these base-level activations just as happens for behavior codelets. In this way, degeneracy [10] plays a role. Each broadcast also results in appropriate associations between perceptual codelets and newly added nodes in perceptual memory.

One had best not think of IDA’s slipnet implementation of PM as modeling neurons and their connections. A node would not correspond to a neuron. Nor do we think it would correspond to a collection of neurons. Perhaps it would be best to think of the slipnet as modeling, in the dynamical systems sense, an attractor landscape, with nodes corresponding to attractors and links to their boundaries. The well-known decades of difficulty computer scientists have had with pattern recognition lead us to believe that only a dynamical systems approach is likely to be successful in the real world. This approach has certainly been successful in modeling classification by means of olfaction in rabbits (Skarda and Freeman 1987; Freeman and Skarda 1990; Freeman and Kozma 2000).

Our account of procedural learning is based on consciousness providing reinforcement to actions. Global workspace theory has actions performed by special purpose processors. These are implemented in the IDA model by behavior codelets. We take them as neurally corresponding to neuronal groups (Edelman 1987). Edelman postulates an organism being born with an initial repertoire of neuronal groups. An initial set of primitive actions, implemented by primitive codelets or some such, is a computational necessity for any autonomous agent, natural or artificial, software or robotic (Franklin 1997).

In the IDA model codelets have preconditions that must be satisfied in order that the codelet can act. They also have post-conditions that are expected after the actions take place. Each codelet also has a numerical activation that roughly measures its relevance and importance to the current situation. Part of this activation is base-level activation similar to that described for slipnet nodes above. Behavior codelets acquire environmental activation during Step 7 of each cognitive cycle (see above) in proportion to their preconditions being satisfied. In that same step, a behavior codelet may receive activation if its post-conditions help to satisfy some goal or drive as implemented by an emotion or feeling.

Collections of primitive behavior codelets form behaviors. This corresponds to collections of processors forming goal contexts in global workspace theory. Behaviors also have pre- and post-conditions derived from those of their underlying codelets. The activation of a behavior is always the sum of the activations of its codelets. Thus, behaviors acquire activation from their codelets. They may also receive activation from other behaviors, which they must share with their codelets.

Collections of behaviors, called behavior streams, with activation passing links between them, correspond to goal context hierarchies in global workspace theory. They can be thought of as partial plans of actions. Also during Step 7, behavior streams are instantiated into an ongoing, activation passing behavior net. In Step 8 of each cycle, the behavior net chooses exactly one behavior to execute. The behavior net is IDA’s action selection mechanism. More details of the operation of this action selection mechanism have been published elsewhere (Negatu and Franklin 2002).
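The relationships among codelet activation, behavior activation and the choice of a single behavior can be sketched as below. This is a drastic simplification and not the behavior net of Negatu and Franklin (2002), which also passes activation along links between behaviors. Several details are our assumptions. A codelet's activation is its base-level activation, plus environmental activation (the fraction of its preconditions satisfied), plus any goal activation. A behavior's preconditions are the union of its codelets' preconditions. The threshold of 1.0 is arbitrary. All class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class BehaviorCodelet:
    name: str
    base_level: float
    preconditions: Set[str]
    goal_activation: float = 0.0  # from drives and goals (assumed given)

    def activation(self, situation: Set[str]) -> float:
        # Environmental activation: proportional to the preconditions satisfied.
        if self.preconditions:
            env = len(self.preconditions & situation) / len(self.preconditions)
        else:
            env = 1.0
        return self.base_level + env + self.goal_activation


@dataclass
class Behavior:
    name: str
    codelets: List[BehaviorCodelet] = field(default_factory=list)

    def preconditions(self) -> Set[str]:
        # Derived from the preconditions of the underlying codelets.
        return set().union(*(c.preconditions for c in self.codelets))

    def activation(self, situation: Set[str]) -> float:
        # The activation of a behavior is the sum of its codelets' activations.
        return sum(c.activation(situation) for c in self.codelets)


def select_behavior(behaviors: List[Behavior], situation: Set[str],
                    threshold: float = 1.0) -> Optional[Behavior]:
    """Choose exactly one executable behavior (all preconditions met) with the
    highest activation at or above `threshold`; None if nothing qualifies."""
    executable = [b for b in behaviors
                  if b.preconditions() <= situation
                  and b.activation(situation) >= threshold]
    return max(executable, key=lambda b: b.activation(situation), default=None)


answer = Behavior("answer phone", [BehaviorCodelet("lift receiver", 0.3, {"phone ringing"}, 0.5)])
screen = Behavior("let machine screen", [BehaviorCodelet("wait", 0.2, {"phone ringing"})])
keep_writing = Behavior("keep writing", [BehaviorCodelet("type", 0.4, {"at desk", "draft open"})])
behaviors = [answer, screen, keep_writing]

chosen = select_behavior(behaviors, {"phone ringing", "at desk"})
print(chosen.name if chosen else None)
```

In the phone example, answering wins with an activation of 1.8 against 1.2 for screening. Continuing to write is not executable, because one of its preconditions is unmet.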

Our account also employs Edelman’s notion of degeneracy, postulating that several different neuronal groups can accomplish roughly the same task. In the IDA conceptual model, the repertoire of behavior codelets is also taken to be degenerate. Thus, two distinct behavior streams may be partial plans with the same goal, but may employ different behaviors to get there. Procedural learning in the IDA conceptual model is the consciously driven process of shaping a behavior stream via reinforcement.

According to the IDA model, procedural learning proceeds as follows. In one cognitive cycle, a behavior is selected for execution by IDA’s behavior net. The codelets that comprise that behavior all become active, including at least one expectation codelet concerned with bringing to consciousness the results of the actions taken by the behavior’s behavior codelets. Typically, the results of some of these actions will be brought to consciousness by one of these expectation codelets during a subsequent cycle. During Step 5, the broadcast step of that cycle, the base-level activations of the responsible behavior codelets are adjusted upward or downward by an internally generated reinforcement signal, according to whether the action was successful or not, as determined by the expectation codelet. The adjustment is made by means of a curve such as that in Figure 6 using the following procedure. Find the point on the curve whose y-value is the current base-level activation of the behavior codelet. According to whether the action succeeded or failed, extend a horizontal line segment of unit length to the right or left of the point. (The unit is an adjustable parameter.) A vertical line through the endpoint of this segment intersects the curve at a point whose y-value is the new base-level activation.

Following this reinforcement procedure, successful behavior codelets with low base-level activation increase their activation rapidly. They learn quickly. Unsuccessful behavior codelets with low base-level activation decrease their activation even more rapidly. This learning on the part of a behavior codelet is done in the context of its current behavior (goal context) and behavior stream (goal context hierarchy). The change in the behavior codelet’s base-level activation can be expected to change the likelihood of its behavior stream being instantiated in a future similar situation, and to change the likelihood of its behavior being executed. Learning in context will have occurred. For example, in the case of an unsuccessful behavior codelet, a similar behavior stream with a behavior like the original, but with the unsuccessful codelet replaced with another with similar results, may be chosen the next time. Degeneracy is required here.
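The exact shape of the curve in Figure 6 is not given in the text, so the sketch below assumes a concave, increasing, saturating curve, f(x) = 1 - e^(-x) for x ≥ 0, chosen because it reproduces the qualitative behavior just described: large gains at low activation, and losses that exceed gains at the same level. The function names, the clamp at x = 0, and the default unit of 0.5 are assumptions. The procedure itself follows the text: invert the curve, step one unit right or left, and read off the new base-level activation.

```python
import math


def curve(x: float) -> float:
    # Assumed stand-in for Figure 6: concave, increasing, bounded by 1.
    return 1.0 - math.exp(-x)


def inverse_curve(y: float) -> float:
    # x-value at which the assumed curve reaches activation y (0 <= y < 1).
    return -math.log(1.0 - y)


def reinforce(base_level: float, succeeded: bool, unit: float = 0.5) -> float:
    """Return the new base-level activation of a behavior codelet."""
    # Keep y inside the curve's range so the inverse is defined.
    y = min(max(base_level, 0.0), 1.0 - 1e-9)
    # Find the point on the curve whose y-value is the current activation.
    x = inverse_curve(y)
    # Step one unit right on success, left on failure (clamped at x = 0).
    x = x + unit if succeeded else max(0.0, x - unit)
    # The curve's value at the new x is the new base-level activation.
    return curve(x)
```

Under this assumed curve, a success at activation 0.2 gains about 0.31 while a success at 0.8 gains only about 0.08, and at 0.5 a failure costs about 0.32 against a gain of about 0.20 for a success.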

The learning procedure just described is part of the IDA conceptual model that has not yet been implemented computationally.

Figure 6. Procedural Learning Curve

It is well known that people tend to overestimate the probability of divorce if they can quickly recall instances of divorced acquaintances. This principle also applies to frequency estimates, and is referred to as the availability heuristic (Kahneman, Slovic and Tversky 1982; Fiske and Taylor 1991). An online demonstration of the heuristic (Colston and Walter 2001) asks the subject to review a list of names of well-known people, one name presented at each mouse click, to see if the subject knows them. No mention is made of gender. After viewing the last name in the list, the subject is presented with a forced choice as to whether he or she had seen more men’s names or more women’s. Since the men named tended to be more famous, and hence more easily recalled, the availability heuristic would correctly predict that most subjects would claim that there were more men’s names on the list. There are, in fact, fourteen of each. In this section, we analyze this task using IDA’s cognitive cycle to see what the IDA model would predict for a human subject.

The initial instructions comprise a text of some thirty-seven words. Reading and understanding them will likely occupy a subject for a few seconds, that is, for a few tens of cognitive cycles. During the last of these cycles, the gist of the meaning of the instructions will have accumulated in the appropriate preconscious working memory buffer. The conscious broadcast of these meanings will likely instantiate a goal context hierarchy for sequentially clicking through and seeing the names, noting whether they are recognized. The action chosen during this cycle will likely be a mouse click bringing up the first name.
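The cycle count follows from the hypothesized rate of five to ten cognitive cycles per second. Taking "a few seconds" to mean roughly two to four seconds (our assumption), the estimate works out as:

```latex
N_{\text{cycles}} \approx r\,t, \qquad
r \in [5, 10]\ \mathrm{s}^{-1}, \quad t \in [2, 4]\ \mathrm{s}
\;\Longrightarrow\; N_{\text{cycles}} \in [10, 40]
```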

One to three or four cycles will likely suffice to preconsciously perceive the entire name, which will have accumulated in the appropriate preconscious working memory buffer. Here we must consider two cases: 1) the name has been recognized during the preconscious perceptual process (see the description of perceptual memory above), or 2) it has not. Recognition of the name John Doe has occurred if the subject can answer the question “Who is John Doe?” In the first case, after the conscious broadcast the behavior net will likely choose a mouse click as an action. This would bring up another name. In the second case, a conscious goal to consult declarative memory in search of recognition would likely arise over several cognitive cycles (see major hypothesis 7 on voluntary memory retrieval above). Such a conscious goal would produce an attention codelet on the lookout for information concerning the as yet unrecognized name. In Step 3 of the next cycle, the name, by then located in a preconscious working memory buffer, will be used to cue declarative memory. This voluntary memory retrieval process may iterate over several additional cycles, with the parts of local associations that make it to consciousness contributing to the cue for the next local associations. The subject will eventually recognize the name or will give up the effort on a subsequent cycle. In either case, the action chosen on this last cycle is likely to be a mouse click for the next name.
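The iterative retrieval in the second case can be sketched as a loop in which each cycle's cue retrieves local associations from declarative memory and whatever surfaces is folded into the next cue. This is a minimal sketch assuming declarative memory is a plain dictionary from items to associated items, that recognition means some association answers "Who is ...?", and that the subject gives up when nothing new surfaces or a cycle budget runs out; `voluntary_recognition`, `dm`, `answers`, and `max_cycles` are hypothetical names.

```python
from typing import Dict, Set, Tuple


def voluntary_recognition(name: str,
                          dm: Dict[str, Set[str]],
                          answers: Set[str],
                          max_cycles: int = 5) -> Tuple[bool, int]:
    """Return (recognized, cycles_used) for a name not recognized preconsciously."""
    if max_cycles < 1:
        raise ValueError("max_cycles must be at least 1")
    cue: Set[str] = {name}
    for cycle in range(1, max_cycles + 1):
        # Step 3: the cue in working memory retrieves local associations from DM.
        local: Set[str] = set()
        for item in cue:
            local |= dm.get(item, set())
        # Recognition: some association answers "Who is <name>?"
        if local & answers:
            return True, cycle
        new_items = local - cue
        if not new_items:
            return False, cycle  # nothing new surfaced: give up
        # Associations that reach consciousness join the next cue.
        cue |= new_items
    return False, max_cycles
```

For example, with `dm = {"John Doe": {"actor"}, "actor": {"starred in X"}}` and `answers = {"starred in X"}`, recognition succeeds on the second cycle, while an unknown name is abandoned on the first.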

Thus the subject will work his or her way through the list of names, recognizing many or most of them but missing some. At the mouse click following the last name, a new set of instructions appears. These instructions, in sixteen words, ask the subject to decide whether more men’s or women’s names were on the list, and to click the mouse when the decision is made. Only a few tens of cycles are spent understanding these instructions.

The conscious broadcast whose contents contain the full understanding of the instructions will likely recruit behavior codelets that instantiate a goal context hierarchy to comply. During Step 8 of some subsequent cycles, behaviors (goal contexts) will likely be selected that will attempt to query transient episodic memory (TEM), starting the recall process for names on the list. The behavior’s codelets will write this goal to preconscious buffers of working memory (WM) where it will serve to cue local associations from TEM and declarative memory (DM). The next contents of consciousness will be chosen from the resulting long-term working memory (LTWM).

This process can be expected to continue over many subsequent cycles, with each cue from WM containing material from previous local associations from DM. Since the names were encoded in TEM as distinct events, those retrieved from TEM can be expected to appear as such in LTWM when associated with the latest cue. From there, each recovered name is likely to appear as the central content of its own coalition of attention and information codelets competing for consciousness. Thus, the IDA model would predict that only one name would be recalled at a time, since a single coalition must win the competition. More famous names would have their initial coalitions replaced during subsequent cycles, with expanded coalitions including information from their many local associations in DM. Thus, they would accrue an advantage in the next competition for consciousness, so that more famous names are more likely to be recalled. A name on the list could fail to be recalled for either of two reasons. First, it might not have been retrieved from TEM by any of the cues used during the process. Second, it may have been retrieved into LTWM but decayed away before it could be part of a coalition that won the competition for consciousness.
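The recall dynamics just described can be caricatured in a short simulation. In this sketch, which is an assumption throughout rather than IDA code, each name is cued out of TEM with a fixed probability, enters LTWM with an activation that grows with fame (standing in for the richer DM associations of famous names), and then competes: one coalition wins consciousness per cycle, while the rest decay and drop out below a threshold. The name `recall_sequence` and all parameter values are hypothetical.

```python
import random
from typing import Dict, List, Tuple

Name = Tuple[str, str, float]  # (name, gender, fame in [0, 1])


def recall_sequence(names: List[Name], seed: int = 0,
                    p_retrieve: float = 0.8, decay: float = 0.9,
                    threshold: float = 0.2) -> List[Tuple[str, str]]:
    """Return the recalled (name, gender) pairs in order of recall."""
    rng = random.Random(seed)
    # Names cued out of TEM enter LTWM; activation rises with fame.
    ltwm: Dict[Tuple[str, str], float] = {}
    for name, gender, fame in names:
        if rng.random() < p_retrieve:
            ltwm[(name, gender)] = 0.3 * rng.random() + 0.7 * fame
    recalled: List[Tuple[str, str]] = []
    while ltwm:
        # A single coalition wins each competition: one name at a time.
        winner = max(ltwm, key=lambda k: ltwm[k])
        recalled.append(winner)
        del ltwm[winner]
        # Everything else in LTWM decays; items below threshold are lost.
        ltwm = {k: a * decay for k, a in ltwm.items() if a * decay >= threshold}
    return recalled
```

With fourteen "famous" names (fame 0.9) and fourteen less famous ones (fame 0.3), the less famous names lose every early competition and decay away, so the recalled list is dominated by the famous names, which is the qualitative pattern the availability heuristic describes.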

At some point in this process, a decision as to more men’s names or more women’s is taken. How does that happen? Some subjects may actually instantiate a goal context hierarchy (behavior stream) that keeps separate tallies in WM of the number of men’s names and of women’s names recalled. These tallies are likely to be part of the conscious contents in at least some of the ongoing cycles in the process. When no more names are being recalled, a goal context (behavior) to decide is chosen. The decision is then made and, on a subsequent cycle, the mouse is clicked.

We think this is a relatively unlikely scenario, in that most subjects will not keep such explicit running tallies. Rather, they will decide on the basis of a fringe consciousness feeling that one gender or the other has been recalled more often (Mangan 2001). In the IDA model, such feelings are to be implemented as fringe attention codelets. In this case, a selected behavior would give rise to two such fringe attention codelets, one for each gender. As a name of one gender is recalled, the activation of the corresponding fringe attention codelet is increased. Each of these fringe attention codelets likely enters each competition for consciousness as the process progresses. The stronger of the two will be able to win only after names are no longer being recalled, since until then a coalition carrying a newly recalled name would have a higher average activation. Such fringe consciousness feelings are easily defeated.
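A minimal sketch of the two fringe attention codelets follows. It assumes that the recall process delivers one recalled gender per cycle, or `None` on a cycle in which no new name is recalled, that each recall adds a fixed increment to the matching codelet, and that a tie yields no decision; the function name and the increment are hypothetical.

```python
from typing import Dict, Iterable, Optional


def fringe_decision(recall_stream: Iterable[Optional[str]],
                    increment: float = 0.1) -> Optional[str]:
    """recall_stream yields 'men', 'women', or None (no name recalled)."""
    fringe: Dict[str, float] = {"men": 0.0, "women": 0.0}

    def stronger() -> Optional[str]:
        if fringe["men"] == fringe["women"]:
            return None  # no felt difference to act on
        return max(fringe, key=lambda g: fringe[g])

    for gender in recall_stream:
        if gender is None:
            # No name coalition this cycle, so the stronger fringe
            # attention codelet can finally win consciousness.
            return stronger()
        # A coalition carrying a newly recalled name outcompetes the
        # fringe codelets; the matching codelet gains activation.
        fringe[gender] += increment
    # The stream ended, so recall has stopped.
    return stronger()
```

Because the decision is taken at the first cycle without a recalled name, a feeling can settle the question before recall is truly exhausted, which fits the remark that such feelings are easily defeated.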

Thus the IDA model predicts the experienced outcome of this demonstration of the availability heuristic, and supports the commonly given functional explanation. What the model adds is a hypothesized, detailed mechanism underlying that functional process.

Our example of an analysis of a process dissociation experiment using the IDA cycle will require some understanding of how the IDA model handles voluntary action selection (Franklin 2000b; Kondadadi and Franklin 2001). In this section, we provide a brief summary of that process.

We humans most often select actions subconsciously, that is, without conscious thought. But we also make voluntary choices of action. Baars argues that such voluntary choice is the same as conscious choice (1997, p. 131). We must carefully distinguish between being conscious of the results of an action and consciously deciding to take that action, that is, consciously deliberating on the decision. It is the latter case that constitutes voluntary action. William James proposed the ideomotor theory of voluntary action (James 1890). James suggested that any idea (internal proposal) for an action that comes to mind (to consciousness) is acted upon unless it provokes some opposing idea or counter-proposal. GW theory adopts James’ ideomotor theory “as is” (Baars 1988, Chapter 7) and provides a functional architecture for it. The IDA model furnishes an underlying mechanism that implements the ideomotor theory of volition and its architecture in a software agent.

Suppose that in the experiment described in the previous section, the subject has reached the stage of deciding upon a more frequent gender. The players in this decision-making process include proposing and objecting attention codelets and a timekeeper codelet. A proposing attention codelet’s task is to propose a certain decision, in this case a gender. Choosing a decision to propose on the basis of the codelet’s particular pattern of preferences (in this case for one gender), the proposing attention codelet brings information about itself and the proposed decision to “consciousness” so that the timekeeper codelet and others can know of it. Its preference pattern may include several different issues with differing weights assigned to each, although that does not happen in our example. If no objecting attention codelet objects (by bringing itself to “consciousness” with an objecting message), and if no other proposing attention codelet proposes a different decision within a given span of time, the timekeeper codelet will decide on the proposed decision. If an objection or a new proposal is made in a timely fashion, the timekeeper codelet stops timing or resets the timing for the new proposal.

Two proposing attention codelets may alternately propose the same two decisions several times. Several mechanisms tend to prevent continuing oscillation. Each time a codelet proposes the same decision, it does so with less activation and so has less chance of coming to “consciousness.” Also, the timekeeper loses patience as the process continues, thereby diminishing the time span required for a decision.
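The timekeeper's role can be sketched as a small state machine over what reaches "consciousness" on each cycle. This sketch assumes events of the form `("propose", decision)`, `("object", decision)`, or `None` for a cycle with no relevant content, measures the time span in cycles, and models lost patience as a one-cycle reduction at each competing proposal. It covers the timekeeper only, not the declining activation of repeated proposals; the function name and parameters are hypothetical.

```python
from typing import List, Optional, Tuple

Event = Optional[Tuple[str, str]]  # ("propose" | "object", decision) or None


def timekeeper(events: List[Event], patience: int = 3,
               min_patience: int = 1) -> Optional[str]:
    """Return the decision taken, or None if no decision was reached."""
    current: Optional[str] = None
    timer = 0
    seen_proposal = False
    for event in events:
        if event is None:
            # An unopposed proposal keeps the timer running.
            if current is not None:
                timer += 1
                if timer >= patience:
                    return current  # ideomotor: act on the standing proposal
            continue
        kind, decision = event
        if kind == "propose" and decision != current:
            # A competing proposal resets the timing; the timekeeper
            # also loses patience (assumed: one cycle per new proposal).
            if seen_proposal:
                patience = max(min_patience, patience - 1)
            seen_proposal = True
            current, timer = decision, 0
        elif kind == "object" and decision == current:
            # An objection stops the timing.
            current, timer = None, 0
    return None
```

If "men" and "women" are proposed alternately, each new proposal shortens the required span, so an alternation that would otherwise continue indefinitely ends once the patience reaches its minimum.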

The IDA model also serves as a tool with which to analyze and qualitatively predict and/or explain the results of psychological experiments. Here, we offer as an example an analysis of a well-known process dissociation experiment designed to measure conscious vs. unconscious memory (Jacoby 1991). After being given a list of twenty or so two-syllable words to study one at a time for a few seconds each, subjects were tested under an inclusion and an exclusion condition. When given the first syllable of a word, a subject was asked under the inclusion condition to complete the word with one on the list, if possible, and to guess otherwise. Under the exclusion condition, the subject was to complete the word while avoiding those words previously seen on the list. Errors during the exclusion condition illustrated unconscious (implicit) memory, i.e. the unconscious influence of previously seen, but not consciously remembered, words. Such errors were made at above-chance levels. Can the IDA model account for these results?
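For reference, the process dissociation procedure separates the two influences with a pair of equations under its standard assumption that recollection (R) and automatic influence (A) act independently: inclusion performance is I = R + A(1 - R) and exclusion errors are E = A(1 - R). The helper below is not part of the IDA model; it simply solves those two equations, and its name is hypothetical.

```python
from typing import Tuple


def process_dissociation(inclusion: float, exclusion: float) -> Tuple[float, float]:
    """Estimate (R, A) from the probabilities of completing a stem with a
    studied word under the inclusion and exclusion conditions."""
    # I - E = R, since the automatic term A(1 - R) appears in both.
    recollection = inclusion - exclusion
    if recollection >= 1.0:
        raise ValueError("recollection estimate must be below 1")
    # E = A(1 - R)  =>  A = E / (1 - R)
    automatic = exclusion / (1.0 - recollection)
    return recollection, automatic
```

For example, inclusion and exclusion rates of 0.6 and 0.3 give R = 0.3 and A = 0.3 / 0.7, about 0.43. Above-chance exclusion errors correspond to a nonzero A.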